Control Systems/Print version


The Wikibook of automatic

And Control Systems Engineering

With

Classical and Modern Techniques

And

Advanced Concepts

Preface

This book will discuss the topic of Control Systems, which is an interdisciplinary engineering topic. Methods considered here will consist of both "Classical" control methods, and "Modern" control methods. Also, discretely sampled systems (digital/computer systems) will be considered in parallel with the more common analog methods. This book will not focus on any single engineering discipline (electrical, mechanical, chemical, etc.), although readers should have a solid foundation in the fundamentals of at least one discipline.

This book will require prior knowledge of linear algebra, integral and differential calculus, and at least some exposure to ordinary differential equations. In addition, prior knowledge of integral transforms, specifically the Laplace and Z transforms, will be very beneficial. Also, prior knowledge of the Fourier Transform will shed more light on certain subjects. Wikibooks with information on calculus topics or transformation topics required for this book will be listed below:

Table of Contents


Special Pages

| Print Version: | Full Print version | Warning: Print version is over 230 pages long as of 10 Feb, 2014. |

| PDF Version: | PDF Version | Warning: PDF version is over 5.4MB, as of 21 Jan, 2014. |

| Cover Page: | Cover Page | Cover Image |

| All Pages: | Page Listing | All Versions |

| Book Policy: | Policy | Local Manual of Style |


Controls Introduction

Classical Control Methods

Modern Control Methods

- State-Space Equations

- Linear System Solutions

- Time-Variant System Solutions

- Digital State-Space

- Eigenvalues and Eigenvectors

- Standard Forms

- MIMO Systems

- Realizations

System Representation

Stability

- Stability

- State-Space Stability

- Discrete-Time Stability

- Routh-Hurwitz Criterion

- Jury's Test

- Root Locus

- Nyquist Stability Criterion

Controllers and Compensators

- Controllability and Observability

- System Specifications

- State Feedback

- Estimators and Observers

- Eigenvalue Assignment for MIMO Systems

- Controllers and Compensators

- Polynomial Design

Adaptive Control

Nonlinear Systems

Noisy Systems

Introduction to Digital Controls

- Digital Control Systems

- Discrete-Time Stability

- System Delays

- Sampled Data Systems

- Z Transform Mappings

Linear Matrix Inequalities in Control

Examples

Appendices

- Physical Models

- Transforms

- System Representations

- Matrix Operations

- Using MATLAB ®

- Using Octave

- Using Julia

- Using Scilab

- Using Python

Resources, Glossary, and License

Introduction to Control Systems

What are control systems? Why do we study them? How do we identify them? The chapters in this section should answer these questions and more.

Introduction

This Wikibook

This book was written at Wikibooks, a free online community where people write open-content textbooks. Any person with internet access is welcome to participate in the creation and improvement of this book. Because this book is continuously evolving, there are no finite "versions" or "editions" of this book. Permanent links to known good versions of the pages may be provided.

What are Control Systems?

The study and design of automatic Control Systems, a field known as control engineering, has become important in modern technical society. From devices as simple as a toaster or a toilet, to complex machines like space shuttles and power steering, control engineering is a part of our everyday life. This book introduces the field of control engineering and explores some of the more advanced topics in the field. Note, however, that control engineering is a very large field and this book serves only as a foundation of control engineering and an introduction to selected advanced topics in the field. Topics in this book are added at the discretion of the authors and represent the available expertise of our contributors.

Control systems are components that are added to other components to increase functionality or meet a set of design criteria. For example:

We have a particular electric motor that is supposed to turn at a rate of 40 RPM. To achieve this speed, we must supply 10 Volts to the motor terminals. However, with 10 volts supplied to the motor at rest, it takes 30 seconds for our motor to get up to speed. This is valuable time lost.

This simple example can be complex to both users and designers of the motor system. It may seem obvious that the motor should start at a higher voltage so that it accelerates faster. Then we can reduce the supply back down to 10 volts once it reaches ideal speed.

This is clearly a simplistic example but it illustrates an important point: we can add special "Controller units" to preexisting systems to improve performance and meet new system specifications.
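The motor example can be sketched numerically. The following is a minimal illustration, not a model of any real motor: it assumes a first-order motor whose speed settles at 4 RPM per volt with a time constant of 7.5 seconds (values chosen here so that a constant 10 V supply takes roughly 30 seconds to come within 2% of 40 RPM), and a hypothetical "controller" that applies 20 V until the motor is near the target speed.

```python
# Assumed first-order motor model: d(speed)/dt = (k * V - speed) / tau.
# k, tau, and the 20 V boost level are illustrative assumptions.

TARGET_RPM = 40.0


def simulate_motor(voltage_at, k_rpm_per_volt=4.0, tau=7.5, dt=0.01, t_end=40.0):
    """Integrate the motor model with forward Euler.

    voltage_at(speed) returns the supply voltage for the current speed.
    Returns a list of (time, speed) samples.
    """
    speed, t = 0.0, 0.0
    samples = [(t, speed)]
    while t < t_end:
        volts = voltage_at(speed)
        speed += dt * (k_rpm_per_volt * volts - speed) / tau
        t += dt
        samples.append((t, speed))
    return samples


def time_to_reach(samples, level):
    """Return the first sampled time at which speed >= level, or None."""
    for t, speed in samples:
        if speed >= level:
            return t
    return None


def constant_supply(speed):
    # No controller: always 10 V.
    return 10.0


def boosted_supply(speed):
    # Hypothetical controller: 20 V until within 2% of target, then 10 V.
    return 20.0 if speed < 0.98 * TARGET_RPM else 10.0


plain = simulate_motor(constant_supply)
boosted = simulate_motor(boosted_supply)

t_plain = time_to_reach(plain, 0.98 * TARGET_RPM)
t_boost = time_to_reach(boosted, 0.98 * TARGET_RPM)
print(f"constant 10 V: {t_plain:.1f} s, boosted: {t_boost:.1f} s")
```

Under these assumptions the boosted supply reaches the neighborhood of 40 RPM several times faster than the constant supply, and both settle at the same final speed once 10 V is applied.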

Here are some formal definitions of terms used throughout this book:

- Control System

- A Control System is a device, or a collection of devices that manage the behavior of other devices. Some devices are not controllable. A control system is an interconnection of components connected or related in such a manner as to command, direct, or regulate itself or another system.

A Control System is also a conceptual framework for designing systems that regulate and/or track a signal to give a desired performance. This requires a set of measurable signals that reveal the performance, a second set of signals that can be manipulated to influence how the system evolves in time, and a third set that cannot be measured but disturbs that evolution.

- Controller

- A controller is a control system that manages the behavior of another device or system (using Actuators). The controller is usually fed with some input signal from outside the system which commands the system to provide desired output. In a closed loop system, the signal is preprocessed with the sensor's signal from inside the system.

- Actuator

- An actuator is a device that takes in a signal from the controller and carries out some action to affect the system accordingly.

- Compensator

- A compensator is a control system that regulates another system, usually by conditioning the input or the output to that system. Compensators are typically employed to correct a single design flaw with the intention of minimizing effects on other aspects of the design.

There are essentially two methods to approach the problem of designing a new control system: the Classical Approach and the Modern Approach.

Classical and Modern

Classical and Modern control methodologies are named in a misleading way, because the group of techniques called "Classical" were actually developed later than the techniques labeled "Modern". However, in terms of developing control systems, Modern methods have been used to great effect more recently, while the Classical methods have been gradually falling out of favor. Most recently, it has been shown that Classical and Modern methods can be combined to highlight their respective strengths and weaknesses.

Classical Methods, which this book will consider first, are methods involving the Laplace Transform domain. Physical systems are modeled in the so-called "time domain", where the response of a given system is a function of the various inputs, the previous system values, and time. As time progresses, the state of the system and its response change. However, time-domain models for systems are frequently modeled using high-order differential equations which can become impossibly difficult for humans to solve and some of which can even become impossible for modern computer systems to solve efficiently. To counteract this problem, integral transforms, such as the Laplace Transform and the Fourier Transform, can be employed to change an Ordinary Differential Equation (ODE) in the time domain into a regular algebraic polynomial in the transform domain. Once a given system has been converted into the transform domain it can be manipulated with greater ease and analyzed quickly by humans and computers alike.
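As a small worked illustration of this idea, consider a first-order system driven by a unit step, with zero initial condition. The constant a > 0 and the symbols y and Y are assumptions introduced only for this sketch. Taking the Laplace Transform turns the differential equation into an algebraic one that can be solved for Y(s) and then inverted:

```latex
\begin{align*}
\frac{dy(t)}{dt} + a\,y(t) &= u(t), \qquad y(0) = 0 \\
s\,Y(s) + a\,Y(s) &= \frac{1}{s} \\
Y(s) &= \frac{1}{s(s + a)} = \frac{1}{a}\left(\frac{1}{s} - \frac{1}{s + a}\right) \\
y(t) &= \frac{1}{a}\left(1 - e^{-at}\right), \qquad t \geq 0
\end{align*}
```

The only calculus needed is hidden inside the transform pair; the intermediate steps are ordinary algebra on polynomials in s.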

Modern Control Methods, instead of changing domains to avoid the complexities of time-domain ODE mathematics, convert the differential equations into a system of lower-order time domain equations called State Equations, which can then be manipulated using techniques from linear algebra. This book will consider Modern Methods second.

A third distinction that is frequently made in the realm of control systems is to divide analog methods (classical and modern, described above) from digital methods. Digital Control Methods were designed to try and incorporate the emerging power of computer systems into previous control methodologies. A special transform, known as the Z-Transform, was developed that can adequately describe digital systems, but at the same time can be converted (with some effort) into the Laplace domain. Once in the Laplace domain, the digital system can be manipulated and analyzed in a very similar manner to Classical analog systems. For this reason, this book will not make a hard and fast distinction between Analog and Digital systems, and instead will attempt to study both paradigms in parallel.

Who is This Book For?

This book is intended to accompany a course of study in undergraduate and graduate engineering. As has been mentioned previously, this book is not focused on any particular discipline within engineering; however, any person who wants to make use of this material should have some basic background in the Laplace transform (if not other transforms), calculus, etc. The material in this book may be used to accompany several semesters of study, depending on the program of your particular college or university. The study of control systems is generally reserved for students in their 3rd or 4th year of a 4-year undergraduate program, because it requires so much prerequisite knowledge. Some of the more advanced topics may not be covered until later in a graduate program.

Many colleges and universities only offer one or two classes specifically about control systems at the undergraduate level. Some universities, however, do offer more than that, depending on how the material is broken up and how much depth is covered. Also, many institutions will offer a handful of graduate-level courses on the subject. This book will attempt to cover the topic of control systems from both a graduate and undergraduate level, with the advanced topics built on the basic topics in a way that is intuitive. As such, students should be able to begin reading this book in any place that seems an appropriate starting point, and should be able to finish reading where further information is no longer needed.

What are the Prerequisites?

Understanding of the material in this book will require a solid mathematical foundation. This book does not currently explain, nor will it ever try to fully explain most of the necessary mathematical tools used in this text. For that reason, the reader is expected to have read the following wikibooks, or have background knowledge comparable to them:

- Algebra

- Calculus

- The reader should have a good understanding of differentiation and integration. Partial differentiation, multiple integration, and functions of multiple variables will be used occasionally, but the students are not necessarily required to know those subjects well. These advanced calculus topics could better be treated as a co-requisite instead of a pre-requisite.

- Linear Algebra

- State-space system representation draws heavily on linear algebra techniques. Students should know how to operate on matrices. Students should understand basic matrix operations (addition, multiplication, determinant, inverse, transpose). Students would also benefit from a prior understanding of Eigenvalues and Eigenvectors, but those subjects are covered in this text.

- Ordinary Differential Equations

- All linear systems can be described by a linear ordinary differential equation. It is beneficial, therefore, for students to understand these equations. Much of this book describes methods to analyze these equations. Students should know what a differential equation is, and they should also know how to find the general solutions of first and second order ODEs.

- Engineering Analysis

- This book reinforces many of the advanced mathematical concepts used in the Engineering Analysis book, and we will refer to the relevant sections in the aforementioned text for further information on some subjects. This is essentially a math book, but with a focus on various engineering applications. It relies on a previous knowledge of the other math books in this list.

- Signals and Systems

- The Signals and Systems book will provide a basis in the field of systems theory, of which control systems is a subset. Readers who have not read the Signals and Systems book will be at a severe disadvantage when reading this book.

How is this Book Organized?

This book is organized to follow a particular progression. First, this book will discuss the basics of system theory, and it will offer a brief refresher on integral transforms. Section 2 will contain a brief primer on digital information, for students who are not necessarily familiar with it. This is done so that digital and analog signals can be considered in parallel throughout the rest of the book. Next, this book will introduce the state-space method of system description and control. After section 3, topics in the book will use state-space and transform methods interchangeably (and occasionally simultaneously). It is important, therefore, that these three chapters be well read and understood before venturing into the later parts of the book.

After the "basic" sections of the book, we will delve into specific methods of analyzing and designing control systems. First we will discuss Laplace-domain stability analysis techniques (Routh-Hurwitz, root-locus), and then frequency methods (Nyquist Criteria, Bode Plots). After the classical methods are discussed, this book will then discuss Modern methods of stability analysis. Finally, a number of advanced topics will be touched upon, depending on the knowledge level of the various contributors.

As the subject matter of this book expands, so too will the prerequisites. For instance, when this book is expanded to cover nonlinear systems, a basic background knowledge of nonlinear mathematics will be required.

Versions

This wikibook has been expanded to include multiple versions of its text, differentiated by the material covered, and the order in which the material is presented. Each different version is composed of the chapters of this book, included in a different order. This book covers a wide range of information, so if you don't need all the information that this book has to offer, perhaps one of the other versions would be right for you and your educational needs.

Each separate version has a table of contents outlining the different chapters that are included in that version. Also, each separate version comes complete with a printable version, and some even come with PDF versions as well.

Take a look at the All Versions Listing Page to find the version of the book that is right for you and your needs.

Differential Equations Review

Implicit in the study of control systems is the underlying use of differential equations. Even if they aren't visible on the surface, all of the continuous-time systems that we will be looking at are described in the time domain by ordinary differential equations (ODE), some of which are relatively high-order.

Let's review some differential equation basics. Consider the topic of interest from a bank. The amount of interest accrued on a given principal balance (the amount of money you put into the bank) P, is given by:

$$\frac{dP}{dt} = rP$$

Where $\frac{dP}{dt}$ is the interest (rate of change of the principal), and r is the interest rate. Notice in this case that P is a function of time (t), and can be rewritten to reflect that:

$$\frac{dP(t)}{dt} = rP(t)$$

To solve this basic, first-order equation, we can use a technique called "separation of variables", where we move all instances of the letter P to one side, and all instances of t to the other:

$$\frac{dP(t)}{P(t)} = r\,dt$$

And integrating both sides gives us:

$$\ln|P(t)| = rt + C$$

This is all fine and good, but generally, we like to get rid of the logarithm, by raising e to the power of each side:

$$P(t) = e^{rt + C}$$

Where we can separate out the constant as such:

$$D = e^{C}$$

$$P(t) = De^{rt}$$

D is a constant that represents the initial conditions of the system, in this case the starting principal.
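The exponential solution can be checked numerically. The sketch below is only an illustration: the starting principal (1000), the interest rate (5% per year), and the 10-year horizon are made-up values, and forward Euler is used simply because it is the most direct way to step the equation dP/dt = rP forward in time.

```python
import math


def euler_balance(principal, rate, years, steps):
    """Integrate dP/dt = rate * P with forward Euler from t = 0 to t = years."""
    dt = years / steps
    balance = principal
    for _ in range(steps):
        balance += dt * rate * balance
    return balance


def closed_form_balance(principal, rate, years):
    """Closed-form solution P(t) = D * exp(r * t), with D the starting principal."""
    return principal * math.exp(rate * years)


# Illustrative values only (not taken from the text).
D = 1000.0
r = 0.05
T = 10.0

numeric = euler_balance(D, r, T, steps=100_000)
exact = closed_form_balance(D, r, T)
print(f"Euler: {numeric:.4f}  closed form: {exact:.4f}")
```

With a small enough step the two values agree closely, which is a quick sanity check that the separated-and-integrated solution really does satisfy the original equation.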

Differential equations are particularly difficult to manipulate, especially once we get to higher-order equations. Luckily, several methods of abstraction have been created that allow us to work with ODEs without having to worry about their complexities. The classical method, as described above, uses the Laplace, Fourier, and Z Transforms to convert ODEs in the time domain into polynomials in a complex domain. These complex polynomials are significantly easier to solve than their ODE counterparts. The Modern method instead breaks differential equations into systems of low-order equations, and expresses this system in terms of matrices. It is a common precept in ODE theory that an ODE of order N can be broken down into N equations of order 1.
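To make the last point concrete, here is a minimal sketch that rewrites one second-order ODE as two first-order equations and integrates them. The particular equation, y'' + 3y' + 2y = u, and the state names x1 = y and x2 = y' are assumptions chosen for illustration; the closed-form step response used for comparison follows from partial fractions of 1/(s(s+1)(s+2)).

```python
import math


def state_derivative(x, u):
    """First-order form of y'' + 3y' + 2y = u with x1 = y, x2 = y'."""
    x1, x2 = x
    return (x2, -2.0 * x1 - 3.0 * x2 + u)


def rk4_step(x, u, dt):
    """Advance the two-element state by one classical Runge-Kutta step."""
    def shifted(base, slope, scale):
        return (base[0] + scale * slope[0], base[1] + scale * slope[1])

    k1 = state_derivative(x, u)
    k2 = state_derivative(shifted(x, k1, dt / 2), u)
    k3 = state_derivative(shifted(x, k2, dt / 2), u)
    k4 = state_derivative(shifted(x, k3, dt), u)
    return (
        x[0] + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
        x[1] + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]),
    )


def simulate_step(t_end, dt=0.001):
    """Return y(t_end) for a unit-step input and zero initial conditions."""
    x = (0.0, 0.0)
    for _ in range(round(t_end / dt)):
        x = rk4_step(x, 1.0, dt)
    return x[0]


def analytic_step(t):
    """Closed-form unit-step response of the same equation."""
    return 0.5 - math.exp(-t) + 0.5 * math.exp(-2.0 * t)


y_num = simulate_step(2.0)
y_ref = analytic_step(2.0)
print(f"numeric: {y_num:.6f}  analytic: {y_ref:.6f}")
```

The same pattern extends to an ODE of order N: each derivative up to order N-1 becomes one state, and the highest derivative is expressed in terms of the others.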

Readers who are unfamiliar with differential equations might be able to read and understand the material in this book reasonably well. However, all readers are encouraged to read the related sections in Calculus.

History

The field of control systems started essentially in the ancient world. Early civilizations, notably the Greeks and the Arabs, were heavily preoccupied with the accurate measurement of time, which resulted in the design and implementation of several "water clocks".

However, there was very little in the way of actual progress made in the field of engineering until the beginning of the renaissance in Europe. Leonhard Euler (for whom Euler's Formula is named) discovered a powerful integral transform, but Pierre-Simon Laplace used the transform (later called the Laplace Transform) to solve complex problems in probability theory.

Joseph Fourier was a court mathematician in France under Napoleon I. He created a special function decomposition called the Fourier Series, that was later generalized into an integral transform, and named in his honor (the Fourier Transform).

| Pierre-Simon Laplace 1749-1827 | Joseph Fourier 1768-1830 |

The "golden age" of control engineering occurred between 1910 and 1945, when mass communication methods were being created and two world wars were being fought. During this period, some of the most famous names in controls engineering were doing their work: Nyquist and Bode.

Hendrik Wade Bode and Harry Nyquist, especially in the 1930s while working with Bell Laboratories, created the bulk of what we now call "Classical Control Methods". These methods were based on the results of the Laplace and Fourier Transforms, which had been previously known, but were made popular by Oliver Heaviside around the turn of the century. Before Heaviside, the transforms were neither widely used nor respected as mathematical tools.

Bode is credited with the "discovery" of the closed-loop feedback system, and the logarithmic plotting technique that still bears his name (Bode plots). Harry Nyquist did extensive research in the field of system stability and information theory. He created a powerful stability criterion that has been named for him (the Nyquist Criterion).

Modern control methods were introduced in the early 1950's, as a way to bypass some of the shortcomings of the classical methods. Rudolf Kalman is famous for his work in modern control theory, and a state estimator called the Kalman Filter was named in his honor. Modern control methods became increasingly popular after 1957 with the spread of digital computers and the start of the space program. Computers created the need for digital control methodologies, and the space program required the creation of some "advanced" control techniques, such as "optimal control", "robust control", and "nonlinear control". These last subjects, and several more, are still active areas of study among research engineers.

Branches of Control Engineering

Here we are going to give a brief listing of the various different methodologies within the sphere of control engineering. Oftentimes, the lines between these methodologies are blurred, or even erased completely.

- Classical Controls

- Control methodologies where the ODEs that describe a system are transformed using the Laplace, Fourier, or Z Transforms, and manipulated in the transform domain.

- Modern Controls

- Methods where high-order differential equations are broken into a system of first-order equations. The input, output, and internal states of the system are described by vectors called "state variables".

- Robust Control

- Control methodologies where arbitrary outside noise/disturbances are accounted for, as well as internal inaccuracies caused by the heat of the system itself, and the environment.

- Optimal Control

- In a system, performance metrics are identified, and arranged into a "cost function". The cost function is minimized to create an operational system with the lowest cost.

- Adaptive Control

- In adaptive control, the control changes its response characteristics over time to better control the system.

- Nonlinear Control

- The youngest branch of control engineering, nonlinear control encompasses systems that cannot be described by linear equations or ODEs, and for which there is often very little supporting theory available.

- Game Theory

- Game Theory is a close relative of control theory, and especially robust control and optimal control theories. In game theory, the external disturbances are not considered to be random noise processes, but instead are considered to be "opponents". Each player has a cost function that they attempt to minimize, and that their opponents attempt to maximize.

This book will definitely cover the first two branches, and will hopefully be expanded to cover some of the later branches, if time allows.

MATLAB


MATLAB ® is a programming tool that is commonly used in the field of control engineering. We will discuss MATLAB in specific sections of this book devoted to that purpose. MATLAB will not appear in discussions outside these specific sections, although MATLAB may be used in some example problems. An overview of the use of MATLAB in control engineering can be found in the appendix at: Control Systems/MATLAB.

For more information on MATLAB in general, see: MATLAB Programming.

Resources

Nearly all textbooks on the subject of control systems, linear systems, and system analysis will use MATLAB as an integral part of the text. Students who are learning this subject at an accredited university will certainly have seen this material in their textbooks, and are likely to have had MATLAB work as part of their classes. It is from this perspective that the MATLAB appendix is written.

In the future, this book may be expanded to include information on Simulink ®, as well as MATLAB.

There are a number of other software tools that are useful in the analysis and design of control systems. Additional information can be added in the appendix of this book, depending on the experience and prior knowledge of contributors.

About Formatting

This book will use some simple conventions throughout.

Mathematical Conventions

Mathematical equations will be labeled with the {{eqn}} template, to give them names. Equations that are labeled in such a manner are important, and should be taken special note of. For instance, notice the label to the right of this equation:

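$$f(t) = \mathcal{L}^{-1}\left\{F(s)\right\} = \frac{1}{2\pi j}\int_{c-j\infty}^{c+j\infty} e^{st}F(s)\,ds$$ [Inverse Laplace Transform]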

Equations that are named in this manner will also be copied into the List of Equations Glossary in the end of the book, for an easy reference.

Italics will be used for English variables, functions, and equations that appear in the main text. For example e, j, f(t) and X(s) are all italicized. Wikibooks contains a LaTeX mathematics formatting engine, although an attempt will be made not to employ formatted mathematical equations inline with other text because of the difference in size and font. Greek letters, and other non-English characters will not be italicized in the text unless they appear in the midst of multiple variables which are italicized (as a convenience to the editor).

Scalar time-domain functions and variables will be denoted with lower-case letters, along with a t in parenthesis, such as: x(t), y(t), and h(t). Discrete-time functions will be written in a similar manner, except with an [n] instead of a (t).

Fourier, Laplace, Z, and Star transformed functions will be denoted with capital letters followed by the appropriate variable in parenthesis. For example: F(s), X(jω), Y(z), and F*(s).

Matrices will be denoted with capital letters. Matrices which are functions of time will be denoted with a capital letter followed by a t in parenthesis. For example: A(t) is a matrix, a(t) is a scalar function of time.

Transforms of time-variant matrices will be displayed in uppercase bold letters, such as H(s).

Math equations rendered using LaTeX will appear on separate lines, and will be indented from the rest of the text.

Text Conventions

Examples will appear in TextBox templates, which show up as large grey boxes filled with text and equations.

- Important Definitions

- Will appear in TextBox templates as well, except we will use this formatting to show that it is a definition.

Notes of interest will appear in "infobox" templates. These notes will often be used to explain some nuances of a mathematical derivation or proof.

Warnings will appear in these "warning" boxes. These boxes will point out common mistakes, or other items to be careful of.

System Identification


Digital and Analog


System Metrics


When a system is being designed and analyzed, it doesn't make any sense to test the system with all manner of strange input functions, or to measure all sorts of arbitrary performance metrics. Instead, it is in everybody's best interest to test the system with a set of standard, simple reference functions. Once the system is tested with the reference functions, there are a number of different metrics that we can use to determine the system performance.

It is worth noting that the metrics presented in this chapter represent only a small number of possible metrics that can be used to evaluate a given system. This wikibook will present other useful metrics along the way, as their need becomes apparent.

Standard Inputs

All of the standard inputs are zero before time zero. All the standard inputs are causal.

There are a number of standard inputs that are considered simple enough and universal enough that they are considered when designing a system. These inputs are known as a unit step, a ramp, and a parabolic input.

- Unit Step

- A unit step function is defined piecewise as such:

$$u(t) = \begin{cases} 0, & t < 0 \\ 1, & t \geq 0 \end{cases}$$ [Unit Step Function]

- The unit step function is a highly important function, not only in control systems engineering, but also in signal processing, systems analysis, and all branches of engineering. If the unit step function is input to a system, the output of the system is known as the step response. The step response of a system is an important tool, and we will study step responses in detail in later chapters.

- Ramp

- A unit ramp is defined in terms of the unit step function, as such:

$$r(t) = t\,u(t)$$ [Unit Ramp Function]

- It is important to note that the unit step function is simply the derivative of the unit ramp function:

$$r(t) = \int u(t)\,dt = t\,u(t)$$

- This definition will come in handy when we learn about the Laplace Transform.

- Parabolic

- A unit parabolic input is similar to a ramp input:

$$p(t) = \frac{1}{2}t^2 u(t)$$ [Unit Parabolic Function]

- Notice also that the unit parabolic input is equal to the integral of the ramp function:

$$p(t) = \int r(t)\,dt = \int t\,u(t)\,dt = \frac{1}{2}t^2 u(t)$$

- Again, this result will become important when we learn about the Laplace Transform.

Also, sinusoidal and exponential functions are considered basic, but they are too difficult to use in initial analysis of a system.
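The three standard inputs and the integral relationships between them can be sketched in a few lines. This is an illustrative sketch only: the function names are made up for this example, the step is taken to equal 1 at exactly t = 0 (one common convention, assumed here), and the integrals are approximated with the trapezoidal rule.

```python
def unit_step(t):
    """Unit step: 0 before time zero, 1 from time zero onward (assumed convention)."""
    return 1.0 if t >= 0 else 0.0


def unit_ramp(t):
    """Unit ramp: t * u(t)."""
    return t * unit_step(t)


def unit_parabola(t):
    """Unit parabolic input: (1/2) * t^2 * u(t)."""
    return 0.5 * t * t * unit_step(t)


def integrate(f, t_end, steps=10_000):
    """Trapezoidal approximation of the integral of f from 0 to t_end."""
    dt = t_end / steps
    total = 0.5 * (f(0.0) + f(t_end))
    for i in range(1, steps):
        total += f(i * dt)
    return total * dt


# Integrating the step gives the ramp; integrating the ramp gives the parabola.
ramp_from_step = integrate(unit_step, 3.0)
parabola_from_ramp = integrate(unit_ramp, 3.0)
print(ramp_from_step, unit_ramp(3.0))
print(parabola_from_ramp, unit_parabola(3.0))
```

Each input is the running integral of the one before it, which is why their Laplace Transforms differ only by successive factors of 1/s.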

Steady State

To be more precise, we should have taken the limit as t approaches infinity. However, as a shorthand notation, we will typically say "t equals infinity", and assume the reader understands the shortcut that is being used.

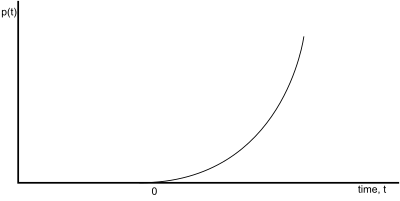

When a unit-step function is input to a system, the steady-state value of that system is the output value at time $t = \infty$. Since it is impractical (if not completely impossible) to wait until infinity to observe the system, approximations and mathematical calculations are used to determine the steady-state value of the system. Most system responses are asymptotic; that is, the response approaches a particular value. Systems that are asymptotic are typically obvious from viewing the graph of that response.

Step Response

The step response of a system is most frequently used to analyze systems, and there is a large amount of terminology involved with step responses. When exposed to the step input, the system will initially have an undesirable output period known as the transient response. The transient response occurs because a system is approaching its final output value. The steady-state response of the system is the response after the transient response has ended.

The amount of time it takes for the system output to reach the desired value (before the transient response has ended, typically) is known as the rise time. The amount of time it takes for the transient response to end and the steady-state response to begin is known as the settling time.

It is common for a systems engineer to try and improve the step response of a system. In general, it is desired for the transient response to be reduced, the rise and settling times to be shorter, and the steady-state to approach a particular desired "reference" output.


Target Value

The target output value is the value that our system attempts to obtain for a given input. This is not the same as the steady-state value, which is the actual value that the system does obtain. The target value is frequently referred to as the reference value, or the "reference function" of the system. In essence, this is the value that we want the system to produce. When we input a "5" into an elevator, we want the output (the final position of the elevator) to be the fifth floor. Pressing the "5" button is the reference input, and is the expected value that we want to obtain. If we press the "5" button, and the elevator goes to the third floor, then our elevator is poorly designed.

Rise Time

Rise time is the amount of time that it takes for the system response to reach the target value from an initial state of zero. Many texts on the subject instead define the rise time as the time it takes to rise between two fixed fractions of the target value (10% to 90% is a common choice). This is because some systems never rise to 100% of the expected, target value, and therefore they would have an infinite rise-time. This book will specify which convention to use for each individual problem. Rise time is typically denoted $t_r$, or $t_{rise}$.

Rise time is not the amount of time it takes to achieve steady-state, only the amount of time it takes to reach the desired target value for the first time.

Percent Overshoot

Underdamped systems frequently overshoot their target value initially. This initial surge is known as the "overshoot value". The ratio of the amount of overshoot to the target steady-state value of the system is known as the percent overshoot. Percent overshoot represents an overcompensation of the system, and can produce dangerously large output signals that can damage a system. Percent overshoot is typically denoted with the term PO.
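Written as a formula, the ratio described above might look as follows. The symbols $y_{max}$ (the peak output) and $y_{target}$ (the target steady-state value) are names assumed here for illustration:

```latex
\begin{align*}
PO &= \frac{y_{max} - y_{target}}{y_{target}} \times 100\% \\
\text{e.g.}\quad y_{max} = 1.25,\; y_{target} = 1.00
  \;&\Rightarrow\; PO = \frac{1.25 - 1.00}{1.00} \times 100\% = 25\%
\end{align*}
```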

Example: Refrigerator

Consider an ordinary household refrigerator. The refrigerator has cycles where it is on and when it is off. When the refrigerator is on, the coolant pump is running, and the temperature inside the refrigerator decreases. The temperature decreases to a much lower level than is required, and then the pump turns off.

When the pump is off, the temperature slowly increases again as heat is absorbed into the refrigerator. When the temperature gets high enough, the pump turns back on. Because the pump cools down the refrigerator more than it needs to initially, we can say that it "overshoots" the target value by a certain specified amount.

Example: Refrigerator

Another refrigerator example concerns the electrical demand of the pump when it first turns on. The pump is driven by an electric motor. A spinning motor generates a counter-acting voltage known as "back EMF" that opposes the supply and limits the current. When the motor first activates it is not yet turning, so there is little back EMF, and the pump draws more current than it does once the motor reaches its final speed. During this startup time, lights on the same electrical circuit as the refrigerator may dim slightly, as the large current drawn by the pump lowers the voltage available to the lamps. This initial surge of current is a good example of overshoot.

Steady-State Error

Sometimes a system might never achieve the desired steady-state value, but instead will settle on an output value that is not desired. The difference between the reference input value and the steady-state output value is called the steady-state error of the system. We will use the variable $e_{ss}$ to denote the steady-state error of the system.

Settling Time

After the initial rise time of the system, some systems will oscillate and vibrate for an amount of time before the system output settles on the final value. The amount of time it takes to reach steady state after the initial rise time is known as the settling time. Notice that damped oscillating systems may never settle completely, so we will define settling time as being the amount of time for the system to reach, and stay in, a certain acceptable range around the target value. The acceptable range is typically determined on a per-problem basis; values of 5% or 2% of the target value are the most common, and looser ranges such as 10% or 20% are also used. The settling time will be denoted as $t_s$.
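The metrics defined so far can be read off a sampled step response. The following is a minimal sketch, not a definitive implementation: it assumes a standard underdamped second-order system with damping ratio `zeta` and natural frequency `wn` (both chosen here purely for illustration), a 5% settling band, and the "first time the target is reached" convention for rise time. The function names are hypothetical.

```python
import math

def second_order_step(t, zeta=0.5, wn=2.0):
    """Unit-step response of wn^2 / (s^2 + 2*zeta*wn*s + wn^2), for 0 < zeta < 1."""
    wd = wn * math.sqrt(1.0 - zeta**2)      # damped natural frequency
    phi = math.acos(zeta)
    return 1.0 - math.exp(-zeta * wn * t) * math.sin(wd * t + phi) / math.sqrt(1.0 - zeta**2)

def step_metrics(ts, ys, target=1.0, band=0.05):
    """Return (rise_time, percent_overshoot, settling_time, steady_state_error)."""
    # Rise time: first sample at which the response reaches the target value.
    rise_time = next(t for t, y in zip(ts, ys) if y >= target)
    # Percent overshoot: peak excursion above the target, relative to the target.
    percent_overshoot = 100.0 * max(0.0, max(ys) - target) / target
    # Settling time: the last sample at which the response is still outside the band.
    settling_time = ts[0]
    for t, y in zip(ts, ys):
        if abs(y - target) > band * target:
            settling_time = t
    # Steady-state error: reference value minus the final simulated output.
    steady_state_error = target - ys[-1]
    return rise_time, percent_overshoot, settling_time, steady_state_error

dt = 0.001
ts = [k * dt for k in range(20001)]         # 0 to 20 seconds
ys = [second_order_step(t) for t in ts]
tr, po, tset, ess = step_metrics(ts, ys)
print(f"t_r = {tr:.3f} s, PO = {po:.1f} %, t_s = {tset:.3f} s, e_ss = {ess:.2e}")
```

For this assumed system the overshoot should agree with the textbook expression $100\,e^{-\pi\zeta/\sqrt{1-\zeta^2}}$, which is a convenient sanity check on the sampling step.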

System Order

The order of the system is defined by the number of independent energy storage elements in the system, and intuitively by the highest order of the linear differential equation that describes the system. In a transfer function representation, the order is the highest exponent in the transfer function. In a proper system, the system order is defined as the degree of the denominator polynomial. In a state-space equation, the system order is the number of state-variables used in the system. The order of a system will frequently be denoted with an n or N, although these variables are also used for other purposes. This book will make clear distinction on the use of these variables.

Proper Systems

A proper system is a system where the degree of the denominator is larger than or equal to the degree of the numerator polynomial. A strictly proper system is a system where the degree of the denominator polynomial is larger than (but never equal to) the degree of the numerator polynomial. A biproper system is a system where the degree of the denominator polynomial equals the degree of the numerator polynomial.

It is important to note that only proper systems can be physically realized. In other words, a system that is not proper cannot be built. It makes no sense to spend a lot of time designing and analyzing imaginary systems.

Example: System Order

Find the order of this system:

The highest exponent in the denominator is $s^2$, so the system is order 2. Also, since the denominator is a higher degree than the numerator, this system is strictly proper.

In the above example, G(s) is a second-order transfer function because in the denominator one of the s variables has an exponent of 2. Second-order functions are the easiest to work with.
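The order and properness rules above reduce to comparing polynomial degrees. The sketch below assumes a transfer function given as two coefficient lists in descending powers of s; the helper names and the example function $(s+1)/(s^2+s+1)$ are illustrative assumptions, not taken from the text.

```python
def degree(coeffs):
    """Degree of a polynomial given as coefficients in descending powers of s."""
    stripped = list(coeffs)
    while len(stripped) > 1 and stripped[0] == 0:
        stripped.pop(0)                     # drop leading zero coefficients
    return len(stripped) - 1

def classify(num, den):
    """Return (denominator degree, properness) for the transfer function num(s)/den(s)."""
    n, d = degree(num), degree(den)
    if d > n:
        kind = "strictly proper"
    elif d == n:
        kind = "biproper"
    else:
        kind = "improper"
    return d, kind

# Hypothetical example: G(s) = (s + 1) / (s^2 + s + 1)
print(classify([1, 1], [1, 1, 1]))          # (2, 'strictly proper')
```

For a proper system the first returned value is the system order; for an improper one it is only the denominator degree.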

System Type

Let's say that we have a process transfer function (or combination of functions, such as a controller feeding in to a process), all in the forward branch of a unity feedback loop. Say that the overall forward branch transfer function is in the following generalized form (known as pole-zero form):

[Pole-Zero Form]

$$G(s) = \frac{K \prod_i (s - s_i)}{s^M \prod_j (s - s_j)}$$

We call the parameter M the system type. Note that an increased system type number corresponds to a larger number of poles at s = 0. More poles at the origin generally have a beneficial effect on the steady-state error of the system, but they increase the order of the system, and make it increasingly difficult to implement physically. System type will generally be denoted with a letter like N, M, or m. Because these variables are typically reused for other purposes, this book will make a clear distinction when they are employed.

Now, we will define a few terms that are commonly used when discussing system type. These new terms are Position Error, Velocity Error, and Acceleration Error. These names are throwbacks to physics terms where acceleration is the derivative of velocity, and velocity is the derivative of position. Note that none of these terms are meant to deal with movement, however.

- Position Error

- The position error is the amount of steady-state error of the system when stimulated by a unit step input. It is characterized by the position error constant $K_p$, which we define as follows:

[Position Error Constant]

$$K_p = \lim_{s \to 0} G(s)$$

- Where G(s) is the transfer function of our system.

- Velocity Error

- The velocity error is the amount of steady-state error when the system is stimulated with a ramp input. We define the velocity error constant as such:

[Velocity Error Constant]

$$K_v = \lim_{s \to 0} s\,G(s)$$

- Acceleration Error

- The acceleration error is the amount of steady-state error when the system is stimulated with a parabolic input. We define the acceleration error constant to be:

[Acceleration Error Constant]

$$K_a = \lim_{s \to 0} s^2 G(s)$$

Now, this table will show briefly the relationship between the system type, the kind of input (step, ramp, parabolic), and the steady-state error of the system:

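| Type, M | $Au(t)$ (step) | $Ar(t)$ (ramp) | $Ap(t)$ (parabola) |
|---|---|---|---|
| 0 | $\frac{A}{1 + K_p}$ | $\infty$ | $\infty$ |
| 1 | 0 | $\frac{A}{K_v}$ | $\infty$ |
| 2 | 0 | 0 | $\frac{A}{K_a}$ |
| > 2 | 0 | 0 | 0 |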
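As a rough illustration of how system type determines steady-state error, the sketch below computes the error constants as the limits of $G(s)$, $sG(s)$ and $s^2G(s)$ as s approaches 0, and then applies the standard unity-feedback results $A/(1+K_p)$, $A/K_v$ and $A/K_a$ for step, ramp and parabolic inputs. It assumes the forward-path transfer function is given as coefficient lists in descending powers of s with no zeros at the origin; the function names and the example plant are hypothetical.

```python
import math

def system_type(den):
    """Number of poles at s = 0: the count of trailing zero coefficients in den(s)."""
    m = 0
    for c in reversed(den):
        if c != 0:
            break
        m += 1
    return m

def error_constant(num, den, k):
    """Limit of s^k * num(s)/den(s) as s -> 0; k = 0, 1, 2 gives K_p, K_v, K_a."""
    m = system_type(den)
    if k < m:
        return math.inf                 # uncancelled poles at the origin remain
    if k > m:
        return 0.0                      # leftover factors of s drive the limit to zero
    return num[-1] / den[-1 - m]        # num(0) divided by den(s)/s^m evaluated at s = 0

def steady_state_errors(num, den, amplitude=1.0):
    """Steady-state error for step, ramp and parabolic inputs of the given amplitude."""
    kp, kv, ka = (error_constant(num, den, k) for k in range(3))
    e_step = amplitude / (1.0 + kp)
    e_ramp = amplitude / kv if kv != 0 else math.inf
    e_para = amplitude / ka if ka != 0 else math.inf
    return e_step, e_ramp, e_para

# Hypothetical type-1 forward path: G(s) = 10 / (s (s + 2)) = 10 / (s^2 + 2s)
print(steady_state_errors([10], [1, 2, 0]))
```

A type-1 system therefore tracks a step with no error, a ramp with a finite error, and cannot track a parabola.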

Z-Domain Type

Likewise, we can show that the system type can be found from the following generalized transfer function in the Z domain:

$$G(z) = \frac{K \prod_i (z - z_i)}{(z - 1)^M \prod_j (z - z_j)}$$

Where the constant M is the type of the digital system. Now, we will show how to find the various error constants in the Z-Domain:

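[Z-Domain Error Constants]

| Error Constant | Equation |
|---|---|
| $K_p$ | $K_p = \lim_{z \to 1} G(z)$ |
| $K_v$ | $K_v = \lim_{z \to 1} (1 - z^{-1})\,G(z)$ |
| $K_a$ | $K_a = \lim_{z \to 1} (1 - z^{-1})^2 G(z)$ |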

Visually

Here is an image of the various system metrics, acting on a system in response to a step input:

The target value is the value of the input step response. The rise time is the time at which the waveform first reaches the target value. The overshoot is the amount by which the waveform exceeds the target value. The settling time is the time it takes for the system to settle into a particular bounded region. This bounded region is denoted with two short dotted lines above and below the target value.

System Modeling

The Control Process

It is the job of a control engineer to analyze existing systems, and to design new systems to meet specific needs. Sometimes new systems need to be designed, but more frequently a controller unit needs to be designed to improve the performance of existing systems. When designing a system, or implementing a controller to augment an existing system, we need to follow some basic steps:

1. Model the system mathematically

2. Analyze the mathematical model

3. Design system/controller

4. Implement system/controller and test

The vast majority of this book is going to be focused on (2), the analysis of the mathematical systems. This chapter alone will be devoted to a discussion of the mathematical modeling of the systems.

External Description

An external description of a system relates the system input to the system output without explicitly taking into account the internal workings of the system. The external description of a system is sometimes also referred to as the Input-Output Description of the system, because it only deals with the inputs and the outputs to the system.

Suppose the system can be represented by a mathematical function h(t, r), where t is the time that the output is observed, and r is the time that the input is applied. We can then relate the system function h(t, r) to the input x and the output y through the use of an integral:

[General System Description]

$$y(t) = \int_{-\infty}^{\infty} h(t, r)\,x(r)\,dr$$

This integral form holds for all linear systems, and every linear system can be described by such an equation.

If a system is causal (i.e. an input at t = r affects system behaviour only for $t \ge r$) and there is no input to the system before t = 0, we can change the limits of the integration:

$$y(t) = \int_0^t h(t, r)\,x(r)\,dr$$

Time-Invariant Systems

If furthermore a system is time-invariant, we can rewrite the system description equation as follows:

$$y(t) = \int_0^t x(r)\,h(t - r)\,dr$$

This equation is known as the convolution integral, and we will discuss it more in the next chapter.

Every Linear Time-Invariant (LTI) system can be used with the Laplace Transform, a powerful tool that allows us to convert an equation from the time domain into the S-Domain, where many calculations are easier. Time-variant systems cannot be used with the Laplace Transform.

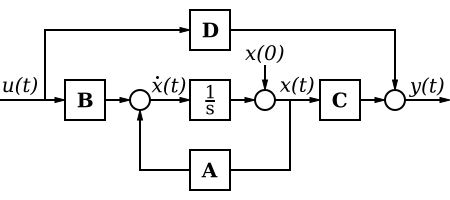

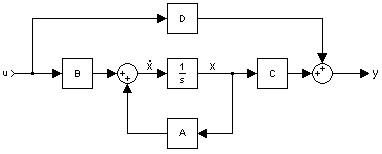

Internal Description

If a system is linear and lumped, it can also be described using a system of equations known as state-space equations. In state-space equations, we use the variable x to represent the internal state of the system. We then use u as the system input, and we continue to use y as the system output. We can write the state-space equations as such:

$$x'(t) = A(t)\,x(t) + B(t)\,u(t)$$

$$y(t) = C(t)\,x(t) + D(t)\,u(t)$$

Here A, B, C and D are the coefficient matrices of the system.

We will discuss the state-space equations more when we get to the section on modern controls.

Complex Descriptions

Systems which are LTI and Lumped can also be described using a combination of the state-space equations, and the Laplace Transform. If we take the Laplace Transform of the state equations that we listed above, we can get a set of functions known as the Transfer Matrix Functions. We will discuss these functions in a later chapter.

Representations

To recap, we will prepare a table with the various system properties, and the available methods for describing the system:

| Properties | State-Space Equations | Laplace Transform | Transfer Matrix |
|---|---|---|---|
| Linear, Time-Variant, Distributed | no | no | no |
| Linear, Time-Variant, Lumped | yes | no | no |
| Linear, Time-Invariant, Distributed | no | yes | no |
| Linear, Time-Invariant, Lumped | yes | yes | yes |

We will discuss all these different types of system representation later in the book.

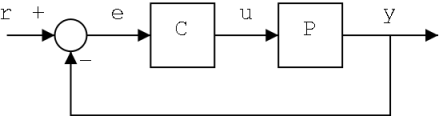

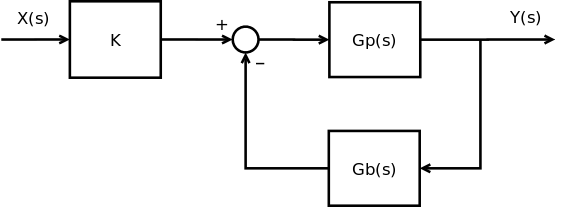

Analysis

Once a system is modeled using one of the representations listed above, the system needs to be analyzed. We can determine the system metrics and then we can compare those metrics to our specification. If our system meets the specifications we are finished with the design process. However if the system does not meet the specifications (as is typically the case), then suitable controllers and compensators need to be designed and added to the system.

Once the controllers and compensators have been designed, the job isn't finished: we need to analyze the new composite system to ensure that the controllers work properly. Also, we need to ensure that the systems are stable: unstable systems can be dangerous.

Frequency Domain

For proposals, early-stage designs, and quick-turnaround analyses, a frequency domain model is often superior to a time domain model. Frequency domain models take disturbance PSDs (Power Spectral Densities) directly, use transfer functions directly, and produce output or residual PSDs directly. The answer is a steady-state response. Oftentimes the controller is trying to drive the output to 0, so the steady-state response is also the residual error that will be the analysis output or the metric for the report.

| Input | Model | Output |
|---|---|---|
| PSD | Transfer Function | PSD |

Brief Overview of the Math

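Frequency domain modeling is a matter of determining the response of a system to a random process:

$$S_{YY}(\omega) = G^*(\omega)\,G(\omega)\,S_{XX}(\omega) = |G(\omega)|^2 S_{XX}(\omega)$$

where

- $S_{XX}(\omega)$ is the one-sided input PSD,

- $G(\omega)$ is the frequency response function of the system and

- $S_{YY}(\omega)$ is the one-sided output PSD or auto power spectral density function.

The frequency response function, $G(\omega)$, is related to the impulse response function $g(\tau)$ (the time-domain counterpart of the transfer function) by

$$G(\omega) = \int_{-\infty}^{\infty} g(\tau)\,e^{-j\omega\tau}\,d\tau$$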

Note some texts will state that this is only valid for random processes which are stationary. Other texts suggest stationary and ergodic while still others state weakly stationary processes. Some texts do not distinguish between strictly stationary and weakly stationary. From practice, the rule of thumb is if the PSD of the input process is the same from hour to hour and day to day then the input PSD can be used and the above equation is valid.
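To make the input-model-output picture concrete, the sketch below applies the standard relation that the output PSD equals the input PSD multiplied by the squared magnitude of the frequency response. The first-order lag, its time constant `tau`, the flat input PSD value, and the function names are all assumptions chosen for illustration.

```python
def lowpass_response(omega, tau=0.1):
    """Frequency response 1 / (1 + j*omega*tau) of a hypothetical first-order lag."""
    return 1.0 / complex(1.0, omega * tau)

def output_psd(input_psd, omega, tau=0.1):
    """Output PSD: squared magnitude of the frequency response times the input PSD."""
    return abs(lowpass_response(omega, tau)) ** 2 * input_psd

# Flat (white) input PSD of 2.0, evaluated at a few frequencies in rad/s.
for omega in (0.0, 10.0, 100.0):
    print(omega, output_psd(2.0, omega))
```

At the corner frequency $\omega = 1/\tau$ the output PSD is half the input PSD, which is the usual half-power point of a first-order filter.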

Notes

- Sun, Jian-Qiao (2006). Stochastic Dynamics and Control, Volume 4. Amsterdam: Elsevier Science. ISBN 0444522301.

See a full explanation with example at ControlTheoryPro.com

Modeling Examples

Modeling in Control Systems is oftentimes a matter of judgement. This judgement is developed by creating models and learning from other people's models. ControlTheoryPro.com is a site with a lot of examples. Here are links to a few of them

- Hovering Helicopter Example

- Reaction Torque Cancellation Example

- List of all examples at ControlTheoryPro.com

Manufacture

Once the system has been properly designed we can prototype our system and test it. Assuming our analysis was correct and our design is good, the prototype should work as expected. Now we can move on to manufacture and distribute our completed systems.

Classical Controls

The classical method of controls involves analysis and manipulation of systems in the complex frequency domain. This domain, entered into by applying the Laplace or Fourier Transforms, is useful in examining the characteristics of the system, and determining the system response.

Transforms

There are a number of transforms that we will be discussing throughout this book, and the reader is assumed to have at least a small prior knowledge of them. It is not the intention of this book to teach the topic of transforms to an audience that has had no previous exposure to them. However, we will include a brief refresher here for readers who do not remember the topic perfectly. If you do not yet know what the Laplace Transform or the Fourier Transform are, it is highly recommended that you use this page only as a simple guide, and look the information up in other sources. Specifically, Wikipedia has lots of information on these subjects.

Transform Basics

A transform is a mathematical tool that converts an equation from one variable (or one set of variables) into a new variable (or a new set of variables). To do this, the transform must remove all instances of the first variable, the "Domain Variable", and add a new "Range Variable". Integrals are excellent choices for transforms, because the limits of the definite integral will be substituted into the domain variable, and all instances of that variable will be removed from the equation. An integral transform that converts from a domain variable a to a range variable b will typically be formatted as such:

$$\mathcal{T}[f(a)] = F(b) = \int_C f(a)\,g(a, b)\,da$$

Where the function f(a) is the function being transformed, and g(a,b) is known as the kernel of the transform. Typically, the only difference between the various integral transforms is the kernel.

Laplace Transform

The Laplace Transform converts an equation from the time-domain into the so-called "S-domain", or the Laplace domain, or even the "Complex domain". These are all different names for the same mathematical space and they all may be used interchangeably in this book and in other texts on the subject. The Transform can only be applied under the following conditions:

- The system or signal in question is analog.

- The system or signal in question is Linear.

- The system or signal in question is Time-Invariant.

- The system or signal in question is causal.

The transform is defined as such:

[Laplace Transform]

$$F(s) = \mathcal{L}[f(t)] = \int_0^\infty f(t)\,e^{-st}\,dt$$

Laplace transform results have been tabulated extensively. More information on the Laplace transform, including a transform table can be found in the Appendix.
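A tabulated transform pair can be checked numerically by evaluating the defining integral $\int_0^\infty f(t)e^{-st}dt$ directly. The sketch below does this with the trapezoidal rule for real values of s only; the truncation time `t_max`, the step count, and the function name are assumptions, and the test signal $e^{-2t}$ is chosen because its transform $1/(s+2)$ is a standard table entry.

```python
import math

def laplace_numeric(f, s, t_max=50.0, steps=200_000):
    """Approximate the Laplace integral of f at real s with the trapezoidal rule."""
    dt = t_max / steps
    # End points carry half weight in the trapezoidal rule.
    total = 0.5 * (f(0.0) + f(t_max) * math.exp(-s * t_max))
    for k in range(1, steps):
        t = k * dt
        total += f(t) * math.exp(-s * t)
    return total * dt

# f(t) = e^{-2t} has the tabulated transform F(s) = 1 / (s + 2).
s = 3.0
approx = laplace_numeric(lambda t: math.exp(-2.0 * t), s)
print(approx, 1.0 / (s + 2.0))
```

The truncation at `t_max` is harmless here only because the integrand decays quickly; slowly decaying or growing signals would need a different treatment.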

If we have a linear differential equation in the time domain:

With zero initial conditions, we can take the Laplace transform of the equation as such:

And separating, we get:

Inverse Laplace Transform

The inverse Laplace Transform is defined as such:

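[Inverse Laplace Transform]

$$f(t) = \mathcal{L}^{-1}[F(s)] = \frac{1}{2\pi j} \int_{c - j\infty}^{c + j\infty} e^{st} F(s)\,ds$$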

The inverse transform converts a function from the Laplace domain back into the time domain.

Matrices and Vectors

The Laplace Transform can be used on systems of linear equations in an intuitive way. Let's say that we have a system of linear equations:

We can arrange these equations into matrix form, as shown:

And write this symbolically as:

We can take the Laplace transform of both sides:

Which is the same as taking the transform of each individual equation in the system of equations.

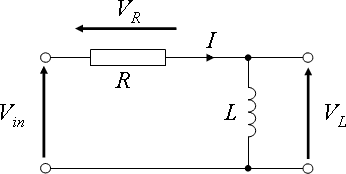

Example: RL Circuit

Circuit Theory

Here, we are going to show a common example of a first-order system, an RL Circuit. In an inductor, the relationship between the current, I, and the voltage, V, in the time domain is expressed as a derivative:

$$V(t) = L \frac{dI(t)}{dt}$$

Where L is a special quantity called the "Inductance" that is a property of inductors.

Let's say that we have a 1st order RL series electric circuit. The resistor has resistance R, the inductor has inductance L, and the voltage source has input voltage $V_{in}$. The system output of our circuit is the voltage over the inductor, $V_{out}$. In the time domain, we have the following first-order differential equations to describe the circuit:

$$V_{out}(t) = L \frac{dI(t)}{dt}$$

$$V_{in}(t) = R\,I(t) + L \frac{dI(t)}{dt}$$

However, since the circuit is essentially acting as a voltage divider, we can put the output in terms of the input. In the time domain this is awkward, because the current appears both directly and through its derivative, so we employ the Laplace transform, under which the inductor contributes the term Ls:

$$V_{out}(s) = \frac{Ls}{R + Ls} V_{in}(s)$$

We can divide top and bottom by L, and move $V_{in}$ to the other side:

$$\frac{V_{out}(s)}{V_{in}(s)} = \frac{s}{\frac{R}{L} + s}$$

And using a simple table look-up, we can solve this for the time-domain relationship between the circuit input and the circuit output. Writing the transfer function as $1 - \frac{R/L}{s + R/L}$, its inverse transform is

$$h(t) = \delta(t) - \frac{R}{L} e^{-\frac{R}{L} t} u(t)$$

and the output is the convolution of this function with the input $V_{in}(t)$.
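One way to check the S-domain result is to simulate the circuit in the time domain. For a voltage step of height $V_{in}$, the transfer function $s/(s + R/L)$ gives $V_{out}(s) = V_{in}/(s + R/L)$, whose table inverse is $V_{in}e^{-Rt/L}$. The sketch below compares that prediction with a forward-Euler integration of $L\,dI/dt = V_{in} - RI$; the component values, step size, and function name are assumptions for illustration.

```python
import math

def simulate_rl_step(R=10.0, L=0.5, v_in=5.0, t_end=0.05, dt=1e-5):
    """Forward-Euler simulation of a series RL circuit driven by a voltage step.

    State equation: L di/dt = v_in - R i. Output: inductor voltage v_in - R i.
    """
    i = 0.0                                  # inductor current starts at zero
    steps = round(t_end / dt)
    for _ in range(steps):
        i += dt * (v_in - R * i) / L
    return v_in - R * i                      # voltage across the inductor at t_end

R, L, v_in, t_end = 10.0, 0.5, 5.0, 0.05
simulated = simulate_rl_step(R, L, v_in, t_end)
predicted = v_in * math.exp(-R * t_end / L)  # from V_out(s) = V_in / (s + R/L)
print(simulated, predicted)
```

The two values agree to within the discretization error of the Euler method, which shrinks as `dt` is reduced.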

Partial Fraction Expansion

Calculus

Laplace transform pairs are extensively tabulated, but frequently we have transfer functions and other equations that do not have a tabulated inverse transform. If our equation is a fraction, we can often utilize Partial Fraction Expansion (PFE) to create a set of simpler terms that will have readily available inverse transforms. This section is going to give a brief reminder about PFE, for those who have already learned the topic. This refresher will be in the form of several examples of the process, as it relates to the Laplace Transform. People who are unfamiliar with PFE are encouraged to read more about it in Calculus.

Example: Second-Order System

If we have a given equation in the S-domain:

$$F(s) = \frac{2s + 1}{s^2 + 3s + 2}$$

We can expand it into several smaller fractions as such:

$$F(s) = \frac{2s + 1}{(s + 1)(s + 2)} = \frac{A}{s + 1} + \frac{B}{s + 2}$$

This looks impossible, because we have a single equation with 3 unknowns (s, A, B), but in reality s can take any arbitrary value, and we can "plug in" values for s to solve for A and B, without needing other equations. For instance, in the above equation, we can multiply through by the denominator, and cancel terms:

$$2s + 1 = A(s + 2) + B(s + 1)$$

Now, when we set s → -2, the A term disappears, and we are left with B → 3. When we set s → -1, we can solve for A → -1. Putting these values back into our original equation, we have:

$$F(s) = \frac{-1}{s + 1} + \frac{3}{s + 2}$$

Remember, since the Laplace transform is a linear operator, the following relationship holds true:

$$\mathcal{L}^{-1}[F(s)] = \mathcal{L}^{-1}\left[\frac{-1}{s + 1}\right] + \mathcal{L}^{-1}\left[\frac{3}{s + 2}\right]$$

Finding the inverse transform of these smaller terms should be an easier process than finding the inverse transform of the whole function. Partial fraction expansion is a useful, and oftentimes necessary, tool for finding the inverse of an S-domain equation.
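The "plug in a value of s" step is often called the cover-up method, and for distinct real poles it is easy to automate. The sketch below assumes a monic denominator given by its list of poles and a numerator given as coefficients in descending powers of s; the function names are hypothetical. The test function $(2s+1)/((s+1)(s+2))$ is used because it reproduces the values A = -1 and B = 3 found above.

```python
def poly_eval(coeffs, s):
    """Evaluate a polynomial (descending powers) at s using Horner's rule."""
    result = 0.0
    for c in coeffs:
        result = result * s + c
    return result

def residues_distinct_real(num, poles):
    """Residues of num(s) / prod(s - p) for distinct poles, by the cover-up method."""
    residues = []
    for p in poles:
        others = 1.0
        for q in poles:
            if q != p:
                others *= (p - q)        # denominator with the factor (s - p) covered up
        residues.append(poly_eval(num, p) / others)
    return residues

# F(s) = (2s + 1) / ((s + 1)(s + 2)), i.e. poles at s = -1 and s = -2
print(residues_distinct_real([2, 1], [-1.0, -2.0]))   # [-1.0, 3.0]
```

Repeated or complex poles need the coefficient-matching approach shown in the later examples; this shortcut does not cover them.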

Example: Fourth-Order System

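If we have a given equation in the S-domain:

$$F(s) = \frac{79s^2 + 916s + 1000}{s(s + 10)^3}$$

We can expand it into several smaller fractions as such:

$$F(s) = \frac{A}{s} + \frac{B}{(s + 10)^3} + \frac{C}{(s + 10)^2} + \frac{D}{s + 10}$$

Canceling terms wouldn't be enough here, so we multiply through by the denominator and open the brackets (separated onto multiple lines):

$$79s^2 + 916s + 1000 = A(s + 10)^3 + Bs + Cs(s + 10) + Ds(s + 10)^2$$

$$A(s + 10)^3 = As^3 + 30As^2 + 300As + 1000A$$

$$Cs(s + 10) = Cs^2 + 10Cs$$

$$Ds(s + 10)^2 = Ds^3 + 20Ds^2 + 100Ds$$

Let's compare coefficients: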

- A + D = 0

- 30A + C + 20D = 79

- 300A + B + 10C + 100D = 916

- 1000A = 1000

And solving gives us:

- A = 1

- B = 26

- C = 69

- D = -1

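We know from the Laplace Transform table that the following relation holds:

$$\frac{1}{(s + \alpha)^{n+1}} \rightarrow \frac{t^n}{n!} e^{-\alpha t} u(t)$$

We can plug in our values for A, B, C, and D into our expansion, and convert each term using the form above:

$$F(s) = \frac{1}{s} + \frac{26}{(s + 10)^3} + \frac{69}{(s + 10)^2} - \frac{1}{s + 10}$$

$$f(t) = \left(1 + 13t^2 e^{-10t} + 69t e^{-10t} - e^{-10t}\right) u(t)$$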
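A quick way to catch arithmetic slips in an expansion like this is to evaluate the original fraction and the expanded form at a few arbitrary values of s and confirm they match. The sketch below assumes the function being expanded is $(79s^2 + 916s + 1000)/(s(s+10)^3)$, which is what the coefficient equations above imply; the function names and test points are illustrative.

```python
def original(s):
    """F(s) = (79 s^2 + 916 s + 1000) / (s (s + 10)^3)."""
    return (79 * s**2 + 916 * s + 1000) / (s * (s + 10) ** 3)

def expanded(s, A=1, B=26, C=69, D=-1):
    """A/s + B/(s+10)^3 + C/(s+10)^2 + D/(s+10)."""
    return A / s + B / (s + 10) ** 3 + C / (s + 10) ** 2 + D / (s + 10)

# Any s away from the poles at 0 and -10 will do, including complex values.
test_points = (0.5, 1.0, 3.0, complex(2.0, 5.0))
differences = [abs(original(s) - expanded(s)) for s in test_points]
print(differences)
```

Agreement at more points than there are unknowns is strong evidence that A, B, C and D are correct.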

Example: Complex Roots

Given the following transfer function:

$$F(s) = \frac{7s + 26}{s^2 - 80s + 1681}$$

When the roots of the denominator are complex, the numerator of the partial fraction is written as a first-order polynomial As + B, as opposed to a single letter (e.g. D), which is used for real roots:

- As + B = 7s + 26

- A = 7

- B = 26

We will need to reform it into two fractions that look like this (without changing its value):

- $\frac{\omega}{(s + \alpha)^2 + \omega^2}$ → $e^{-\alpha t} \sin(\omega t)$

- $\frac{s + \alpha}{(s + \alpha)^2 + \omega^2}$ → $e^{-\alpha t} \cos(\omega t)$

Let's start with the denominator (for both fractions):

The roots of $s^2 - 80s + 1681$ are 40 + j9 and 40 - j9.

- $s^2 - 80s + 1681$ → $(s - 40)^2 + 9^2$

And now the numerators:

$$\frac{7s + 26}{(s - 40)^2 + 9^2} = 7\,\frac{s - 40}{(s - 40)^2 + 9^2} + 34\,\frac{9}{(s - 40)^2 + 9^2}$$

Inverse Laplace Transform:

$$f(t) = 7e^{40t} \cos(9t) + 34e^{40t} \sin(9t)$$

Example: Sixth-Order System

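Given the following transfer function:

$$F(s) = \frac{90s^2 - 1110}{s(s - 3)(s^2 - 12s + 37)} = \frac{A}{s} + \frac{B}{s - 3} + \frac{Cs + D}{s^2 - 12s + 37}$$

We multiply through by the denominators to make the equation rational:

$$90s^2 - 1110 = A(s - 3)(s^2 - 12s + 37) + Bs(s^2 - 12s + 37) + (Cs + D)s(s - 3)$$

And then we combine terms:

$$90s^2 - 1110 = (A + B + C)s^3 + (-15A - 12B - 3C + D)s^2 + (73A + 37B - 3D)s - 111A$$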

Comparing coefficients:

- A + B + C = 0

- -15A - 12B - 3C + D = 90

- 73A + 37B - 3D = 0

- -111A = -1110

Now, we can solve for A, B, C and D:

- A = 10

- B = -10

- C = 0

- D = 120

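And now for the "fitting":

The roots of $s^2 - 12s + 37$ are 6 + j and 6 - j, so the denominator becomes $(s - 6)^2 + 1^2$ and the expansion is:

$$F(s) = \frac{10}{s} - \frac{10}{s - 3} + 120\,\frac{1}{(s - 6)^2 + 1^2}$$

No need to fit the fraction of D, because it is already in the required form; no need to bother fitting the fraction of C, because C is equal to zero. The inverse transform is then:

$$f(t) = \left(10 - 10e^{3t} + 120e^{6t} \sin(t)\right) u(t)$$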

Final Value Theorem

The Final Value Theorem allows us to determine the value of the time domain equation, as the time approaches infinity, from the S domain equation. In Control Engineering, the Final Value Theorem is used most frequently to determine the steady-state value of a system. The theorem applies only when all poles of sX(s) have negative real parts.

[Final Value Theorem (Laplace)]

$$\lim_{t \to \infty} x(t) = \lim_{s \to 0} s\,X(s)$$

From our chapter on system metrics, you may recognize the value of the system at time infinity as the steady-state value of the system. The difference between the steady-state value and the expected output value we remember as being the steady-state error of the system. Using the Final Value Theorem, we can find the steady-state value and the steady-state error of the system in the Complex S domain.
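The theorem can be checked numerically on a transform pair whose time-domain form is known. The sketch below uses a hypothetical signal, $X(s) = 1/(s(s+1))$ with inverse $x(t) = 1 - e^{-t}$, and compares x(t) at a large time with sX(s) at a small s; the evaluation points are arbitrary choices.

```python
import math

def X(s):
    """Hypothetical transform X(s) = 1 / (s (s + 1)); its inverse is x(t) = 1 - e^{-t}."""
    return 1.0 / (s * (s + 1.0))

def x(t):
    """Time-domain signal corresponding to X(s)."""
    return 1.0 - math.exp(-t)

# Left side of the theorem: x(t) for large t. Right side: s X(s) for small s.
time_domain_limit = x(50.0)
s_domain_limit = 1e-9 * X(1e-9)
print(time_domain_limit, s_domain_limit)
```

Here sX(s) has its only pole at s = -1, so the condition on the poles is satisfied and both sides approach 1.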

Example: Final Value Theorem

Find the final value of the following polynomial:

We can apply the Final Value Theorem:

We obtain the value:

Initial Value Theorem

Akin to the final value theorem, the Initial Value Theorem allows us to determine the initial value of the system (the value at time zero) from the S-Domain Equation. The initial value theorem is used most frequently to determine the starting conditions, or the "initial conditions" of a system.

[Initial Value Theorem (Laplace)]

$$x(0) = \lim_{s \to \infty} s\,X(s)$$

Common Transforms

We will now show you the transforms of the three functions we have already learned about: the unit step, the unit ramp, and the unit parabola. The transform of the unit step function is given by:

$$\mathcal{L}[u(t)] = \frac{1}{s}$$

And since the unit ramp is the integral of the unit step, we can multiply the above result by 1/s to get the transform of the unit ramp:

$$\mathcal{L}[r(t)] = \frac{1}{s^2}$$

Again, we can multiply by 1/s to get the transform of the unit parabola:

$$\mathcal{L}[p(t)] = \frac{1}{s^3}$$

Fourier Transform

The Fourier Transform is very similar to the Laplace transform. The Fourier transform uses the assumption that any finite time-domain signal can be broken into an infinite sum of sinusoidal (sine and cosine wave) signals. Under this assumption, the Fourier Transform converts a time-domain signal into its frequency-domain representation, as a function of the radial frequency, ω. For the causal signals considered in this book, the Fourier Transform is defined as such:

[Fourier Transform]

$$F(j\omega) = \mathcal{F}[f(t)] = \int_0^\infty f(t)\,e^{-j\omega t}\,dt$$

We can now show that the Fourier Transform is equivalent to the Laplace transform, when the following condition is true:

$$s = j\omega$$

Because the Laplace and Fourier Transforms are so closely related, it does not make much sense to use both transforms for all problems. This book, therefore, will concentrate on the Laplace transform for nearly all subjects, except those problems that deal directly with frequency values. For frequency problems, it makes life much easier to use the Fourier Transform representation.

Like the Laplace Transform, the Fourier Transform has been extensively tabulated. Properties of the Fourier transform, in addition to a table of common transforms is available in the Appendix.

Inverse Fourier Transform

The inverse Fourier Transform is defined as follows:

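[Inverse Fourier Transform]

$$f(t) = \mathcal{F}^{-1}[F(j\omega)] = \frac{1}{2\pi} \int_{-\infty}^{\infty} F(j\omega)\,e^{j\omega t}\,d\omega$$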

This transform is nearly identical to the Fourier Transform.

Complex Plane

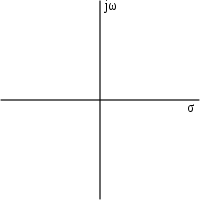

Using the above equivalence, we can show that the Laplace transform is always equal to the Fourier Transform, if the variable s is an imaginary number. However, the Laplace transform is different if s is a real or a complex variable. As such, we generally define s to have both a real part and an imaginary part, as such:

$$s = \sigma + j\omega$$

And we can show that s = jω if σ = 0.

Since the variable s can be broken down into 2 independent values, it is frequently of some value to graph the variable s on its own special "S-plane". The S-plane graphs the variable σ on the horizontal axis, and the value of jω on the vertical axis. This axis arrangement is shown at right.

Euler's Formula

There is an important result from calculus that is known as Euler's Formula, or "Euler's Relation". This important formula relates the important values of e, j, π, 1 and 0:

$$e^{j\pi} + 1 = 0$$

However, this result is derived from the following equation, setting ω to π:

[Euler's Formula]

$$e^{j\omega} = \cos(\omega) + j\sin(\omega)$$

This formula will be used extensively in some of the chapters of this book, so it is important to become familiar with it now.
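Euler's Formula, $e^{j\omega} = \cos\omega + j\sin\omega$, is easy to confirm numerically. The short sketch below compares both sides for a few arbitrarily chosen values of ω and then evaluates $e^{j\pi} + 1$; the function name is hypothetical.

```python
import cmath
import math

def euler_gap(omega):
    """Magnitude of the difference between e^{j*omega} and cos(omega) + j*sin(omega)."""
    return abs(cmath.exp(1j * omega) - complex(math.cos(omega), math.sin(omega)))

for omega in (0.0, 1.0, math.pi / 2, math.pi):
    print(omega, euler_gap(omega))

# Setting omega = pi gives e^{j*pi} + 1, which is zero up to rounding error.
identity_residual = abs(cmath.exp(1j * math.pi) + 1)
print(identity_residual)
```

The residuals are not exactly zero because π and the trigonometric functions are represented in floating point.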

MATLAB

The MATLAB symbolic toolbox contains functions to compute the Laplace and Fourier transforms automatically. The function laplace, and the function fourier can be used to calculate the Laplace and Fourier transforms of the input functions, respectively. For instance, the code:

t = sym('t');

fx = 30*t^2 + 20*t;

laplace(fx)

produces the output:

ans = 60/s^3+20/s^2

We will discuss these functions more in The Appendix.

Further reading

- Digital Signal Processing/Continuous-Time Fourier Transform

- Signals and Systems/Aperiodic Signals

- Circuit Theory/Laplace Transform

Transfer Functions

Transfer Functions

A Transfer Function is the ratio of the output of a system to the input of a system, in the Laplace domain considering its initial conditions and equilibrium point to be zero. This assumption is relaxed for systems observing transience. If we have an input function of X(s), and an output function Y(s), we define the transfer function H(s) to be:

[Transfer Function]

$$H(s) = \frac{Y(s)}{X(s)}$$

Readers who have read the Circuit Theory book will recognize the transfer function as being the impedance, admittance, impedance ratio of a voltage divider or the admittance ratio of a current divider.

Impulse Response

Time domain variables are generally written with lower-case letters. Laplace-Domain, and other transform domain variables are generally written using upper-case letters.

For comparison, we will consider the time-domain equivalent to the above input/output relationship. In the time domain, we generally denote the input to a system as x(t), and the output of the system as y(t). The relationship between the input and the output is denoted as the impulse response, h(t).

We define the impulse response as the output of the system when the input is an impulse. In other words, if the input is $x(t) = \delta(t)$, then the output is $y(t) = h(t)$.

Impulse Function

It would be handy at this point to define precisely what an "impulse" is. The Impulse Function, denoted with δ(t) is a special function defined piece-wise as follows:

[Impulse Function]

$$\delta(t) = \begin{cases} 0, & t < 0 \\ \text{undefined}, & t = 0 \\ 0, & t > 0 \end{cases}$$

The impulse function is also known as the delta function because it's denoted with the Greek lower-case letter δ. The delta function is typically graphed as an arrow towards infinity, as shown below:

It is drawn as an arrow because it is difficult to show a single point at infinity in any other graphing method. Notice how the arrow only exists at location 0, and does not exist for any other time t. The delta function works with regular time shifts just like any other function. For instance, we can graph the function δ(t - N) by shifting the function δ(t) to the right, as such:

An examination of the impulse function will show that it is related to the unit-step function as follows:

$$\delta(t) = \frac{du(t)}{dt}$$

and

$$u(t) = \int_{-\infty}^{t} \delta(\tau)\,d\tau$$

The impulse function is not defined at point t = 0, but the impulse must always satisfy the following condition, or else it is not a true impulse function:

$$\int_{-\infty}^{\infty} \delta(t)\,dt = 1$$

The response of a system to an impulse input is called the impulse response. Now, to get the Laplace Transform of the impulse function, we take the derivative of the unit step function, which means we multiply the transform of the unit step function by s:

$$\mathcal{L}[\delta(t)] = s \cdot \frac{1}{s} = 1$$

This result can be verified in the transform tables in The Appendix.

Step Response

Similar to the impulse response, the step response of a system is the output of the system when a unit step function is used as the input. The step response is a common analysis tool used to determine certain metrics about a system. Typically, when a new system is designed, the step response of the system is the first characteristic of the system to be analyzed.

Convolution

However, the impulse response cannot be used to find the system output from the system input in the same manner as the transfer function. If we have the system input and the impulse response of the system, we can calculate the system output using the convolution operation as such:

$$y(t) = h(t) * x(t)$$

Where " * " (asterisk) denotes the convolution operation. Convolution is a complicated combination of multiplication, integration and time-shifting. We can define the convolution between two functions, a(t) and b(t) as the following:

[Convolution]

$$(a * b)(t) = \int_{-\infty}^{\infty} a(\tau)\,b(t - \tau)\,d\tau$$

(The variable τ (Greek tau) is a dummy variable for integration). This operation can be difficult to perform. Therefore, many people prefer to use the Laplace Transform (or another transform) to convert the convolution operation into a multiplication operation, through the Convolution Theorem.

Time-Invariant System Response

If the system in question is time-invariant, then the general description of the system can be replaced by a convolution integral of the system's impulse response and the system input. We can call this the convolution description of a system, and define it below:

[Convolution Description]

$$y(t) = x(t) * h(t) = \int_{-\infty}^{\infty} x(\tau)\,h(t - \tau)\,d\tau$$
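The convolution description can be approximated numerically by replacing the integral with a sum. The sketch below assumes a causal input and a causal impulse response, so the integral runs only from 0 to t, and uses a simple left Riemann sum. The impulse response $e^{-t}$, the unit-step input, the step size, and the function name are assumptions chosen because the exact answer, $1 - e^{-t}$, is known.

```python
import math

def convolve_causal(x, h, t, dt=1e-3):
    """Riemann-sum approximation of the integral of x(tau) h(t - tau) from 0 to t."""
    steps = round(t / dt)
    return sum(x(k * dt) * h(t - k * dt) for k in range(steps)) * dt

def unit_step(tau):
    """Unit step input, evaluated only for tau >= 0 here."""
    return 1.0

def impulse_response(tau):
    """Hypothetical impulse response h(t) = e^{-t} for t >= 0."""
    return math.exp(-tau)

t = 2.0
approx = convolve_causal(unit_step, impulse_response, t)
exact = 1.0 - math.exp(-t)      # known step response of the system 1 / (s + 1)
print(approx, exact)
```

Every new input signal requires the whole sum to be recomputed for every output time, which gives a sense of why the transform-domain shortcut is attractive.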

Convolution Theorem

This method of solving for the output of a system is quite tedious, and in fact it can waste a large amount of time if you want to solve a system for a variety of input signals. Luckily, the Laplace transform has a special property, called the Convolution Theorem, that makes the operation of convolution easier:

- Convolution Theorem

- Convolution in the time domain becomes multiplication in the complex Laplace domain. Multiplication in the time domain becomes convolution in the complex Laplace domain.

The Convolution Theorem can be expressed using the following equations:

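[Convolution Theorem]

$$\mathcal{L}[f(t) * g(t)] = F(s)\,G(s)$$

$$\mathcal{L}[f(t)\,g(t)] = \frac{1}{2\pi j} F(s) * G(s)$$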

This also serves as a good example of the property of Duality.

Using the Transfer Function

The Transfer Function fully describes the input-output behaviour of a linear time-invariant control system. The Order, Type and Frequency response can all be taken from this specific function. Nyquist and Bode plots can be drawn from the open loop Transfer Function. These plots show the stability of the system when the loop is closed. Setting the denominator of the transfer function equal to zero gives the characteristic equation, and its roots are the poles of the system.

For all these reasons and more, the Transfer function is an important aspect of classical control systems. Let's start out with the definition:

- Transfer Function

- The Transfer function of a system is the relationship of the system's output to its input, represented in the complex Laplace domain.

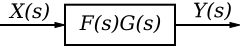

If the complex Laplace variable is s, then we generally denote the transfer function of a system as either G(s) or H(s). If the system input is X(s), and the system output is Y(s), then the transfer function can be defined as such:

$$H(s) = \frac{Y(s)}{X(s)}$$

If we know the input to a given system, and we have the transfer function of the system, we can solve for the system output by multiplying:

[Transfer Function Description]

$$Y(s) = H(s)\,X(s)$$

Example: Impulse Response

From a Laplace transform table, we know that the Laplace transform of the impulse function, δ(t) is:

$$\mathcal{L}[\delta(t)] = 1$$

So, when we plug this result into our relationship between the input, output, and transfer function, we get:

$$Y(s) = (1)\,H(s) = H(s)$$

In other words, the "impulse response" is the output of the system when we input an impulse function.

Example: Step Response

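From the Laplace Transform table, we can also see that the transform of the unit step function, u(t) is given by:

$$\mathcal{L}[u(t)] = \frac{1}{s}$$

Plugging that result into our relation for the transfer function gives us:

$$Y(s) = \frac{1}{s}\,H(s) = \frac{H(s)}{s}$$

And we can see that the transform of the step response is simply the transfer function (the transform of the impulse response) divided by s.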
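The relationship can be checked numerically for a simple case. The sketch below is only an illustration under stated assumptions: it picks a hypothetical first-order system H(s) = 1/(s + a), whose time-domain form is dy/dt = -a y + x(t), simulates the response to a unit step with a fourth-order Runge-Kutta integrator, and compares the result with the inverse transform of H(s)/s, which is (1 - e^{-at})/a.

```python
import math

def step_response_first_order(a, t_end, dt=1e-3):
    """Simulate dy/dt = -a*y + 1 (unit step input), y(0) = 0, with RK4.

    This is the time-domain form of H(s) = 1/(s + a); the function
    returns y(t_end).
    """
    def f(y):
        return -a * y + 1.0

    y = 0.0
    steps = int(round(t_end / dt))
    for _ in range(steps):
        k1 = f(y)
        k2 = f(y + 0.5 * dt * k1)
        k3 = f(y + 0.5 * dt * k2)
        k4 = f(y + dt * k3)
        y += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return y

a = 2.0
simulated = step_response_first_order(a, 1.5)
# Inverse Laplace transform of H(s)/s = 1/(s(s + a))
analytic = (1.0 - math.exp(-a * 1.5)) / a
```

The two values agree to within the integration error, which is what the division by s predicts.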

Example: MATLAB Step Response

Use MATLAB to find the step response of the following transfer function:

$$G(s) = \frac{79s^2 + 916s + 1000}{s(s + 10)^3}$$

We can separate out our numerator and denominator polynomials as such:

num = [79 916 1000]; den = [1 30 300 1000 0]; sys = tf(num, den);

% If you are using the System Identification Toolbox instead of the Control System Toolbox: sys = idtf(num, den);

Now, we can get our step response from the step function, and plot it for time from 1 to 10 seconds:

T = 1:0.001:10; step(sys, T);

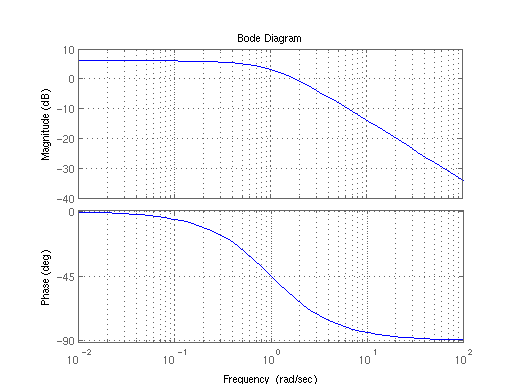

Frequency Response

The Frequency Response is similar to the Transfer function, except that it is the relationship between the system output and input in the complex Fourier Domain, not the Laplace domain. We can obtain the frequency response from the transfer function, by using the following change of variables:

$$s = j\omega$$

- Frequency Response

- The frequency response of a system is the relationship of the system's output to its input, represented in the Fourier Domain.

Because the frequency response and the transfer function are so closely related, typically only one is ever calculated, and the other is gained by simple variable substitution. However, despite the close relationship between the two representations, they are both useful individually, and are each used for different purposes.
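The variable substitution is easy to carry out numerically. The following sketch is an illustration rather than a reference implementation: the helper names `polyval` and `frequency_response` are hypothetical, and the transfer function is assumed to be given as numerator and denominator coefficient lists with the highest power of s first (the same convention as the MATLAB `num` and `den` vectors).

```python
import cmath
import math

def polyval(coeffs, s):
    """Evaluate a polynomial at s using Horner's rule (highest power first)."""
    result = 0
    for c in coeffs:
        result = result * s + c
    return result

def frequency_response(num, den, omega):
    """Evaluate H(s) = num(s)/den(s) at s = j*omega."""
    s = 1j * omega
    return polyval(num, s) / polyval(den, s)

# Example: H(s) = 1/(s + 1) evaluated at omega = 1 rad/s
H = frequency_response([1.0], [1.0, 1.0], 1.0)
gain = abs(H)                            # magnitude of the response
phase_deg = math.degrees(cmath.phase(H)) # phase in degrees
```

For this first-order example the gain at ω = 1 rad/s is 1/√2 and the phase is -45 degrees, the familiar corner-frequency values.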

Sampled Data Systems

Ideal Sampler

In this chapter, we are going to introduce the ideal sampler and the Star Transform. First, we need to introduce (or review) the Geometric Series infinite sum. The results of this sum will be very useful in calculating the Star Transform, later.

Consider a sampler device that operates as follows: every T seconds, the sampler reads the current value of the input signal at that exact moment. The sampler then holds that value on the output for T seconds, before taking the next sample. We have a generic input to this system, f(t), and our sampled output will be denoted f*(t). We can then show the following relationship between the two signals:

$$f^*(t) = f(0)\,[u(t) - u(t-T)] + f(T)\,[u(t-T) - u(t-2T)] + \cdots + f(nT)\,[u(t-nT) - u(t-(n+1)T)] + \cdots$$

Note that the value of f*(t) at time t = 1.5T is the same as at time t = T. This relationship works for any fractional value.
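The behavior of this sampler can be sketched in a few lines. The function name `sample_and_hold` is hypothetical, and the sketch assumes sampling starts at t = 0: the output at any time t is the input value at the most recent sampling instant, kT, where k is the largest integer with kT ≤ t.

```python
import math

def sample_and_hold(f, T):
    """Return f_star(t), which holds f(kT) over each interval [kT, (k+1)T)."""
    def f_star(t):
        k = math.floor(t / T)  # index of the most recent sample
        return f(k * T)
    return f_star

T = 0.5
f_star = sample_and_hold(math.sin, T)

at_T = f_star(T)                # sample taken at t = T
at_1_5_T = f_star(1.5 * T)      # still holding the sample from t = T
```

Note that for sampling periods that are not exactly representable in floating point, `t / T` can land slightly below an integer, so a practical implementation would need to treat times at the sampling instants with more care.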

Taking the Laplace Transform of this infinite sequence yields a special result called the Star Transform, which some texts call the "Starred Transform".

Geometric Series

Before we talk about the Star Transform or even the Z-Transform, it is useful for us to review the mathematical background behind solving infinite series. Specifically, because of the nature of these transforms, we are going to look at methods to solve for the sum of a geometric series.

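A geometric series is a sum of values with increasing exponents, as such:

$$\sum_{k=0}^{n} ar^k = ar^0 + ar^1 + ar^2 + \cdots + ar^n$$

In the equation above, notice that each term in the series has a coefficient value, a. We can optionally factor out this coefficient, if the resulting equation is easier to work with:

$$a\sum_{k=0}^{n} r^k = a\left(r^0 + r^1 + r^2 + \cdots + r^n\right)$$

Once we have a series in either of these formats, we can conveniently solve for the total sum of this series using the following equation (valid for r ≠ 1):

$$a\sum_{k=0}^{n} r^k = a\,\frac{1 - r^{n+1}}{1 - r}$$

Now suppose that the series starts at an index other than zero, for instance at k = 1 or k = 100. Starting at k = 1, we have:

$$\sum_{k=1}^{n} ar^k = ar^1 + ar^2 + \cdots + ar^n$$

We can generalize the sum of a series that starts at an arbitrary index m as follows:

[Geometric Series]

$$\sum_{k=m}^{n} ar^k = \frac{a\left(r^m - r^{n+1}\right)}{1 - r}$$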

With that result out of the way, we now need to make sure that this series converges. In the above sum, n approaches infinity (because this is an infinite sum), so any term that contains the variable n matters when we are trying to make the series converge. If we examine the above equation, we see that there is exactly one term in the entire result with an n in it, and from that we can set a fundamental requirement to govern the geometric series:

$$\lim_{n \to \infty} r^{n+1} \quad \text{must exist and be finite}$$

To satisfy this requirement, we must satisfy the following condition:

[Geometric convergence condition]

$$|r| < 1$$

Therefore, we come to the final result: the geometric series converges if and only if the magnitude of r is less than one. In that case the term containing n decays to zero, and the sum of the infinite series starting from the zeroth power is a/(1 - r).
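The closed-form result is easy to check against a term-by-term sum. The sketch below is illustrative and the function names are hypothetical; it assumes the standard partial-sum formula for a series running from index m to index n, namely a(r^m - r^(n+1))/(1 - r) with r ≠ 1.

```python
def geometric_closed_form(a, r, m, n):
    """Closed form of sum_{k=m}^{n} a * r**k, valid for r != 1."""
    return a * (r**m - r**(n + 1)) / (1 - r)

def geometric_direct_sum(a, r, m, n):
    """Add the terms a * r**k one at a time for k = m, ..., n."""
    return sum(a * r**k for k in range(m, n + 1))

a, r = 3.0, 0.5
closed = geometric_closed_form(a, r, 1, 20)
direct = geometric_direct_sum(a, r, 1, 20)

# With |r| < 1 the r**(n + 1) term dies out, so for large n the sum
# approaches a * r**m / (1 - r); here that limit is 3.0.
nearly_infinite = geometric_closed_form(a, r, 1, 60)
```

Repeating the experiment with a ratio whose magnitude exceeds one shows the partial sums growing without bound as n increases, which is the divergent case.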

The Star Transform

The Star Transform is defined as such:

[Star Transform]

$$F^*(s) = \mathcal{L}[f^*(t)] = \sum_{k=0}^{\infty} f(kT)\, e^{-skT}$$

The Star Transform depends on the sampling time T and is different for a single signal depending on the frequency at which the signal is sampled. Since the Star Transform is defined as an infinite series, it is important to note that some inputs to the Star Transform will not converge, and therefore some functions do not have a valid Star Transform. Also, it is important to note that the Star Transform may only be valid under a particular region of convergence. We will cover this topic more when we discuss the Z-transform.
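As an illustration of both the dependence on T and the convergence requirement, the sketch below evaluates a truncated Star Transform sum. It assumes the usual series definition, F*(s) = Σ f(kT) e^{-skT} for k = 0, 1, 2, ..., and the function name `star_transform` is hypothetical. For the test signal f(t) = e^{-at} the series is geometric with ratio e^{-(s+a)T}, so it converges when Re(s) > -a and sums to 1/(1 - e^{-(s+a)T}).

```python
import cmath

def star_transform(f, s, T, terms=200):
    """Truncated Star Transform: sum of f(kT) * exp(-s*k*T) for k < terms."""
    return sum(f(k * T) * cmath.exp(-s * k * T) for k in range(terms))

a = 1.0
s = 0.5 + 2.0j  # inside the region of convergence, since Re(s) > -a

def f(t):
    return cmath.exp(-a * t)

# The same signal gives different transforms for different sampling periods.
approx_fast = star_transform(f, s, T=0.1)
approx_slow = star_transform(f, s, T=0.2)

closed_fast = 1.0 / (1.0 - cmath.exp(-(s + a) * 0.1))
closed_slow = 1.0 / (1.0 - cmath.exp(-(s + a) * 0.2))
```

The truncation at 200 terms is an arbitrary choice that is adequate here because the ratio has magnitude well below one; values of s closer to the edge of the region of convergence would need more terms.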

Star ↔ Laplace

Complex Analysis/Residue Theory

The Laplace Transform and the Star Transform are clearly related, because we obtained the Star Transform by using the Laplace Transform on a time-domain signal. However, the method to convert between the two results can be a slightly difficult one. To find the Star Transform of a Laplace function, we must take the residues of the Laplace equation, as such:

This math is advanced for most readers, so we can also use an alternate method, as follows:

Neither of these methods is particularly easy, however, and therefore we will not discuss the relationship between the Laplace transform and the Star Transform any more than is absolutely necessary in this book. Suffice it to say, however, that the Laplace transform and the Star Transform are related mathematically.

Star + Laplace

In some systems, we may have components that are both continuous and discrete in nature. For instance, our feedback loop may consist of an Analog-To-Digital converter, followed by a computer (for processing), and then a Digital-To-Analog converter. In this case, the computer is acting on a digital signal, but the rest of the system is acting on continuous signals. Star transforms can interact with Laplace transforms in some of the following ways:

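Given:

$$Y(s) = X^*(s)\,H(s)$$

Then:

$$Y^*(s) = X^*(s)\,H^*(s)$$

Given:

$$Y(s) = X(s)\,H(s)$$

Then:

$$Y^*(s) = \overline{XH}^*(s), \qquad Y^*(s) \neq X^*(s)\,H^*(s)$$

Where $\overline{XH}^*(s)$ is the Star Transform of the product X(s)H(s).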

Convergence of the Star Transform

The Star Transform is defined as an infinite series, so it is critically important that the series converge (that is, approach a finite value), or else the result will be nonsensical. Since the Star Transform is a geometric series (for many input signals), we can use geometric series analysis to show whether the series converges, and even under what particular conditions the series converges. The restrictions on the star transform that allow it to converge are known as the region of convergence (ROC) of the transform. Typically a transform must be accompanied by the explicit mention of the ROC.

The Z-Transform

Let us say now that we have a discrete data set that is sampled at regular intervals. We can call this set x[n]:

x[n] = [ x[0] x[1] x[2] x[3] x[4] ... ]

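We can utilize a special transform, called the Z-transform, to make dealing with this set easier:

[Z Transform]

$$X(z) = \mathcal{Z}\{x[n]\} = \sum_{n=-\infty}^{\infty} x[n]\, z^{-n}$$

Z-transform properties and a table of common transforms can be found in the Appendix.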

Like the Star Transform, the Z Transform is defined as an infinite series, and therefore we need to worry about convergence. In fact, different signals can have identical Z-Transform expressions but different regions of convergence (ROC). Therefore, when talking about the Z transform, you must include the ROC, or you are missing valuable information.
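A small numerical sketch can make the series concrete. It assumes the standard definition X(z) = Σ x[n] z^{-n} and restricts attention to a finite causal sequence (x[n] = 0 for n < 0), so the sum starts at n = 0; the function name `z_transform` is hypothetical.

```python
def z_transform(x, z):
    """Evaluate X(z) = sum of x[n] * z**(-n) for a finite causal sequence x."""
    return sum(xn * z ** (-n) for n, xn in enumerate(x))

# A short sequence: X(z) = 1 + 2/z + 3/z**2
short = z_transform([1.0, 2.0, 3.0], 2.0)

# A truncated geometric sequence x[n] = 0.5**n. Inside the ROC |z| > 0.5
# the sum approaches the closed form z / (z - 0.5).
geometric = [0.5 ** n for n in range(60)]
inside_roc = z_transform(geometric, 2.0)
closed_form = 2.0 / (2.0 - 0.5)
```

Evaluating the same truncated sum at a point outside the ROC, such as z = 0.25, gives a value that keeps growing as more terms are included, even though the closed-form expression z/(z - 0.5) still returns a finite number there. This is why the ROC has to accompany the transform.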

Z Transfer Functions

Like the Laplace Transform, in the Z-domain we can use the input-output relationship of the system to define a transfer function.

The transfer function in the Z domain operates exactly the same as the transfer function in the S Domain:

$$H(z) = \frac{Y(z)}{X(z)}$$

$$Y(z) = H(z)\,X(z)$$

Similarly, the value h[n] which represents the response of the digital system is known as the impulse response of the system. It is important to note, however, that the definition of an "impulse" is different in the analog and digital domains.
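In the digital domain the impulse is the unit sample: a sequence that equals 1 at n = 0 and 0 everywhere else, rather than the infinitely tall, infinitely narrow δ(t). The sketch below illustrates this with a hypothetical first-order system chosen for the example, H(z) = 1/(1 - p z^{-1}), which corresponds to the difference equation y[n] = p y[n-1] + x[n]. Feeding the unit sample into the difference equation produces the impulse response h[n] = p^n.

```python
def first_order_filter(x, pole):
    """Run y[n] = pole * y[n-1] + x[n] with zero initial condition."""
    y = []
    previous = 0.0
    for xn in x:
        previous = pole * previous + xn
        y.append(previous)
    return y

# Discrete impulse (unit sample): 1 at n = 0, then zeros.
impulse = [1.0] + [0.0] * 7
h = first_order_filter(impulse, 0.5)
```

The resulting list is 1, 0.5, 0.25, and so on, which is the sequence whose Z-transform is the assumed H(z).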

Inverse Z Transform

The inverse Z Transform is defined by the following path integral:

[Inverse Z Transform]

$$x[n] = \mathcal{Z}^{-1}\{X(z)\} = \frac{1}{2\pi j}\oint_C X(z)\, z^{n-1}\, dz$$

Where C is a counterclockwise closed path encircling the origin and entirely in the region of convergence (ROC). The contour or path, C, must encircle all of the poles of X(z).

This math is relatively advanced compared to some other material in this book, and therefore little or no further attention will be paid to solving the inverse Z-Transform in this manner. Z transform pairs are heavily tabulated in reference texts, so many readers can consider that to be the primary method of solving for inverse Z transforms. There are a number of Z-transform pairs available in table form in The Appendix.
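For readers who want to see the contour integral in action without doing residue calculus, it can be approximated numerically. The sketch below is an illustration under stated assumptions, not a recommended solution method: it takes the contour C to be a circle of the given radius, assumed to lie inside the ROC, and uses the standard inversion integral x[n] = (1/2πj)∮ X(z) z^{n-1} dz. Substituting z = r e^{jθ} turns the integral into the average of X(z) z^n over equally spaced points on the circle. The function name `inverse_z` is hypothetical.

```python
import cmath
import math

def inverse_z(X, n, radius=1.0, points=256):
    """Approximate x[n] by averaging X(z) * z**n over a circle in the ROC."""
    total = 0j
    for k in range(points):
        z = radius * cmath.exp(2j * math.pi * k / points)
        total += X(z) * z ** n
    return total / points

def X(z):
    # Table pair: x[n] = 0.5**n for n >= 0  <->  z / (z - 0.5), ROC |z| > 0.5
    return z / (z - 0.5)

x3 = inverse_z(X, 3)  # should be close to 0.5**3 = 0.125
```

The unit circle works here because it lies inside the ROC |z| > 0.5 and encloses the pole at z = 0.5. In practice, looking up the pair in a table remains far quicker.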

Final Value Theorem

Like the Laplace Transform, the Z Transform also has an associated final value theorem:

[Final Value Theorem (Z)]

$$\lim_{n \to \infty} x[n] = \lim_{z \to 1}\,(z - 1)\,X(z)$$

This equation can be used to find the steady-state response of a system, and also to calculate the steady-state error of the system.
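A quick numerical check shows how the theorem is used. The sketch assumes the common form of the theorem, lim x[n] = lim (z - 1)X(z) as z approaches 1, which applies only when the signal actually settles to a finite value. The example signal x[n] = 1 - 0.5^n and the function names are chosen here for illustration; its transform follows from the table pairs for a unit step and a decaying exponential.

```python
def X(z):
    """Z-transform of x[n] = 1 - 0.5**n for n >= 0 (ROC |z| > 1)."""
    return z / (z - 1.0) - z / (z - 0.5)

def final_value_estimate(X, eps=1e-6):
    """Approximate the limit of (z - 1) * X(z) as z -> 1 from outside."""
    z = 1.0 + eps
    return (z - 1.0) * X(z)

estimate = final_value_estimate(X)   # close to 1
direct = 1.0 - 0.5 ** 60             # x[n] evaluated at a large n
```

Both numbers are close to 1, the steady-state value of the sequence. Applying the same formula to a signal that never settles, such as a growing exponential, would produce a number with no physical meaning, so the settling condition should be checked first.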

Star ↔ Z

The Z transform is related to the Star transform through the following change of variables:

$$z = e^{sT}$$

Notice that in the Z domain, we don't maintain any information on the sampling period, so converting to the Z domain from a Star Transformed signal loses that information. When converting back to the star domain however, the value for T can be reinserted into the equation, if it is still available.

Also of some importance is the fact that the Z transform is bilateral (two-sided), while the Star Transform is unilateral (one-sided). This means that we can only convert between the two transforms if the sampled signal is zero for all values of n < 0.

Because the two transforms are so closely related, it can be said that the Z transform is simply a notational convenience for the Star Transform. With that said, this book could easily use the Star Transform for all problems, and ignore the added burden of Z transform notation entirely. A common example of this is Richard Hamming's book "Numerical Methods for Scientists and Engineers" which uses the Fourier Transform for all problems, considering the Laplace, Star, and Z-Transforms to be merely notational conveniences. However, the Control Systems wikibook takes the view that the correct use of different transforms can make problems easier to solve, and we will therefore use a multi-transform approach.

Z plane

The lower-case z is the name of the variable, and the upper-case Z is the name of the Transform and the plane.

z is a complex variable with a real part and an imaginary part. In other words, we can define z as such:

$$z = \operatorname{Re}(z) + j\operatorname{Im}(z)$$

Since z can be broken down into two independent components, it often makes sense to graph the variable z on the Z-plane. In the Z-plane, the horizontal axis represents the real part of z, and the vertical axis represents the imaginary part of z.

Notice also that if we define z in terms of the star-transform relation:

$$z = e^{sT}$$

we can separate out s into real and imaginary parts:

$$s = \sigma + j\omega$$

We can plug this into our equation for z:

$$z = e^{(\sigma + j\omega)T} = e^{\sigma T}\, e^{j\omega T}$$

Through Euler's formula, we can separate out the complex exponential as such:

$$z = e^{\sigma T}\left(\cos(\omega T) + j\sin(\omega T)\right)$$

If we define two new variables, M and φ:

$$M = e^{\sigma T}, \qquad \phi = \omega T$$

We can write z in terms of M and φ. Notice that it has the form of Euler's formula:

$$z = M\cos(\phi) + jM\sin(\phi) = Me^{j\phi}$$

Which is clearly a polar representation of z, with the magnitude (M) based on the real part of s, and the angle (φ) based on the imaginary part of s.
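The mapping from the s-plane to the Z-plane can be verified with complex arithmetic. This is a minimal sketch assuming the relation z = e^{sT}; the function name `s_to_z` and the numerical values are chosen only for illustration.

```python
import cmath
import math

def s_to_z(s, T):
    """Map a point in the s-plane to the Z-plane through z = exp(s*T)."""
    return cmath.exp(s * T)

sigma, omega, T = -2.0, 3.0, 0.1
z = s_to_z(complex(sigma, omega), T)

M = abs(z)            # magnitude, expected to equal exp(sigma * T)
phi = cmath.phase(z)  # angle in radians, expected to equal omega * T
```

Because the real part of s is negative here, M is less than one and the point falls inside the unit circle. Note also that `cmath.phase` returns an angle between -π and π, so frequencies with |ωT| > π wrap around onto angles already used by lower frequencies.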

Region of Convergence

To best teach the region of convergence (ROC) for the Z-transform, we will do a quick example.

We have the following discrete series of a decaying exponential:

Now, we can plug this function into the Z transform equation:

Note that we can remove the unit step function, and change the limits of the sum: