Discrete Mathematics/Print version


The current, editable version of this book is available in Wikibooks, the open-content textbooks collection, at

https://en.wikibooks.org/wiki/Discrete_Mathematics

Introductory discrete mathematics

Set Theory

Introduction

Set Theory starts very simply: it examines whether an object belongs, or does not belong, to a set of objects which has been described in some non-ambiguous way. From this simple beginning, an increasingly complex (and useful!) series of ideas can be developed, which lead to notations and techniques with many varied applications.

Definition: Set

A set can be defined as an unordered collection of entities that are related because they obey a certain rule. This definition may sound vague at first, so the points below make it more precise.

'Entities' may be anything at all: numbers, people, shapes, cities, bits of text, and so on.

The key fact about the 'rule' they all obey is that it must be well-defined. In other words, it must be possible to decide clearly whether or not any given entity obeys it. If the entities we're talking about are words, for example, a well-defined rule is:

X is English

A rule which is not well-defined (and therefore couldn't be used to define a set) might be:

X is hard to spell

- where X is any word

Elements

An entity that belongs to a given set is called an element of that set. For example:

Henry VIII is an element of the set of Kings of England: Henry VIII ∈ {Kings of England}

Set Notation

- To list the elements of a set, we enclose them in curly brackets, separated by commas. For example: {-3, -2, -1, 0, 1, 2, 3}

- The elements of a set may also be described verbally: {integers between -3 and 3 inclusive}

- The set builder notation may be used to describe sets that are too tedious to list explicitly. To denote an arbitrary element of the set, we use a letter such as x: {x | x is an integer and |x| < 4}

- or equivalently {x | x ∈ Z, |x| < 4}

- The symbols ∈ and ∉ denote the inclusion and exclusion of an element, respectively: 3 ∈ {-3, -2, -1, 0, 1, 2, 3} and 4 ∉ {-3, -2, -1, 0, 1, 2, 3}

- Sets can contain an infinite number of elements, such as the set of prime numbers. Ellipses are used to denote the infinite continuation of a pattern: {2, 3, 5, 7, 11, ...}

Note that the use of ellipses may cause ambiguity, because the listed elements can fit more than one pattern: {3, 5, 7, ...}, for example, could be read as the odd primes or as the odd integers from 3 upwards.

- Sets will usually be denoted using upper case letters: A, B, ...

- Elements will usually be denoted using lower case letters: a, b, ...
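The notations described above correspond closely to Python's built-in `set` type. The following is only a minimal sketch: the variable names and the particular integers are illustrative assumptions, and the set-builder form has to be restricted to a finite range of candidates.

```python
# Listing the elements explicitly, separated by commas.
listed = {-3, -2, -1, 0, 1, 2, 3}

# Set-builder notation {x | x is an integer and |x| < 4}, written as a
# set comprehension over a finite (assumed) range of candidate integers.
built = {x for x in range(-10, 11) if abs(x) < 4}

# `in` plays the role of "is an element of";
# `not in` plays the role of "is not an element of".
three_is_member = 3 in listed
four_is_excluded = 4 not in listed

print(listed == built, three_is_member, four_is_excluded)
```

Both descriptions produce the same set, which is the sense in which the two notations are equivalent.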

Special Sets

The universal set

The set of all the entities in the current context is called the universal set, or simply the universe. It is denoted by U.

The context may be a homework exercise, for example, where the universal set is limited to the particular entities under consideration in that exercise. More generally, it may be any problem in which it is clear which entities are being discussed.

The empty set

The set containing no elements at all is called the null set, or empty set. It is denoted by a pair of empty braces: { } or by the symbol ø.

It may seem odd to define a set that contains no elements. Bear in mind, however, that one may be looking for solutions to a problem where it isn't clear at the outset whether or not such solutions even exist. If it turns out that there isn't a solution, then the set of solutions is empty.

For example:

- If U = {whole numbers} then {x | x² = 10} = ø.

- If U = {real numbers} then {x | x² = -1} = ø.

Operations on the empty set

Operations performed on the empty set (as a set of things to be operated upon) can also be confusing. (Such operations are nullary operations.) For example, the sum of the elements of the empty set is zero, but the product of the elements of the empty set is one (see empty product). This may seem odd, since there are no elements of the empty set, so how could it matter whether they are added or multiplied (since “they” do not exist)? Ultimately, the results of these operations say more about the operation in question than about the empty set. For instance, notice that zero is the identity element for addition, and one is the identity element for multiplication.
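Python's standard library follows the same convention, which gives a quick way to see the empty sum and empty product in action. This is a small sketch, assuming Python 3.8 or later for `math.prod`; the variable names are illustrative.

```python
import math

empty = set()

# Adding up no elements yields the identity element for addition.
empty_sum = sum(empty)

# Multiplying no elements yields the identity element for multiplication.
empty_product = math.prod(empty)

print(empty_sum, empty_product)
```

Starting from the identity element means that adding or multiplying further elements later gives the expected result.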

Special numerical sets

Several sets are used so often that they are given special symbols.

Natural numbers

The 'counting' numbers (or whole numbers), starting at 1, are called the natural numbers. This set is sometimes denoted by N. So N = {1, 2, 3, ...}

Note that, when we write this set by hand, we can't write in bold type so we write an N in blackboard bold font: ℕ

Integers

All whole numbers, positive, negative and zero, form the set of integers. It is sometimes denoted by Z. So Z = {..., -3, -2, -1, 0, 1, 2, 3, ...}

In blackboard bold, it looks like this: ℤ

Real numbers

If we expand the set of integers to include all decimal numbers, we form the set of real numbers. The set of reals is sometimes denoted by R.

A real number may have a finite number of digits after the decimal point (e.g. 3.625), or an infinite number of decimal digits. In the case of an infinite number of digits, these digits may:

- recur; e.g. 8.127127127...

- ... or they may not recur; e.g. 3.141592653...

In blackboard bold: ℝ

Rational numbers

Those real numbers whose decimal digits are finite in number, or which recur, are called rational numbers. The set of rationals is sometimes denoted by the letter Q.

A rational number can always be written as an exact fraction p/q, where p and q are integers. If q equals 1, the fraction is just the integer p. Note that q may NOT equal zero, as the value is then undefined.

- For example: 0.5, -17, 2/17, 82.01, 3.282828... are all rational numbers.

In blackboard bold: ℚ
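To see that each of these really is a fraction p/q of integers, one can write them with Python's `fractions.Fraction`. This is a sketch only; the dictionary name `examples` is an illustrative choice, and the recurring decimal is converted using the fact that 0.282828... = 28/99.

```python
from fractions import Fraction

examples = {
    "0.5": Fraction(1, 2),
    "-17": Fraction(-17, 1),          # an integer is a fraction with q = 1
    "2/17": Fraction(2, 17),
    "82.01": Fraction(8201, 100),     # finitely many decimal digits
    # 3.282828... = 3 + 28/99, because 0.282828... = 28/99
    "3.282828...": Fraction(3) + Fraction(28, 99),
}

for text, value in examples.items():
    print(text, "=", value.numerator, "/", value.denominator)
```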

Irrational numbers

If a real number can't be represented exactly by a fraction p/q, where p and q are integers, it is said to be irrational.

- Examples include: √2, √3, π.

Set Theory Exercise 1

Click the link for Set Theory Exercise 1

Relationships between Sets

We’ll now look at various ways in which sets may be related to one another.

Equality

Two sets A and B are said to be equal if and only if they have exactly the same elements. In this case, we simply write:

A = B

Note two further facts about equal sets:

- The order in which elements are listed does not matter.

- If an element is listed more than once, any repeat occurrences are ignored.

So, for example, the following sets are all equal:

{1, 2, 3}, {3, 2, 1} and {1, 1, 2, 3, 2, 2}

(You may wonder why one would ever come to write a set like {1, 1, 2, 3, 2, 2}. You may recall that when we defined the empty set we noted that there may be no solutions to a particular problem - hence the need for an empty set. Well, here we may be trying several different approaches to solving a problem, some of which in fact lead us to the same solution. When we come to consider the distinct solutions, however, any such repetitions would be ignored.)
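Python's `set` type behaves in the same way, so the two facts about equality can be checked directly. This is a brief sketch; the names `first`, `second` and `third` are purely illustrative.

```python
first = {1, 2, 3}
second = {3, 2, 1}                  # same elements, listed in another order
third = set([1, 1, 2, 3, 2, 2])     # repeated entries are ignored

all_equal = first == second == third
distinct_count = len(third)

print(all_equal, distinct_count)
```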

Subsets

If all the elements of a set A are also elements of a set B, then we say that A is a subset of B and we write:

A ⊆ B

For example:

- If T = {2, 4, 6, 8, 10} and E = {even integers}, then T ⊆ E

- If A = {alphanumeric characters} and P = {printable characters}, then A ⊆ P

- If Q = {quadrilaterals} and F = {plane figures bounded by four straight lines}, then Q ⊆ F

Notice that A ⊆ B does not imply that B must necessarily contain extra elements that are not in A; the two sets could be equal – as indeed Q and F are above. However, if, in addition, B does contain at least one element that isn’t in A, then we say that A is a proper subset of B. In such a case we would write:

A ⊂ B

In the examples above:

- E contains ... -4, -2, 0, 2, 4, 6, 8, 10, 12, 14, ... , so T ⊂ E

- P contains $, ;, &, ..., so A ⊂ P

- But Q and F are just different ways of saying the same thing, so Q = F

The use of ⊂ and ⊆ is clearly analogous to the use of < and ≤ when comparing two numbers.

Notice also that every set is a subset of the universal set, and the empty set is a subset of every set.

(You might be curious about this last statement: how can the empty set be a subset of anything, when it doesn’t contain any elements? The point here is that for every set A, the empty set doesn’t contain any elements that aren't in A. So ø ⊆ A for all sets A.)

Finally, note that if A ⊆ B and B ⊆ A, then A and B must contain exactly the same elements, and are therefore equal. In other words:

- If A ⊆ B and B ⊆ A, then A = B
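The subset relations can be tried out with Python's comparison operators on sets, where `<=` means "is a subset of" and `<` means "is a proper subset of". The sketch below is illustrative only: the names `T` and `E` are assumptions, and `E` is a finite stand-in for the even integers, since an infinite set cannot be stored.

```python
T = {2, 4, 6, 8, 10}
E = {n for n in range(-20, 21) if n % 2 == 0}   # finite stand-in for the even integers

is_subset = T <= E            # every element of T is in E
is_proper_subset = T < E      # ... and E has at least one extra element
self_subset = T <= T          # a set is a subset of itself
self_proper = T < T           # but never a proper subset of itself
empty_is_subset = set() <= T  # the empty set is a subset of every set


def equal_by_mutual_inclusion(a, b):
    """Return True when a is a subset of b and b is a subset of a."""
    return a <= b and b <= a


print(is_subset, is_proper_subset, self_subset, self_proper, empty_is_subset)
print(equal_by_mutual_inclusion({1, 2, 3}, {3, 2, 1}))
```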

Disjoint

Two sets are said to be disjoint if they have no elements in common. For example:

If A = {even numbers} and B = {1, 3, 5, 11, 19}, then A and B are disjoint sets

Venn Diagrams

A Venn diagram can be a useful way of illustrating relationships between sets.

In a Venn diagram:

- The universal set is represented by a rectangle. Points inside the rectangle represent elements that are in the universal set; points outside represent things not in the universal set. You can think of this rectangle, then, as a 'fence' keeping unwanted things out - and concentrating our attention on the things we're talking about.

- Other sets are represented by loops, usually oval or circular in shape, drawn inside the rectangle. Again, points inside a given loop represent elements in the set it represents; points outside represent things not in the set.

On the left (Fig. 1), the sets A and B are disjoint, because the loops don't overlap.

On the right (Fig. 2), A is a subset of B, because the loop representing set A is entirely enclosed by loop B.

Venn diagrams: Worked Examples


Example 1

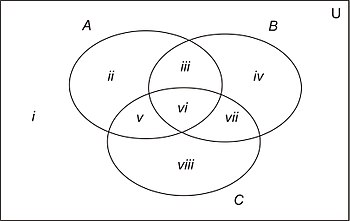

Fig. 3 represents a Venn diagram showing two sets A and B, in the general case where nothing is known about any relationships between the sets.

Note that the rectangle representing the universal set is divided into four regions, labelled i, ii, iii and iv.

What can be said about the sets A and B if it turns out that:

- (a) region ii is empty?

- (b) region iii is empty?

(a) If region ii is empty, then A contains no elements that are not in B. So A is a subset of B, and the diagram should be re-drawn like Fig 2 above.

(b) If region iii is empty, then A and B have no elements in common and are therefore disjoint. The diagram should then be re-drawn like Fig 1 above.

Example 2

- (a) Draw a Venn diagram to represent three sets A, B and C, in the general case where nothing is known about possible relationships between the sets.

- (b) Into how many regions is the rectangle representing U divided now?

- (c) Discuss the relationships between the sets A, B and C, when various combinations of these regions are empty.

(a) The diagram in Fig. 4 shows the general case of three sets where nothing is known about any possible relationships between them.

(b) The rectangle representing U is now divided into 8 regions, indicated by the Roman numerals i to viii.

(c) Various combinations of empty regions are possible. In each case, the Venn diagram can be re-drawn so that empty regions are no longer included. For example:

- If region ii is empty, the loop representing A should be made smaller, and moved inside B and C to eliminate region ii.

- If regions ii, iii and iv are empty, make A and B smaller, and move them so that they are both inside C (thus eliminating all three of these regions), but do so in such a way that they still overlap each other (thus retaining region vi).

- If regions iii and vi are empty, 'pull apart' loops A and B to eliminate these regions, but keep each loop overlapping loop C.

- ...and so on. Drawing Venn diagrams for each of the above examples is left as an exercise for the reader.

Example 3

The following sets are defined:

- U = {1, 2, 3, …, 10}

- A = {2, 3, 7, 8, 9}

- B = {2, 8}

- C = {4, 6, 7, 10}

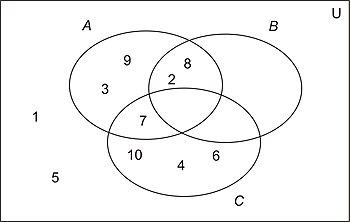

Using the two-stage technique described below, draw a Venn diagram to represent these sets, marking all the elements in the appropriate regions.

The technique is as follows:

- Draw a 'general' 3-set Venn diagram, like the one in Example 2.

- Go through the elements of the universal set one at a time, once only, entering each one into the appropriate region of the diagram.

- Re-draw the diagram, if necessary, moving loops inside one another or apart to eliminate any empty regions.

Don't begin by entering the elements of set A, then set B, then C – you'll risk missing elements out or including them twice!

Solution

After drawing the three empty loops in a diagram looking like Fig. 4 (but without the Roman numerals!), go through each of the ten elements in U - the numbers 1 to 10 - asking each one three questions; like this:

First element: 1

- Are you in A? No

- Are you in B? No

- Are you in C? No

A 'no' to all three questions means that the number 1 is outside all three loops. So write it in the appropriate region (region number i in Fig. 4).

Second element: 2

- Are you in A? Yes

- Are you in B? Yes

- Are you in C? No

Yes, yes, no: so the number 2 is inside A and B but outside C. It therefore goes in region iii.

...and so on, with elements 3 to 10.

The resulting diagram looks like Fig. 5.

The final stage is to examine the diagram for empty regions - in this case the regions we called iv, vi and vii in Fig. 4 - and then re-draw the diagram to eliminate these regions. When we've done so, we shall clearly see the relationships between the three sets.

So we need to:

- pull B and C apart, since they don't have any elements in common.

- push B inside A since it doesn't have any elements outside A.

The finished result is shown in Fig. 6.
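The 'three questions' technique can also be sketched in Python. The code below is an illustration under stated assumptions, not part of the original solution: it labels each region by its triple of answers (in A?, in B?, in C?) rather than by the Roman numerals of Fig. 4, because the figure's numbering is not reproduced here.

```python
from itertools import product

U = set(range(1, 11))
A = {2, 3, 7, 8, 9}
B = {2, 8}
C = {4, 6, 7, 10}

# Go through the elements of U once only, asking each the three questions.
regions = {}
for x in sorted(U):
    answers = (x in A, x in B, x in C)
    regions.setdefault(answers, []).append(x)

# Any combination of answers that no element gave is an empty region.
empty_regions = [
    combo for combo in product([True, False], repeat=3) if combo not in regions
]

for answers, members in regions.items():
    print(answers, members)
print("empty:", empty_regions)

# The two observations used to re-draw the diagram:
b_and_c_disjoint = B.isdisjoint(C)   # pull B and C apart
b_inside_a = B <= A                  # push B inside A
```

Three of the eight answer combinations never occur, which matches the three empty regions found in the solution.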

The regions in a Venn Diagram and Truth Tables

Perhaps you've realized that adding an additional set to a Venn diagram doubles the number of regions into which the rectangle representing the universal set is divided. This gives us a very simple pattern, as follows:

- With one set loop, there will be just two regions: the inside of the loop and its outside.

- With two set loops, there'll be four regions.

- With three loops, there'll be eight regions.

- ...and so on.

It's not hard to see why this should be so. Each new loop we add to the diagram divides each existing region into two, thus doubling the number of regions altogether.

| In A? | In B? | In C? |
|---|---|---|
| Y | Y | Y |
| Y | Y | N |
| Y | N | Y |
| Y | N | N |
| N | Y | Y |
| N | Y | N |
| N | N | Y |
| N | N | N |

But there's another way of looking at this. In the solution to Example 3 above, we asked three questions of each element: Are you in A? Are you in B? and Are you in C? Now there are obviously two possible answers to each of these questions: yes and no. When we combine the answers to three questions like this, one after the other, there are then 2³ = 8 possible sets of answers altogether. Each of these eight possible combinations of answers corresponds to a different region on the Venn diagram.

The complete set of answers resembles very closely a Truth Table - an important concept in Logic, which deals with statements which may be true or false. The table above shows the eight possible combinations of answers for 3 sets A, B and C.

You'll find it helpful to study the patterns of Y's and N's in each column.

- As you read down column C, the letter changes on every row: Y, N, Y, N, Y, N, Y, N

- Reading down column B, the letters change on every other row: Y, Y, N, N, Y, Y, N, N

- Reading down column A, the letters change every four rows: Y, Y, Y, Y, N, N, N, N
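The table of answers can be generated mechanically. The following sketch uses `itertools.product` from the Python standard library; the function name `answer_table` is a hypothetical helper introduced only for this illustration.

```python
from itertools import product


def answer_table(n_sets):
    """List every combination of Y/N answers for n_sets sets."""
    return list(product("YN", repeat=n_sets))


rows = answer_table(3)
for row in rows:
    print(" ".join(row))

# Read down each column to see the patterns described above.
column_a = [row[0] for row in rows]
column_b = [row[1] for row in rows]
column_c = [row[2] for row in rows]
```

Calling `answer_table(4)` gives 16 rows, in line with the doubling rule for each additional set.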

Set Theory Exercise 2

Click link for Set Theory Exercise 2

Operations on Sets

Just as we can combine two numbers to form a third number, with operations like 'add', 'subtract', 'multiply' and 'divide', so we can combine two sets to form a third set in various ways. We'll begin by looking again at the Venn diagram which shows two sets A and B in a general position, where we don't have any information about how they may be related.

| In A? | In B? | Region |
|---|---|---|
| Y | Y | iii |
| Y | N | ii |
| N | Y | iv |
| N | N | i |

The first two columns in the table above show the four sets of possible answers to the questions Are you in A? and Are you in B? for two sets A and B; the Roman numerals in the third column show the corresponding region in the Venn diagram in Fig. 7.

Intersection

Region iii, where the two loops overlap (the region corresponding to 'Y' followed by 'Y'), is called the intersection of the sets A and B. It is denoted by A ∩ B. So we can define intersection as follows:

- The intersection of two sets A and B, written A ∩ B, is the set of elements that are in A and in B.

(Note that in symbolic logic, a similar symbol, ∧, is used to connect two logical propositions with the AND operator.)

For example, if A = {1, 2, 3, 4} and B = {2, 4, 6, 8}, then A ∩ B = {2, 4}.

We can say, then, that we have combined two sets to form a third set using the operation of intersection.

Union

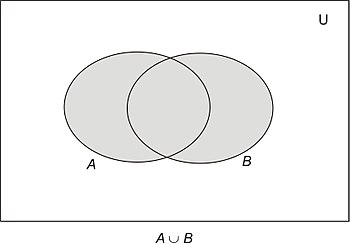

In a similar way we can define the union of two sets as follows:

- The union of two sets A and B, written A ∪ B, is the set of elements that are in A or in B (or both).

The union, then, is represented by regions ii, iii and iv in Fig. 7.

(Again, in logic a similar symbol, ∨, is used to connect two propositions with the OR operator.)

- So, for example, {1, 2, 3, 4} ∪ {2, 4, 6, 8} = {1, 2, 3, 4, 6, 8}.

You'll see, then, that in order to get into the intersection, an element must answer 'Yes' to both questions, whereas to get into the union, either answer may be 'Yes'.

The ∪ symbol looks like the first letter of 'Union' and like a cup that will hold a lot of items. The ∩ symbol looks like a spilled cup that won't hold a lot of items, or possibly the letter 'n', for i'n'tersection. Take care not to confuse the two.

Difference

- The difference of two sets A and B (also known as the set-theoretic difference of A and B, or the relative complement of B in A) is the set of elements that are in A but not in B.

This is written A - B, or sometimes A \ B.

The elements in the difference, then, are the ones that answer 'Yes' to the first question Are you in A?, but 'No' to the second Are you in B?. This combination of answers is on row 2 of the above table, and corresponds to region ii in Fig.7.

- For example, if A = {1, 2, 3, 4} and B = {2, 4, 6, 8}, then A - B = {1, 3}.

Complement

So far, we have considered operations in which two sets combine to form a third: binary operations. Now we look at a unary operation - one that involves just one set.

- The set of elements that are not in a set A is called the complement of A. It is written A′ (or sometimes Aᶜ, or Ā).

Clearly, this is the set of elements that answer 'No' to the question Are you in A?.

- For example, if U = N and A = {odd numbers}, then A′ = {even numbers}.

Notice the spelling of the word complement: its literal meaning is 'a complementary item or items'; in other words, 'that which completes'. So if we already have the elements of A, the complement of A is the set that completes the universal set.
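The four operations can be tried out with Python's set operators. This is a minimal sketch: the sets A and B are the ones used in the examples above, while the universal set `U = {1, ..., 10}` is an assumption made here so that a complement can be computed (Python has no complement operator, so it is written as a difference from U).

```python
U = set(range(1, 11))   # assumed universal set for this illustration
A = {1, 2, 3, 4}
B = {2, 4, 6, 8}

intersection = A & B        # elements in A and in B
union = A | B               # elements in A or in B (or both)
difference = A - B          # elements in A but not in B
complement_of_A = U - A     # elements of U that are not in A

print(intersection, union, difference, complement_of_A)
```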

Properties of set operations

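1. Commutative

- A ∩ B = B ∩ A

- A ∪ B = B ∪ A

2. Associative

- (A ∩ B) ∩ C = A ∩ (B ∩ C)

- (A ∪ B) ∪ C = A ∪ (B ∪ C)

3. Distributive

- A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C)

- A ∪ (B ∩ C) = (A ∪ B) ∩ (A ∪ C)

4. Special properties of complements

- (A ′) ′ = A

- A ∪ A ′ = U

- A ∩ A ′ = ø

5. De Morgan's Law

- (A ∩ B) ′ = A ′ ∪ B ′

- (A ∪ B) ′ = A ′ ∩ B ′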

Summary


Cardinality

Finally, in this section on Set Operations we look at an operation on a set that yields not another set, but an integer.

- The cardinality of a finite set A, written | A | (sometimes #(A) or n(A)), is the number of (distinct) elements in A. So, for example:

- If A = {lower case letters of the alphabet}, | A | = 26.

Generalized set operations

If we want to denote the intersection or union of n sets, A₁, A₂, ..., Aₙ (where we may not know the value of n) then the following generalized set notation may be useful:

- A₁ ∩ A₂ ∩ ... ∩ Aₙ = $\bigcap_{i=1}^{n} A_i$

- A₁ ∪ A₂ ∪ ... ∪ Aₙ = $\bigcup_{i=1}^{n} A_i$

In the symbol $\bigcap_{i=1}^{n} A_i$, then, i is a variable that takes values from 1 to n, to indicate the repeated intersection of all the sets A₁ to Aₙ.
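In Python the repeated intersection or union of a list of sets can be computed in one call. The sketch below is illustrative: the list `sets` and its contents are assumptions chosen for the example, and `set.intersection(*sets)` requires the list to contain at least one set.

```python
# Three example sets standing in for A1, A2, A3 (illustrative values).
sets = [{1, 2, 3, 4}, {2, 3, 4, 5}, {3, 4, 5, 6}]

# Union of all the sets: start from the empty set and add each one.
big_union = set().union(*sets)

# Intersection of all the sets: elements common to every one of them.
big_intersection = set.intersection(*sets)

print(big_union, big_intersection)
```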

Set Theory Exercise 3

Click link for Set Theory Exercise 3

Set Theory Page 2

Power Sets

The power set of a set A is the set of all its subsets (including, of course, itself and the empty set). It is denoted by P(A).

Using set comprehension notation, P(A) can be defined as

- P(A) = { Q | Q ⊆ A }

Example 4

- Write down the power sets of A if:

(a) A = {1, 2, 3}

(b) A = {1, 2}

(c) A = {1}

(d) A = ø

Solution

(a) P(A) = { {1, 2, 3}, {2, 3}, {1, 3}, {1, 2}, {1}, {2}, {3}, ø }

(b) P(A) = { {1, 2}, {1}, {2}, ø }

(c) P(A) = { {1}, ø }

(d) P(A) = { ø }

Cardinality of a Power Set

Look at the cardinality of the four sets in Example 4, and the cardinality of their corresponding power sets. They are:

| | \| A \| | \| P(A) \| |
|---|---|---|
| (a) | 3 | 8 |
| (b) | 2 | 4 |
| (c) | 1 | 2 |
| (d) | 0 | 1 |

Clearly, there's a simple rule at work here: expressed as powers of 2, the cardinalities of the power sets are 2³, 2², 2¹ and 2⁰.

It looks as though we have found a rule that if | A | = k, then | P(A) | = 2ᵏ. But can we see why?

Well, the elements of the power set of A are all the possible subsets of A. Any one of these subsets can be formed in the following way:

- Choose any element of A. We may decide to include this in our subset, or we may omit it. There are therefore 2 ways of dealing with this first element of A.

- Now choose a second element of A. As before, we may include it, or omit it from our subset: again a choice of 2 ways of dealing with this element.

- ...and so on through all k elements of A.

Now the fundamental principle of combinatorics tells us that if we can do each of k things in 2 ways, then the total number of ways of doing them all, one after the other, is 2ᵏ.

Each one of these 2ᵏ combinations of decisions - including elements or omitting them - gives us a different subset of A. There are therefore 2ᵏ different subsets altogether.

So if | A | = k, then | P(A) | = 2ᵏ.
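The include-or-omit argument translates directly into a short program. The sketch below is one possible way to build a power set in Python, not a standard library function: `power_set` is a hypothetical helper, and subsets are stored as `frozenset` objects because ordinary Python sets cannot be elements of another set.

```python
def power_set(a):
    """Build all subsets of a by deciding, element by element, to omit or include."""
    subsets = [frozenset()]
    for element in a:
        # Every subset found so far either omits `element` (kept as it is)
        # or includes it (a new subset), so the count doubles at each step.
        subsets += [s | {element} for s in subsets]
    return set(subsets)


for example in ({1, 2, 3}, {1, 2}, {1}, set()):
    print(len(example), len(power_set(example)))
```

The printed pairs reproduce the table of cardinalities for Example 4.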

The Foundational Rules of Set Theory

The laws listed below can be described as the Foundational Rules of Set Theory. We derive them by going back to the definitions of intersection, union, universal set and empty set, and by considering whether a given element is in, or not in, one or more sets.

The Idempotent Laws

As an example, we'll consider the 'I heard you the first time' Laws – more correctly called the Idempotent Laws - which say that:

- A ∩ A = A and A ∪ A = A

This law might be familiar to you if you've studied logic. The above relationships are comparable to the logical equivalences p ∧ p ≡ p and p ∨ p ≡ p.

These look pretty obvious, don't they? A simple explanation for the intersection part of these laws goes something like this:

The intersection of two sets A and B is defined as just those elements that are in A and in B. If we replace B by A in this definition we get that the intersection of A and A is the set comprising just those elements that are in A and in A. Obviously, we don't need to say this twice (I heard you the first time), so we can simply say that the intersection of A and A is the set of elements in A. In other words:

- A ∩ A = A

We can derive the explanation for A ∪ A = A in a similar way.

De Morgan's Laws

There are two laws, called De Morgan's Laws, which tell us how to remove brackets, when there's a complement symbol - ′ - outside. One of these laws looks like this:

- (A ∪ B) ′ = A ′ ∩ B ′

(If you've done Exercise 3, question 4, you may have spotted this law already from the Venn Diagrams.)

Look closely at how this Law works. The complement symbol after the bracket affects all three symbols inside the bracket when the brackets are removed:

- A becomes A ′

- B becomes B ′

- and ∪ becomes ∩.

To prove this law, note first of all that when we defined a subset we said that if

- A ⊆ B and B ⊆ A, then A = B

So we prove:

- (i) (A ∪ B) ′ ⊆ A ′ ∩ B ′

and then the other way round:

- (ii) A ′ ∩ B ′ ⊆ (A ∪ B) ′

The proof of (i) goes like this:

Let's pick an element at random x ∈ (A ∪ B) ′. We don't know anything about x; it could be a number, a function, or indeed an elephant. All we do know about x, is that

- x ∈ (A ∪ B) ′

So

- x ∉ (A ∪ B)

because that's what complement means.

This means that x answers No to both questions Are you in A? and Are you in B? (otherwise it would be in the union of A and B). Therefore

- x ∉ A and x ∉ B

Applying complements again we get

- x ∈ A ′ and x ∈ B ′

Finally, if something is in two sets, it must be in their intersection, so

- x ∈ A ′ ∩ B ′

So, any element we pick at random from (A ∪ B) ′ is definitely in A ′ ∩ B ′. So by definition

- (A ∪ B) ′ ⊆ A ′ ∩ B ′

The proof of (ii) is similar:

First, we pick an element at random from the first set, x ∈ A ′ ∩ B ′

Using what we know about intersections, that means

- x ∈ A ′ and x ∈ B ′

So, using what we know about complements,

- x ∉ A and x ∉ B

And if something is in neither A nor B, it can't be in their union, so

- x ∉ A ∪ B

So, finally:

- x ∈ (A ∪ B) ′

So:

- A ′ ∩ B ′ ⊆ (A ∪ B) ′

We've now proved (i) and (ii), and therefore:

- (A ∪ B) ′ = A ′ ∩ B ′
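A computer check is no substitute for the proof above, but it can build confidence in the result. The sketch below tests both of De Morgan's Laws for every pair of subsets of a small universal set; the choice `U = {0, 1, 2, 3}` and the helper names `subsets` and `complement` are assumptions made for this illustration.

```python
from itertools import combinations

U = frozenset(range(4))   # a small, assumed universal set


def subsets(s):
    """Return every subset of s as a list of frozensets."""
    items = sorted(s)
    return [
        frozenset(c)
        for r in range(len(items) + 1)
        for c in combinations(items, r)
    ]


def complement(s):
    """Complement of s relative to the universal set U."""
    return U - s


de_morgan_holds = all(
    complement(a | b) == complement(a) & complement(b)
    and complement(a & b) == complement(a) | complement(b)
    for a in subsets(U)
    for b in subsets(U)
)

print(de_morgan_holds)
```

The check covers all 16 × 16 pairs of subsets of this particular U only; the element-by-element argument is what establishes the law for all sets.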

This gives you a taste for what's behind these laws. So here they all are.

The Laws of Sets

Commutative Laws

- A ∩ B = B ∩ A

- A ∪ B = B ∪ A

Associative Laws

- (A ∩ B) ∩ C = A ∩ (B ∩ C)

- (A ∪ B) ∪ C = A ∪ (B ∪ C)

Distributive Laws

- A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C)

- A ∪ (B ∩ C) = (A ∪ B) ∩ (A ∪ C)

Idempotent Laws

- A ∩ A = A

- A ∪ A = A

Identity Laws

- A ∪ ø = A

- A ∩ U = A

- A ∪ U = U

- A ∩ ø = ø

Involution Law

- (A ′) ′ = A

Complement Laws

- A ∪ A' = U

- A ∩ A' = ø

- U ′ = ø

- ø ′ = U

De Morgan’s Laws

- (A ∩ B) ′ = A ′ ∪ B ′

- (A ∪ B) ′ = A ′ ∩ B ′

Duality and Boolean Algebra

You may notice that the above Laws of Sets occur in pairs: if in any given law, you exchange ∪ for ∩ and vice versa (and, if necessary, swap U and ø) you get another of the laws. The 'partner laws' in each pair are called duals, each law being the dual of the other.

- For example, each of De Morgan's Laws is the dual of the other.

- The first complement law, A ∪ A ′ = U, is the dual of the second: A ∩ A ′ = ø.

- ... and so on.

This is called the Principle of Duality. In practical terms, it means that you only need to remember half of this table!

This set of laws constitutes the axioms of a Boolean Algebra. See Boolean Algebra for more.

Proofs using the Laws of Sets

We may use these laws - and only these laws - to determine whether other statements about the relationships between sets are true or false. Venn diagrams may be helpful in suggesting such relationships, but only a proof based on these laws will be accepted by mathematicians as rigorous.

Example 5

Using the Laws of Sets, prove that the set (A ∪ B) ∩ (A ′ ∩ B) ′ is simply the same as the set A itself. State carefully which Law you are using at each stage.

Before we begin the proof, a few dos and don'ts:

| Do | Don't |
|---|---|
| Do start with the single expression (A ∪ B) ∩ (A ′ ∩ B) ′, and aim to change it into simply A. | Don’t begin by writing down the whole equation (A ∪ B) ∩ (A ′ ∩ B) ′ = A – that’s what we must end up with. |
| Do change just one part of the expression at a time, using just one of the set laws at a time. | Don't miss steps out, and change two things at once. |
| Do keep the equals signs underneath one another. | Don't allow your work to become untidy and poorly laid out. |
| Do state which law you have used at each stage. | Don't take even the simplest step for granted. |

Solution

| Expression | Step | Law Used |
|---|---|---|
| (A ∪ B) ∩ (A ′ ∩ B) ′ | = (A ∪ B) ∩ ((A ′) ′ ∪ B ′) | De Morgan’s |
| | = (A ∪ B) ∩ (A ∪ B ′) | Involution |
| | = A ∪ (B ∩ B ′) | Distributive |
| | = A ∪ ø | Complement |
| | = A | Identity |

We have now proved that (A ∪ B) ∩ (A ′ ∩ B) ′ = A whatever the sets A and B contain. A statement like this – one that is true for all values of A and B – is sometimes called an identity.
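Each line of the proof can also be sanity-checked by brute force. The sketch below evaluates every line of the solution for all pairs of subsets of a small universal set and confirms that the lines always agree; the universe `{1, 2, 3}` and the helper names `subsets`, `comp` and `proof_lines` are assumptions introduced for this illustration, and the check does not replace the proof by the Laws of Sets.

```python
from itertools import combinations

U = frozenset({1, 2, 3})   # a small, assumed universal set
EMPTY = frozenset()


def subsets(s):
    """Return every subset of s as a list of frozensets."""
    items = sorted(s)
    return [
        frozenset(c)
        for r in range(len(items) + 1)
        for c in combinations(items, r)
    ]


def comp(s):
    """Complement of s relative to U."""
    return U - s


def proof_lines(a, b):
    """Evaluate each line of the Example 5 solution for given sets a and b."""
    return [
        (a | b) & comp(comp(a) & b),            # starting expression
        (a | b) & (comp(comp(a)) | comp(b)),    # De Morgan's
        (a | b) & (a | comp(b)),                # Involution
        a | (b & comp(b)),                      # Distributive
        a | EMPTY,                              # Complement
        a,                                      # Identity
    ]


identity_holds = all(
    len(set(proof_lines(a, b))) == 1   # all six lines give the same set
    for a in subsets(U)
    for b in subsets(U)
)

print(identity_holds)
```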

Hints on Proofs

There are no foolproof methods with these proofs – practice is the best guide. But here are a few general hints.

- Start with the more complicated side of the equation, aiming to simplify it into the other side.

- Look for places where the Distributive Law will result in a simplification (like factorising in 'ordinary' algebra - see the third line in Example 5 above).

- You’ll probably use De Morgan’s Law to get rid of a ' symbol from outside a bracket.

- Sometimes you may need to 'complicate' an expression before you can simplify it, as the next example shows.

Example 6

Use the Laws of Sets to prove that A ∪ (A ∩ B) = A.

Looks easy, doesn’t it? But you can go round in circles with this one. (Try it first before reading the solution below, if you like.) The key is to 'complicate' it a bit first, by writing the first A as A ∩ U (using one of the Identity Laws).

Solution

| Expression | Step | Law Used |
|---|---|---|
| A ∪ (A ∩ B) | = (A ∩ U) ∪ (A ∩ B) | Identity |
| | = A ∩ (U ∪ B) | Distributive |
| | = A ∩ (B ∪ U) | Commutative |
| | = A ∩ U | Identity |
| | = A | Identity |

Set Theory Exercise 4

Click link for Set Theory Exercise 4.

Cartesian Products

Ordered pair

To introduce this topic, we look first at a couple of examples that use the principle of combinatorics that we noted earlier (see Cardinality); namely, that if an event R can occur in r ways and a second event S can then occur in s ways, then the total number of ways that the two events, R followed by S, can occur is r × s. This is sometimes called the r-s Principle.

Example 7

| MENU |
|---|
| Main Course |
| Poached Halibut |
| Roast Lamb |
| Vegetable Curry |
| Lasagne |
| Dessert |
| Fresh Fruit Salad |
| Apple Pie |
| Gateau |

How many different meals – Main Course followed by Dessert - can be chosen from the above menu?

Solution

Since we may choose the main course in four ways, and then the dessert in three ways to form a different combination each time, the answer, using the r-s Principle, is that there are 4 × 3 = 12 different meals.

Example 8

You're getting ready to go out. You have 5 different (clean!) pairs of underpants and two pairs of jeans to choose from. In how many ways can you choose to put on a pair of pants and some jeans?

Solution

Using the r-s Principle, there are 5 × 2 = 10 ways in which the two can be chosen, one after the other.

In each of the two situations above, we have examples of ordered pairs. As the name says, an ordered pair is simply a pair of 'things' arranged in a certain order. Often the order in which the things are chosen is arbitrary – we simply have to decide on a convention, and then stick to it; sometimes the order really only works one way round.

In the case of the meals, most people choose to eat their main course first, and then dessert. In the clothes we wear, we put on our underpants before our jeans. You are perfectly free to fly in the face of convention and have your dessert before the main course - or to wear your underwear on top of your trousers - but you'll end up with different sets of ordered pairs if you do. And, of course, you'll usually have a lot of explaining to do!

The two 'things' that make up an ordered pair are written in round brackets, and separated by a comma; like this:

- (Lasagne, Gateau)

You will have met ordered pairs before if you've done coordinate geometry. For example, (2, 3) represents the point 2 units along the x-axis and 3 units up the y-axis. Here again, there's a convention at work in the order of the numbers: we use alphabetical order and put the x-coordinate before the y-coordinate. (Again, you could choose to do your coordinate geometry the other way round, and put y before x, but once again, you'd have a lot of explaining to do!)

Using set notation, then, we could describe the situation in Example 7 like this:

- M = {main courses}, D = {desserts}, C = {complete meals}.

Then C could be written as:

- C = { (m, d) | m ∈ M and d ∈ D }.

C is called the set product or Cartesian product of M and D, and we write:

- C = M × D

(read 'C equals M cross D')

Suppose that the menu in Example 7 is expanded to include a starter, which is either soup or fruit juice. How many complete meals can be chosen now?

Well, we can extend the r-s Principle to include this third choice, and get 2 × 4 × 3 = 24 possible meals.

If S = {soup, fruit juice}, then we can write:

- C = S × M × D

An element of this set is an ordered triple: (starter, main course, dessert). Notice, then, that the order in which the individual items occur in the triple is the same as the order of the sets from which they are chosen: S × M × D does not give us the same set of ordered triples as M × D × S.
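The menu example can be reproduced with `itertools.product`, which generates exactly the ordered pairs or triples of a Cartesian product. This is a sketch for illustration; the variable names are assumptions, and lists are used for S, M and D simply to keep the printed order predictable.

```python
from itertools import product

S = ["Soup", "Fruit Juice"]
M = ["Poached Halibut", "Roast Lamb", "Vegetable Curry", "Lasagne"]
D = ["Fresh Fruit Salad", "Apple Pie", "Gateau"]

# M x D: every ordered pair (main course, dessert).
meals = set(product(M, D))

# S x M x D: every ordered triple (starter, main course, dessert).
full_meals = set(product(S, M, D))

# M x D x S has the same number of triples, but they are different triples.
reordered = set(product(M, D, S))

print(len(meals), len(full_meals))
print(("Lasagne", "Gateau") in meals)
print(full_meals == reordered)
```

The counts agree with the r-s Principle (4 × 3 and 2 × 4 × 3), and the final comparison shows that the order of the sets in the product matters.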

Ordered n-tuples

In general, if we have n sets: A₁, A₂, ..., Aₙ, then their Cartesian product is defined by:

- A₁ × A₂ × ... × Aₙ = { (a₁, a₂, ..., aₙ) | a₁ ∈ A₁, a₂ ∈ A₂, ..., aₙ ∈ Aₙ }

and (a₁, a₂, ..., aₙ) is called an ordered n-tuple.

Notation

A₁ × A₂ × ... × Aₙ is sometimes written:

- $\prod_{i=1}^{n} A_i$

The Cartesian Plane


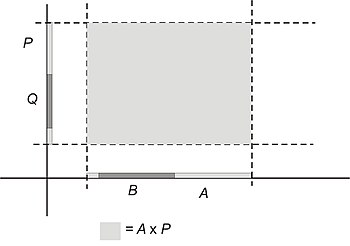

You probably know already the way in which a point in a plane may be represented by an ordered pair. The diagram in Fig. 8 illustrates a Cartesian Plane, showing the point represented by the ordered pair (5, 2).

The lines are called axes: the x-axis and the y-axis. The point where they meet is called the origin. The point (5, 2) is then located as follows: start at the origin; move 5 units in the direction of the x-axis, and then 2 units in the direction of the y-axis.

Using set notation:

If X = {numbers on the x-axis} and Y = {numbers on the y-axis}, then:

- (5, 2) ∈ X × Y

and, indeed, if X = {all real numbers}, and Y = {all real numbers} then X × Y as a whole represents all the points in the x-y plane.

(This is why you will sometimes see the x-y plane referred to as R², where R = {real numbers}.)

Example 9

It is believed that, if A, B, P and Q are sets where B ⊂ A and Q ⊂ P, then:

- B × Q ⊂ A × P

Use a carefully shaded and labelled Cartesian diagram to investigate this proposition.

Solution

Bearing in mind what we said above about the ordered pairs in X × Y corresponding to points in the x-y plane, if we want to represent a Cartesian product like A × P on a diagram, we shall need to represent the individual sets A and P as sets of points on the x- and y- axes respectively.

The region representing the Cartesian product A × P is then represented by the points whose x- and y-coordinates lie within these sets A and P. Like this:

The same can also be said about B and Q: B must lie on the x-axis, and Q on the y-axis.

In addition, since B ⊂ A, then we must represent B as a set chosen from within the elements of A. Similarly, since Q ⊂ P, the elements of Q must lie within the elements of P.

When we add these components on to the diagram it looks like this:

Finally, when we represent the set B × Q as a rectangle whose limits are determined by the limits of B and Q, it is clear that this rectangle will lie within the rectangle representing A × P:

So, the proposition B × Q ⊂ A × P appears to be true.

Set Theory Exercise 5

Click link for Set Theory Exercise 5.

Functions and relations

Introduction

This article examines the concepts of a function and a relation.

A relation is any association or link between elements of one set, called the domain or (less formally) the set of inputs, and another set, called the range or set of outputs. Some people mistakenly refer to the range as the codomain, but as we will see, the codomain really means the set of all possible outputs, including values that the relation does not actually use. (Beware: some authors do not use the term codomain, and use the term range for this purpose instead. Those authors use the term image for what we are calling the range. So while it is a mistake to refer to the range or image as the codomain, it is not necessarily a mistake to refer to the codomain as the range.)

For example, if the domain is a set Fruits = {apples, oranges, bananas} and the codomain is a set Flavors = {sweetness, tartness, bitterness}, the flavors of these fruits form a relation: we might say that apples are related to (or associated with) both sweetness and tartness, while oranges are related to tartness only and bananas to sweetness only. (We might disagree somewhat, but that is irrelevant to the topic of this book.) Notice that "bitterness", although it is one of the possible Flavors (the codomain), is not really used for any of these relationships; so it is not part of the range (or image) {sweetness, tartness}.

Another way of looking at this is to say that a relation is a subset of ordered pairs drawn from the set of all possible ordered pairs (of elements of two other sets, which we normally refer to as the Cartesian product of those sets). Formally, R is a relation if

- R ⊆ X × Y = { (x, y) | x ∈ X, y ∈ Y }

for the domain X and codomain Y. The inverse relation of R, which is written as R⁻¹, is what we get when we interchange the X and Y values:

- R⁻¹ = { (y, x) | (x, y) ∈ R }

Using the example above, we can write the relation in set notation: {(apples, sweetness), (apples, tartness), (oranges, tartness), (bananas, sweetness)}. The inverse relation, which we could describe as "fruits of a given flavor", is {(sweetness, apples), (sweetness, bananas), (tartness, apples), (tartness, oranges)}. (Here, as elsewhere, the order of elements in a set has no significance.)
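The fruit example can be sketched in Python by storing a relation as a set of ordered pairs. The variable names (`fruits`, `flavors`, `R`, `R_inv`, `image`) are choices made for this illustration only.

```python
fruits = {"apples", "oranges", "bananas"}            # domain
flavors = {"sweetness", "tartness", "bitterness"}    # codomain

# The relation "fruit has flavor" as a set of ordered pairs.
R = {("apples", "sweetness"), ("apples", "tartness"),
     ("oranges", "tartness"), ("bananas", "sweetness")}

# A relation is a subset of the Cartesian product of domain and codomain.
cartesian = {(x, y) for x in fruits for y in flavors}
print(R <= cartesian)          # True

# The inverse relation swaps the two components of every pair.
R_inv = {(y, x) for (x, y) in R}
print(("sweetness", "bananas") in R_inv)   # True

# The range (image) contains only the outputs actually used.
image = {y for (_, y) in R}
print("bitterness" in image)   # False
```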

One important kind of relation is the function. A function is a relation that has exactly one output for every possible input in the domain. (The domain does not necessarily have to include all possible objects of a given type. In fact, we sometimes intentionally use a restricted domain in order to satisfy some desirable property.) The relations discussed above (flavors of fruits and fruits of a given flavor) are not functions: the first has two possible outputs for the input "apples" (sweetness and tartness); and the second has two outputs for both "sweetness" (apples and bananas) and "tartness" (apples and oranges).

The main reason for not allowing multiple outputs with the same input is that it lets us apply the same function to different forms of the same thing without changing their equivalence. That is, if f is a function with a (or b) in its domain, then a = b implies that f(a) = f(b). For example, z - 3 = 5 implies that z = 8 because f(x) = x + 3 is a function unambiguously defined for all numbers x.

The converse, that f(a) = f(b) implies a = b, is not always true. When it is, there is never more than one input x for a certain output y = f(x). This is the same as the definition of function, but with the roles of X and Y interchanged; so it means the inverse relation f⁻¹ must also be a function. In general, regardless of whether or not the original relation was a function, the inverse relation will sometimes be a function, and sometimes not.

When f and f⁻¹ are both functions, they are called one-to-one, injective, or invertible functions. This is one of two very important properties a function f might (or might not) have; the other property is called onto or surjective, which means, for any y ∈ Y (in the codomain), there is some x ∈ X (in the domain) such that f(x) = y. In other words, a surjective function f maps onto every possible output at least once.

A function can be neither one-to-one nor onto, both one-to-one and onto (in which case it is also called bijective or a one-to-one correspondence), or just one and not the other. (As an example which is neither, consider f = {(0,2), (1,2)}. It is a function, since there is only one y value for each x value; but there is more than one input x for the output y = 2; and it clearly does not "map onto" all integers.)
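The following sketch tests these properties for a function stored as a set of (input, output) pairs. The helper names and the finite codomain {0, 1, 2} are assumptions made for the illustration; the text itself speaks of all integers as the codomain.

```python
def is_function(pairs, domain):
    """True if every input in `domain` has exactly one output in `pairs`."""
    return all(len({y for (x, y) in pairs if x == a}) == 1 for a in domain)


def is_injective(pairs):
    """True if no output is shared by two different inputs (assumes a function)."""
    outputs = [y for (_, y) in pairs]
    return len(outputs) == len(set(outputs))


def is_surjective(pairs, codomain):
    """True if every element of `codomain` appears as an output."""
    return {y for (_, y) in pairs} == set(codomain)


f = {(0, 2), (1, 2)}                 # the example from the text
print(is_function(f, {0, 1}))        # True
print(is_injective(f))               # False: 0 and 1 share the output 2
print(is_surjective(f, {0, 1, 2}))   # False: 0 and 1 are never produced

g = {(0, 1), (1, 0)}                 # swaps 0 and 1: a bijection on {0, 1}
print(is_function(g, {0, 1}), is_injective(g), is_surjective(g, {0, 1}))
```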

Relations

In the above section dealing with functions and their properties, we noted the important property that all functions must have, namely that if a function does map a value from its domain to its co-domain, it must map this value to only one value in the co-domain.

Writing in set notation, if a is some fixed value:

- |{f(x)|x=a}| ∈ {0, 1}

The literal reading of this statement is: the cardinality (number of elements) of the set of all values f(x), such that x=a for some fixed value a, is an element of the set {0, 1}. In other words, the number of outputs that a function f may have at any fixed input a is either zero (in which case it is undefined at that input) or one (in which case the output is unique).

However, when we consider the relation, we relax this restriction, and so a relation may map one value to more than one other value. In general, a relation is any subset of the Cartesian product of its domain and co-domain.

All functions, then, can be considered as relations also.

Notations

When we have the property that one value is related to another, we call this relation a binary relation and we write it as

- x R y

where R is the relation.

For arrow diagrams and set notation, remember that relations do not carry the restriction that functions do: in an arrow diagram we may draw more than one arrow from the same value, and in set notation we simply write down all the ordered pairs that belong to the relation. For example,

- f = {(0,0),(1,1),(1,-1),(2,2),(2,-2)}

is a relation and not a function, since both 1 and 2 are mapped to two values (1 and -1, and 2 and -2 respectively).

As another example, let A = {2, 3, 5} and B = {4, 6, 9}. Then A × B = {(2,4),(2,6),(2,9),(3,4),(3,6),(3,9),(5,4),(5,6),(5,9)}, and R = {(2,4),(2,6),(3,6),(3,9)} is a relation between A and B, since it is a subset of A × B. (Here x R y holds exactly when x divides y.)

Some simple examples

Let us examine some simple relations.

Say f is defined by

- {(0,0),(1,1),(2,2),(3,3),(1,2),(2,3),(3,1),(2,1),(3,2),(1,3)}

This is a relation (not a function) since we can observe that 1 maps to 2 and 3, for instance.

Less-than, "<", is also a relation. A fixed number is less than many other numbers (for example, 1 < 2 and 1 < 3), so one input is related to more than one output and "<" cannot be a function.

Properties

When we are looking at relations, we can observe some special properties different relations can have.

Reflexive

A relation is reflexive if, for all values a:

- a R a

In other words, all values are related to themselves.

The relation of equality, "=" is reflexive. Observe that for, say, all numbers a (the domain is R):

- a = a

so "=" is reflexive.

In a reflexive relation, we have arrows for all values in the domain pointing back to themselves:

Note that ≤ is also reflexive (a ≤ a for any a in R). On the other hand, the relation < is not (a < a is false for any a in R).

Symmetric

A relation is symmetric if, for all values a and b:

- a R b implies b R a

The relation of equality is again symmetric: if x = y, we can also write y = x.

In a symmetric relation, for each arrow we have also an opposite arrow, i.e. there is either no arrow between x and y, or an arrow points from x to y and an arrow back from y to x:

Neither ≤ nor < is symmetric (2 ≤ 3 and 2 < 3 but neither 3 ≤ 2 nor 3 < 2 is true).

Transitive

A relation is transitive if for all values a, b, c:

- a R b and b R c implies a R c

The relation greater-than ">" is transitive. If x > y, and y > z, then it is true that x > z. This becomes clearer when we write down what is happening into words. x is greater than y and y is greater than z. So x is greater than both y and z.

The relation is-not-equal "≠" is not transitive. If x ≠ y and y ≠ z then we might have x = z or x ≠ z. For example, 1 ≠ 2, 2 ≠ 3 and 1 ≠ 3; but 0 ≠ 1 and 1 ≠ 0, while 0 = 0.

In the arrow diagram, every arrow between two values a and b, and b and c, has an arrow going straight from a to c.

Antisymmetric

A relation is antisymmetric if, for all values a and b:

- a R b and b R a implies that a = b

Notice that antisymmetric is not the same as "not symmetric."

Take the relation greater than or equal to, "≥". If x ≥ y and y ≥ x, then y must be equal to x, so "≥" is antisymmetric. In terms of ordered pairs, a relation R on a set A is antisymmetric if and only if, for all a, b ∈ A, (a, b) ∈ R and (b, a) ∈ R together imply a = b.

Trichotomy

A relation satisfies trichotomy if, for all values a and b, it holds that a R b or b R a. (This property is more commonly called totality; the classical law of trichotomy instead says that exactly one of a < b, a = b, b < a holds.)

The relation is-greater-or-equal satisfies it since, given two real numbers a and b, a ≥ b or b ≥ a (both if a = b).
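For a relation on a small finite set, each of these properties can be tested directly from its definition. The sketch below stores a relation as a set of ordered pairs; the function names and the three sample relations (divisibility on {1, ..., 6}, "≠" and "≥" on {0, 1, 2}) are choices made for this illustration.

```python
def is_reflexive(R, A):
    return all((a, a) in R for a in A)


def is_symmetric(R):
    return all((b, a) in R for (a, b) in R)


def is_transitive(R):
    return all((a, d) in R for (a, b) in R for (c, d) in R if b == c)


def is_antisymmetric(R):
    return all(a == b for (a, b) in R if (b, a) in R)


def is_total(R, A):
    """The 'a R b or b R a' property described above."""
    return all((a, b) in R or (b, a) in R for a in A for b in A)


A = {1, 2, 3, 4, 5, 6}
divides = {(a, b) for a in A for b in A if b % a == 0}

B = {0, 1, 2}
not_equal = {(a, b) for a in B for b in B if a != b}
geq = {(a, b) for a in B for b in B if a >= b}

# Order of the printed flags: reflexive, symmetric, transitive, antisymmetric
print(is_reflexive(divides, A), is_symmetric(divides),
      is_transitive(divides), is_antisymmetric(divides))      # True False True True
print(is_reflexive(not_equal, B), is_symmetric(not_equal),
      is_transitive(not_equal), is_antisymmetric(not_equal))  # False True False False
print(is_total(geq, B), is_total(divides, A))                 # True False
```

These checks only work for finite sets, since they enumerate every pair; for relations on all real numbers the properties have to be proved, not tested.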

Problem set

Given the above information, determine which of the properties reflexive, symmetric, transitive, and antisymmetric each of the following relations has; there may be more than one. (Answers follow.) x R y if

- x = y

- x < y

- x² = y²

- x ≤ y

Answers

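- Symmetric, reflexive, transitive, and antisymmetric

- Transitive and antisymmetric (vacuously, since x < y and y < x never hold together). It is not reflexive, and it does not satisfy trichotomy as defined above, because a < a is false.

- Symmetric, reflexive, and transitive (x² = y² compares x and y through the equality of their squares, so it inherits these three properties from "="). It is not antisymmetric: 1 R -1 and -1 R 1, but 1 ≠ -1.

- Reflexive, transitive, and antisymmetric (and satisfying trichotomy)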

Equivalence relations

We have seen that certain common relations such as "=", and congruence (which we will deal with in the next section) obey some of these rules above. The relations we will deal with are very important in discrete mathematics, and are known as equivalence relations. They essentially assert some kind of equality notion, or equivalence, hence the name.

Characteristics of equivalence relations

For a relation R to be an equivalence relation, it must have the following properties, viz. R must be:

- symmetric

- transitive

- reflexive

(A helpful mnemonic, S-T-R)

In the previous problem set you have shown equality, "=", to be reflexive, symmetric, and transitive. So "=" is an equivalence relation.

We denote an equivalence relation, in general, by ~.

Example proof

Say we are asked to prove that "=" is an equivalence relation. We then proceed to prove each property above in turn (often, the proof of transitivity is the hardest).

- Reflexive: Clearly, it is true that a = a for all values a. Therefore, = is reflexive.

- Symmetric: If a = b, it is also true that b = a. Therefore, = is symmetric

- Transitive: If a = b and b = c, this says that a is the same as b which in turn is the same as c. So a is then the same as c, so a = c, and thus = is transitive.

Thus = is an equivalence relation.

Partitions and equivalence classes

It is true that when we are dealing with relations, we may find that many values are related to one fixed value.

For example, consider congruence: given some number a, a number b is congruent to a modulo n if b leaves the same remainder as a when divided by n, which we write

- a ≡ b (mod n)

and is the same as writing

- b = a+kn for some integer k.

(We will look into congruences in further detail later, but a simple examination or understanding of this idea will be interesting in its application to equivalence relations)

For example, 2 ≡ 0 (mod 2), since the remainder on dividing 2 by 2 is in fact 0, as is the remainder on dividing 0 by 2.

We can show that congruence is an equivalence relation. (This is left as an exercise below. Hint: use the equivalent form of congruence described above.)

However, what is more interesting is that we can group all numbers that are equivalent to each other.

With the relation congruence modulo 2 (which is using n=2, as above), or more formally:

- x ~ y if and only if x ≡ y (mod 2)

we can group all numbers that are equivalent to each other. Observe:

- ... ≡ -2 ≡ 0 ≡ 2 ≡ 4 ≡ ... (mod 2)

- ... ≡ -1 ≡ 1 ≡ 3 ≡ 5 ≡ ... (mod 2)

The first equation above tells us that all the even numbers are equivalent to each other under ~, and the second tells us the same for all the odd numbers.

We can write this in set notation. However, we have a special notation. We write:

- [0]={...,-2,0,2,4,...}

- [1]={...,-1,1,3,5,...}

and we call these two sets equivalence classes.

All elements in an equivalence class by definition are equivalent to each other, and thus note that we do not need to include [2], since 2 ~ 0.

We call the act of doing this 'grouping' with respect to some equivalence relation partitioning (or further and explicitly partitioning a set S into equivalence classes under a relation ~). Above, we have partitioned Z into equivalence classes [0] and [1], under the relation of congruence modulo 2.
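A small sketch of this grouping in Python is given below. The function `equivalence_classes` and the predicate `same_parity` are hypothetical names introduced here; the function assumes that the predicate it is given really is an equivalence relation, so comparing against one representative per class is enough.

```python
def equivalence_classes(elements, related):
    """Group `elements` into classes; `related(x, y)` must be an equivalence relation."""
    classes = []
    for x in elements:
        for cls in classes:
            if related(x, cls[0]):   # compare with one representative of the class
                cls.append(x)
                break
        else:
            classes.append([x])      # x starts a new class
    return classes


def same_parity(x, y):
    """x ~ y if and only if x ≡ y (mod 2)."""
    return (x - y) % 2 == 0


print(equivalence_classes(range(-4, 5), same_parity))
# [[-4, -2, 0, 2, 4], [-3, -1, 1, 3]]
```

Every element lands in exactly one class, which is what makes the result a partition of the original set.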

Problem set

Given the above, answer the following questions on equivalence relations. (Answers follow for even-numbered questions.)

1. Prove that congruence is an equivalence relation (see the hint above).

2. Partition {x | 1 ≤ x ≤ 9} into equivalence classes under the equivalence relation x ~ y if and only if x ≡ y (mod 6).

Answers

2. [0]={6}, [1]={1,7}, [2]={2,8}, [3]={3,9}, [4]={4}, [5]={5}

Partial orders

We also see that "≥" and "≤" obey some of the rules above. Are these special kinds of relations too, like equivalence relations? Yes, in fact, these relations are specific examples of another special kind of relation which we will describe in this section: the partial order.

As the name suggests, this relation gives some kind of ordering to numbers.

Characteristics of partial orders

For a relation R to be a partial order, it must have the following three properties, viz. R must be:

- reflexive

- antisymmetric

- transitive

(A helpful mnemonic, R-A-T)

We denote a partial order, in general, by ≼.

Question:

- Suppose R is the relation on the set of integers Z defined by a R b if and only if a = bʳ for some positive integer r. Prove that R is a partial order on Z.

Example proof

Say we are asked to prove that "≤" is a partial order. We then proceed to prove each property above in turn (often, the proof of transitivity is the hardest).

Reflexive

Clearly, it is true that a ≤ a for all values a. So ≤ is reflexive.

Antisymmetric

If a ≤ b and b ≤ a, then a must be equal to b. So ≤ is antisymmetric.

Transitive

If a ≤ b and b ≤ c, this says that a is less than or equal to b, which in turn is less than or equal to c. So a is less than or equal to c, that is, a ≤ c, and thus ≤ is transitive.

Thus ≤ is a partial order.

Problem set

Given the above on partial orders, answer the following questions.

1. Prove that divisibility, |, is a partial order on the positive integers (a | b means that a is a factor of b, i.e., on dividing b by a, no remainder results).

2. Prove that the following relation is a partial order: (a, b) ≼ (c, d) if and only if ab ≤ cd, for integers a, b, c, d ranging from 0 to 5.

Answers

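2. Simple proof; formalization of the proof is an optional exercise.

- Reflexivity: (a, b) ≼ (a, b) since ab = ab.

- Antisymmetry: if (a, b) ≼ (c, d) and (c, d) ≼ (a, b), then ab ≤ cd and cd ≤ ab, which imply ab = cd. Note that this gives (a, b) = (c, d) only if pairs with equal products are regarded as the same element; for example, (1, 4) and (2, 2) both have product 4.

- Transitivity: (a, b) ≼ (c, d) and (c, d) ≼ (e, f) imply (a, b) ≼ (e, f), since ab ≤ cd ≤ ef and thus ab ≤ ef.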

Posets

A partial order imparts some kind of "ordering" amongst elements of a set. For example, we only know that 2 ≥ 1 because of the partial ordering ≥.

We call a set A, ordered under a general partial ordering ≼, a partially ordered set, or simply a poset, and write it (A, ≼).

Terminology

There is some specific terminology that will help us understand and visualize partial orders.

When we have a partial order ≼ such that a ≼ b, we write a ≺ b to say that a ≼ b but a ≠ b. We say in this instance that a precedes b, or a is a predecessor of b.

If (A, ≼) is a poset, we say that a is an immediate predecessor of b (or a immediately precedes b) if a ≺ b and there is no x in A such that a ≺ x ≺ b.

If we have the same poset, and we also have a and b in A, then we say a and b are comparable if a ≼ b or b ≼ a. Otherwise they are incomparable.

Hasse diagrams

Hasse diagrams are special diagrams that enable us to visualize the structure of a partial ordering. They use some of the concepts in the previous section to draw the diagram.

A Hasse diagram of the poset (A, ≼) is constructed by

- placing elements of A as points

- if a and b ∈ A, and a is an immediate predecessor of b, we draw a line from a to b

- if a ≺ b, put the point for a lower than the point for b

- not drawing loops from a to a (this is assumed in a partial order because of reflexivity)
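The lines of a Hasse diagram are exactly the "immediate predecessor" pairs, so they can be computed for a small poset. The sketch below uses divisibility on the divisors of 12 as an example; the function name `hasse_edges` and the choice of poset are assumptions made for this illustration.

```python
def hasse_edges(A, leq):
    """Return the pairs (a, b) in which a is an immediate predecessor of b."""
    def lt(a, b):
        return a != b and leq(a, b)   # strict version of the order

    return sorted(
        (a, b) for a in A for b in A
        if lt(a, b) and not any(lt(a, x) and lt(x, b) for x in A)
    )


def divides(a, b):
    return b % a == 0


A = [1, 2, 3, 4, 6, 12]
print(hasse_edges(A, divides))
# [(1, 2), (1, 3), (2, 4), (2, 6), (3, 6), (4, 12), (6, 12)]
```

Pairs such as (1, 4) or (2, 12) are left out because another element lies strictly between them, and no loops (a, a) appear.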

Operations on Relations

There are some useful operations one can perform on relations, which allow us to express some of the above-mentioned properties more briefly.

Inversion

Let R be a relation; then its inversion, R⁻¹, is defined by

R⁻¹ := {(a,b) | (b,a) in R}.

Concatenation

Let R be a relation between the sets A and B, and S be a relation between B and C. We can concatenate these relations by defining

R • S := {(a,c) | (a,b) in R and (b,c) in S for some b in B}

Diagonal of a Set

Let A be a set; then we define the diagonal (D) of A by

D(A) := {(a,a) | a in A}

Shorter Notations

Using the above definitions, one can say (let us assume R is a relation between A and B, with A = B wherever R • R or D(A) is compared with R):

R is transitive if and only if R • R is a subset of R.

R is reflexive if and only if D(A) is a subset of R.

R is symmetric if and only if R⁻¹ is a subset of R.

R is antisymmetric if and only if the intersection of R and R⁻¹ is a subset of D(A).

R is irreflexive if and only if the intersection of D(A) and R is empty, and asymmetric if and only if the intersection of R and R⁻¹ is empty.

R is a function if and only if R⁻¹ • R is a subset of D(B).

In this case it is a function A → B. Let's assume R meets the condition of being a function, then

R is injective if and only if R • R⁻¹ is a subset of D(A).

R is surjective if and only if {b | (a,b) in R} = B.
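These operations translate almost literally into Python set comprehensions. In the sketch below the function names `inverse`, `compose` and `diagonal`, and the sample relations `leq` and `f`, are choices made for illustration; `compose(R, S)` follows the definition of R • S given above.

```python
def inverse(R):
    return {(b, a) for (a, b) in R}


def compose(R, S):
    """R • S: pairs (a, c) with (a, b) in R and (b, c) in S for some b."""
    return {(a, c) for (a, b) in R for (b2, c) in S if b == b2}


def diagonal(A):
    return {(a, a) for a in A}


A = {1, 2, 3}
leq = {(a, b) for a in A for b in A if a <= b}    # "≤" on A

print(compose(leq, leq) <= leq)               # True:  transitive
print(diagonal(A) <= leq)                     # True:  reflexive
print(inverse(leq) <= leq)                    # False: not symmetric
print((leq & inverse(leq)) <= diagonal(A))    # True:  antisymmetric

B = {"x", "y"}
f = {(1, "x"), (2, "x"), (3, "y")}            # a function A → B

print(compose(inverse(f), f) <= diagonal(B))  # True:  f is a function
print(compose(f, inverse(f)) <= diagonal(A))  # False: f is not injective
print({b for (_, b) in f} == B)               # True:  f is surjective
```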

Functions

A function is a relationship between two sets of numbers. We may think of this as a mapping; a function maps a number in one set to a number in another set. Notice that a function maps values to one and only one value. Two values in one set could map to one value, but one value must never map to two values: that would be a relation, not a function.

For example, if we write (define) a function as:

- f(x) = x²

then we say:

- 'f of x equals x squared'

and we have

- f(1) = 1, f(2) = 4, f(3) = 9

and so on.

This function f maps numbers to their squares.

Range and codomain

If D is a set, we can say

- f(D) = { f(x) | x ∈ D }

which forms a new set, called the range of f. The range of f is usually a subset of a larger set. This set is known as the codomain of a function. For example, with the function f(x)=cos x, the range of f is [-1,1], but the codomain is the set of real numbers.

Notations

When we have a function f, with domain D and range R, we write:

- f : D → R

If we say that, for instance, x is mapped to x², we also can add

- f : D → R; x ↦ x²

Notice that we can have a function that maps a point (x,y) to a real number, or some other function of two variables -- we have a set of ordered pairs as the domain. Recall from set theory that this is defined by the Cartesian product - if we wish to represent a set of all real-valued ordered pairs we can take the Cartesian product of the real numbers with itself to obtain

- ℝ × ℝ = ℝ².

When we have a set of n-tuples as part of the domain, we say that the function is n-ary (for numbers n=1,2 we say unary, and binary respectively).

Other function notation

Functions can be written as above, but we can also write them in two other ways. One way is to use an arrow diagram to represent the mappings between each element. We write the elements from the domain on one side, and the elements from the range on the other, and we draw arrows to show that an element from the domain is mapped to the range.

For example, for the function f(x)=x³, the arrow diagram for the domain {1,2,3} would be:

Another way is to use set notation. If f(x)=y, we can write the function in terms of its mappings. This idea is best shown in an example.

Let us take the domain D={1,2,3}, and f(x)=x². Then, the range of f will be R={f(1),f(2),f(3)}={1,4,9}. Pairing each element of D with its image under f, we obtain F={(1,1),(2,4),(3,9)}, which is a subset of the Cartesian product of D and R.

So using set notation, a function can be expressed as a subset of the Cartesian product of its domain and range.

f(x)

This function is called f, and it takes a variable x. We substitute some value for x to get the second value, which is what the function maps x to.

Types of functions

Functions can be one-to-one (injective), onto (surjective), both (bijective), or neither.

Injective functions are functions in which distinct elements of the domain map to distinct elements of the codomain.

Surjective functions are functions in which every element in the codomain is mapped to by at least one element in the domain.

Bijective functions are functions that are both injective and surjective.

Onto functions: a function f from A to B is onto if, for every b in B, there is some a in A such that f(a) = b.

Number theory

Introduction

'Number theory' is a large encompassing subject in its own right. Here we will examine the key concepts of number theory.

Unlike real analysis and calculus, which deal with the dense set of real numbers, number theory examines mathematics in discrete sets, such as N or Z. If you are unsure about sets, you may wish to revisit Set theory.

Number Theory, the study of the integers, is one of the oldest and richest branches of mathematics. Its basic concepts are those of divisibility, prime numbers, and integer solutions to equations -- all very simple to understand, but immediately giving rise to some of the best known theorems and biggest unsolved problems in mathematics. The Theory of Numbers is also a very interdisciplinary subject. Ideas from combinatorics (the study of counting), algebra, and complex analysis all find their way in, and eventually become essential for understanding parts of number theory. Indeed, the greatest open problem in all mathematics, the Riemann Hypothesis, is deeply tied into Complex Analysis. But never fear, just start right into Elementary Number Theory, one of the warmest invitations to pure mathematics, and one of the most surprising areas of applied mathematics!

Divisibility

Note that in R, Q, and C, we can divide freely, except by zero. This property is often known as closure: the quotient of two rationals is again a rational, and so on. However, if we move to performing mathematics purely in a set such as Z, we come into difficulty. This is because, in the integers, the result of a division of two integers might not be another integer. For example, we can of course divide 6 by 2 to get 3, but we cannot divide 6 by 5, because the fraction 6/5 is not in the set of integers.

However we can introduce a new relation where division is defined. We call this relation divisibility, and if b/a is an integer, we say:

- a divides b

- a is a factor of b

- b is a multiple of a

- b is divisible by a

Formally, if there exists an integer q such that b = qa then we say that a divides b and write a | b. If a does not divide b then we write a ∤ b.

Proposition. The following are basic consequences of this definition. Let a, b, and c be integers:

- (a) If a|b then a|(bc).

- (b) If a|b and b|c, then a|c.

- (c) If a|b and a|c, then for any integers x and y, a|(xb+yc) -- in other words a divides any linear combination of its multiples.

- (d) If both a|b and b|a, then a = b or a = -b.

- (e) If c is not 0, then a|b is equivalent to ca|cb.
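As a worked example of how such consequences follow from the definition, here is a short derivation of part (c). The integers m and n are names introduced only for this sketch.

```latex
\begin{align*}
a \mid b &\implies b = ma \text{ for some integer } m,\\
a \mid c &\implies c = na \text{ for some integer } n,\\
xb + yc &= x(ma) + y(na) = (xm + yn)\,a .
\end{align*}
```

Since xm + yn is an integer, a divides xb + yc. The other parts can be handled in the same way, by writing each divisibility statement as an equation.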

Quotient and divisor theorem

For any integer n and any integer k > 0, there are unique integers q and r such that:

- n = qk + r (with 0 ≤ r < k)

Here n is known as the dividend.

We call q the quotient, r the remainder, and k the divisor.

It is probably easier to recognize this as division by the algebraic re-arrangement:

- n/k = q + r/k (0 ≤ r/k < 1)
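In Python, the built-in `divmod` returns such a pair (q, r) when the divisor is positive, including for negative dividends. The wrapper `quotient_remainder` below is a hypothetical helper written for this illustration.

```python
def quotient_remainder(n, k):
    """Return (q, r) with n == q * k + r and 0 <= r < k, for an integer k > 0."""
    if k <= 0:
        raise ValueError("the divisor k must be positive")
    q, r = divmod(n, k)   # for k > 0, Python guarantees 0 <= r < k
    return q, r


print(quotient_remainder(17, 5))   # (3, 2)   since 17 = 3*5 + 2
print(quotient_remainder(6, 5))    # (1, 1)   since 6 = 1*5 + 1
print(quotient_remainder(-7, 3))   # (-3, 2)  since -7 = (-3)*3 + 2
```

Note that some other languages round the quotient toward zero for negative n, which gives a negative remainder and so does not match the theorem as stated here.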

Modular arithmetic

What can we say about the numbers that divide another? Pick the number 8 for example. What is the remainder on dividing 1 by 8? Using the division theorem above

- 0 = 8*0 + 0

- 1 = 8*0 + 1

- 2 = 8*0 + 2

- :

- 8 = 8*1 + 0

- 9 = 8*1 + 1

- 10 = 8 * 1 + 2

- :

- and so on

We have a notation for the remainders, and can write the above equations as

- 0 mod 8 = 0

- 1 mod 8 = 1

- 2 mod 8 = 2

- 3 mod 8 = 3

- 4 mod 8 = 4

- 5 mod 8 = 5

- 6 mod 8 = 6

- 7 mod 8 = 7

- 8 mod 8 = 0

- 9 mod 8 = 1

- 10 mod 8 = 2

- :

We can also write

- 1 ≡ 1 (mod 8)

- 2 ≡ 2 (mod 8)

- 3 ≡ 3 (mod 8)

- 4 ≡ 4 (mod 8)

- 5 ≡ 5 (mod 8)

- 6 ≡ 6 (mod 8)

- 7 ≡ 7 (mod 8)

- 8 ≡ 0 (mod 8)

- 9 ≡ 1 (mod 8)

- 10 ≡ 2 (mod 8)

- :

These notations are all short for

- a = 8k+r for some integer k.

So x ≡ 1 (mod 8), for example, is the same as saying

- x = 8k+1

Observe that the remainder here, in comparing it with the division algorithm is 1. x ≡ 1 (mod 8) asks what numbers have the remainder 1 on division by 8? Clearly the solutions are x=8×0+1, 8×1+1,... = 1, 9, ...

Often the entire set of remainders on dividing by n (which we call working modulo n) is interesting to look at. We write this set Zₙ. Note that this set is finite. The remainder on dividing 9 by 8 is 1, the same as on dividing 1 by 8. So in a sense 9 is really "the same as" 1. In fact, the relation "≡"

- x ≡ y iff x mod n = y mod n.

is an equivalence relation. We call this relation congruence. Note that the equivalence classes defined by congruence correspond precisely to the elements of Zₙ.

We can find some number a modulo n (that is, the remainder of a on division by n) by finding its decomposition using the division algorithm.

Addition, subtraction, and multiplication work in Zₙ: for example, 3 + 6 (mod 8) = 9 (mod 8) = 1 (mod 8). The numbers do look strange but they follow many normal properties such as commutativity and associativity.

If we have a number greater than n we often reduce it modulo n first, again using the division algorithm. For example, if we want to find 11 + 3 mod 8, it's often easier to calculate 3 + 3 (mod 8) = 6 rather than reducing 14 mod 8. A trick that's often used is that, say, if we have 6 + 7 (mod 8) we can use negative numbers instead, so the problem becomes -2 + -1 = -3 = 5 (mod 8).
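The small calculations above can be reproduced with Python's `%` operator, which for a positive modulus always returns a remainder between 0 and n - 1. The variable names are chosen only for this sketch.

```python
n = 8

# 3 + 6 = 9, which reduces to 1 modulo 8.
sum_mod = (3 + 6) % n
print(sum_mod)                       # 1

# Reducing before or after adding gives the same result.
late = (11 + 3) % n                  # reduce 14 at the end
early = ((11 % n) + (3 % n)) % n     # reduce 11 to 3 first, then add
print(late, early)                   # 6 6

# The "negative numbers" trick: 6 ≡ -2 and 7 ≡ -1 (mod 8).
direct = (6 + 7) % n
trick = (-2 + -1) % n
print(direct, trick)                 # 5 5
```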

We often use the second notation when we want to look at equations involving numbers modulo some n. For example, we may want to find a number x such that

- 3x ≡ 5 (mod 8)

We can find solutions by trial substitution (going through all the numbers 0 through 7), but what if the moduli are very large? We will look at a more systematic solution later.
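Trial substitution is easy to sketch in code: try every residue from 0 to n - 1 and keep those that satisfy the congruence. The function name `solve_congruence` is a hypothetical choice; this is the brute-force approach described above, not the more systematic method promised for later.

```python
def solve_congruence(a, b, n):
    """Return all x in 0..n-1 with a*x ≡ b (mod n), found by trial substitution."""
    return [x for x in range(n) if (a * x - b) % n == 0]


print(solve_congruence(3, 5, 8))   # [7], since 3 * 7 = 21 = 2 * 8 + 5
```

The running time grows linearly with n, which is why this approach becomes impractical for very large moduli.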

Note: we often say that we are working in Zₙ and use equals signs throughout. Familiarize yourself with the three ways of writing modular equations and expressions.

The Consistency of Modular Arithmetic

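Let n denote an arbitrary base. Given an arbitrary integer a, the sequence of integers ..., a - 2n, a - n, a, a + n, a + 2n, ... are all congruent to each other modulo n:

- ... ≡ a - 2n ≡ a - n ≡ a ≡ a + n ≡ a + 2n ≡ ... (mod n)

In modular arithmetic, two integers a and a' that are congruent modulo n (a ≡ a' (mod n)) both "represent" the same quantity from Zₙ. It should be possible to substitute an arbitrary integer a' in place of integer a provided that a ≡ a' (mod n).

This means that:

- Given arbitrary integers a and b, if a ≡ a' (mod n) and b ≡ b' (mod n), then a + b ≡ a' + b' (mod n).

Since a ≡ a' (mod n), there exists an integer j such that a' = a + jn.

Since b ≡ b' (mod n), there exists an integer k such that b' = b + kn.

It can be derived that a' + b' = (a + b) + (j + k)n so therefore a + b ≡ a' + b' (mod n).

- Given arbitrary integers a and b, if a ≡ a' (mod n) and b ≡ b' (mod n), then ab ≡ a'b' (mod n).

Since a ≡ a' (mod n), there exists an integer j such that a' = a + jn.

Since b ≡ b' (mod n), there exists an integer k such that b' = b + kn.

It can be derived that a'b' = ab + (ak + bj + jkn)n so therefore ab ≡ a'b' (mod n).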

Number Bases

Converting between various number bases is one of the most tedious processes in mathematics.

The numbers that are generally used in transactions are all in base-10. This means that there are 10 digits that are used to describe a number. These ten digits are {0,1,2,3,4,5,6,7,8,9}.

Similarly, base-4 has 4 digits {0,1,2,3} and base-2 has two digits {0,1}. Base two is sometimes referred to as Binary.

There are also bases greater than 10. For these bases, it is customary to use letters to represent digits greater than 9. An example is Base-16 (Hexadecimal). The digits used in this base are {0,1,2,3,4,5,6,7,8,9,A,B,C,D,E,F}.

In order to convert between number bases, it is critical that one knows how to divide numbers and find remainders.

To convert from decimal to another base, divide the number by the value of the other base and note the remainder; then divide the quotient of that division by the base, again noting the remainder, and so on until the quotient is zero (which happens once the base is larger than the number being divided). The number in the desired base is then the sequence of remainders read from last to first.

The following shows how to convert a number (105) which is in base-10 into base-2.

| Operation | Remainder |

|---|---|

| 105 / 2 = 52 | 1 |

| 52 / 2 = 26 | 0 |

| 26 / 2 = 13 | 0 |

| 13 / 2 = 6 | 1 |

| 6 / 2 = 3 | 0 |

| 3 / 2 = 1 | 1 |

| 1 / 2 = 0 | 1 |

Answer: 1101001

After finishing this process, the remainders are taken and placed in a row, read from bottom to top. The result (1101001, in this example) is the base-2 equivalent of the number 105.

To sum up the process, simply take the original number in base 10 and divide it repeatedly by the base, keeping track of the remainders, until the quotient becomes zero.

This works when converting any number from base-10 to any base. If there are any letters in the base digits, then use the letters to replace any remainder greater than 9. For example, consider writing 11 (in base-10) in base 14.

| Operation | Remainder |

|---|---|

| 11 / 14 = 0 | B (=11) |

Answer: B

As 11 is a single remainder, it is written as a single digit. Following the pattern {10=A, 11=B, 12=C...35=Z}, write it as B. If you were to write "11" as the answer, it would be wrong, as "11" Base-14 is equal to 15 in base-10!
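The repeated-division procedure can be sketched as a short Python function. The names `DIGITS` and `to_base` are hypothetical, and the digit alphabet follows the pattern {10=A, 11=B, ..., 35=Z} mentioned above, so bases from 2 to 36 are supported.

```python
DIGITS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def to_base(n, base):
    """Convert a non-negative base-10 integer n to a digit string in `base`."""
    if not 2 <= base <= len(DIGITS):
        raise ValueError("base must be between 2 and 36")
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    remainders = []
    while n > 0:
        n, r = divmod(n, base)         # divide, keep the quotient, note the remainder
        remainders.append(DIGITS[r])
    return "".join(reversed(remainders))   # remainders are read from last to first


print(to_base(105, 2))    # 1101001
print(to_base(11, 14))    # B
```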

In order to convert from a number in any base back to base ten, the following process should be used:

Take the number 3210 (in base-10). In the units place ($10^0$), there is a 0. In the tens place ($10^1$), there is a 1. In the hundreds place ($10^2$), there is a 2. In the thousands place ($10^3$), there is a 3.

The formula to find the value of the above number is:

$3 \times 10^3 + 2 \times 10^2 + 1 \times 10^1 + 0 \times 10^0 = 3000 + 200 + 10 + 0 = 3210$.

The process is similar when converting from any base to base-10. For example, take the number 3452 (in base-6). In the units place ($6^0$), there is a 2. In the sixes place ($6^1$), there is a 5. In the thirty-sixes place ($6^2$), there is a 4. In the 216s place ($6^3$), there is a 3.

The formula to find the value of the above number (in base-10) is:

$3 \times 6^3 + 4 \times 6^2 + 5 \times 6^1 + 2 \times 6^0 = 648 + 144 + 30 + 2 = 824$.

The value of 3452 (base-6) is 824 in base-10.

A more efficient algorithm is to start with the left-most digit, multiply it by the base, add the next digit, multiply the result by the base again, and so on, finishing by adding the right-most digit.

((3 * 6 + 4) * 6 + 5) * 6 + 2 = 824
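
The multiply-and-add scheme shown above can be sketched in Python as follows. The names `DIGITS` and `from_base` are hypothetical, chosen for this illustration, and the function assumes digits 0-9 and A-Z for bases up to 36.

```python
# Digit symbols following the pattern 10=A, 11=B, ..., 35=Z.
DIGITS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def from_base(text: str, base: int) -> int:
    """Convert a digit string in the given base to an integer."""
    if not 2 <= base <= len(DIGITS):
        raise ValueError("base must be between 2 and 36")
    value = 0
    for ch in text.upper():
        digit = DIGITS.find(ch)
        if digit < 0 or digit >= base:
            raise ValueError(f"{ch!r} is not a valid digit in base {base}")
        # Multiply the running total by the base, then add the next digit.
        value = value * base + digit
    return value


print(from_base("3452", 6))     # 824
print(from_base("1101001", 2))  # 105
```

Python's built-in `int("3452", 6)` performs the same conversion and can be used to cross-check the result.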

The processes to convert between number bases may seem difficult at first, but become easy if one practices often.

Prime numbers

Prime numbers are the building blocks of the integers. A prime number is a positive integer greater than one that has exactly two positive divisors: 1, and the number itself. For example, 17 is prime because the only positive integers that divide evenly into it are 1 and 17. The number 6 is not a prime since it has more than two divisors: 1, 2, 3, and 6 all divide 6. Also, note that 1 is not a prime since 1 has only one divisor.

Some prime numbers

The prime numbers as a sequence begin

- 2, 3, 5, 7, 11, 13, 17, 19, 23, ...

Euclid's Proof that There are Infinitely Many Primes

The Greek mathematician Euclid gave the following elegant proof that there are an infinite number of primes. It relies on the fact that every non-prime number greater than 1 (every composite) can be factored into primes, and so is divisible by at least one prime.

Euclid's proof works by contradiction: we will assume that there are a finite number of primes, and show that we can derive a logically contradictory fact.

So here we go. First, we assume that there are a finite number of primes:

- $p_1, p_2, \ldots, p_n$

Now consider the number M defined as follows:

- $M = 1 + p_1 \cdot p_2 \cdots p_n$

There are two important, and ultimately contradictory, facts about the number M:

- It cannot be prime because $p_n$ is the biggest prime (by our initial assumption), and M is clearly bigger than $p_n$. Thus, M is composite, and there must be some prime p that divides M.

- It is not divisible by any of the numbers $p_1, p_2, \ldots, p_n$. Consider what would happen if you tried to divide M by any of the primes in the list. From the definition of M, you can tell that you would end up with a remainder of 1. That means that p, the prime that divides M, is not on the list and so must be bigger than any of $p_1, \ldots, p_n$.

These two facts imply that M is divisible by a prime p that is bigger than $p_n$ and therefore not on the finite list of all primes, which contradicts our assumption. Thus, there cannot be a biggest prime, and so there must be an infinite number of primes.

Note that this proof does not provide us with a direct way to generate arbitrarily large primes, although it always generates a number which is divisible by a new prime. Suppose we know only one prime: 2. So, our list of primes is simply $p_1 = 2$. Then, in the notation of the proof, M = 1 + 2 = 3. We note that M is prime, so we add 3 to the list. Now, M = 1 + 2*3 = 7. Again, 7 is prime. So we add it to the list. Now, M = 1 + 2*3*7 = 43: again prime. Continuing in this way one more time, we calculate M = 1 + 2*3*7*43 = 1807 = 13*139. So we see that M is not prime, although its prime factors 13 and 139 are both new.

Viewed another way: note that while 1+2, 1+2*3, 1+2*3*5, 1+2*3*5*7, and 1+2*3*5*7*11 are prime, 1+2*3*5*7*11*13=30031=59*509 is not.
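
The numerical examples above can be checked with a short Python sketch. The function names `smallest_prime_factor` and `euclid_step` are invented for this illustration; the sketch builds M from a given list of primes and reports the smallest prime dividing M, which by the argument in the proof is never on the list.

```python
from math import prod


def smallest_prime_factor(m: int) -> int:
    """Return the smallest prime dividing m (assumes m >= 2)."""
    d = 2
    while d * d <= m:
        if m % d == 0:
            return d
        d += 1
    return m  # no divisor found up to sqrt(m), so m itself is prime


def euclid_step(primes):
    """Return (M, p) where M = 1 + product of primes and p is a prime dividing M."""
    m = 1 + prod(primes)
    return m, smallest_prime_factor(m)


print(euclid_step([2, 3, 7, 43]))         # (1807, 13)
print(euclid_step([2, 3, 5, 7, 11, 13]))  # (30031, 59)
```

In both cases M is composite, yet the prime found (13 and 59 respectively) does not appear in the input list.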

Testing for primality

There are a number of simple and sophisticated primality tests. We will consider some simple tests here. In upper-level courses we will consider some faster and more sophisticated methods to test whether a number is prime.

Inspection

The most immediate and simple test to eliminate a number n as a prime is to inspect the units digit or the last digit of a number.

If a number n greater than 2 ends in an even digit (0, 2, 4, 6, or 8), we can show that n cannot be a prime. For example, take n = 87536 = 8753(10) + 6. Since 10 is divisible by 2 and 6 is divisible by 2, 87536 must be divisible by 2. In general, any even number can be expressed in the form n = a*10 + b, where b = 0, 2, 4, 6, or 8. Since 10 is divisible by 2 and b is divisible by 2, n = a*10 + b is divisible by 2. Consequently, any number n which ends in an even digit, such as 7777732 or 8896, is divisible by 2, so n is not a prime (the only exception being 2 itself).

By a similar argument, if a number n greater than 5 ends in a 5, we can show that n cannot be a prime. If the last digit of n, call it b, is a 5, we can express n in the form n = a*10 + b, where b = 5. Since 10 is divisible by 5 and b = 5 is divisible by 5, n = a*10 + b is divisible by 5. Hence, any number n which ends in a 5, such as 93475, is divisible by 5, so n is not a prime (the only exception being 5 itself).

Thus, if a number greater than 5 is a prime it must end with either a 1, 3, 7, or 9. Note that this does not mean all numbers that end in a 1, 3, 7, or 9 are primes. For example, while the numbers 11, 23, 37, 59 are primes, the numbers 21 = 3*7, 33 = 3*11, 27 = 3*9, 39 = 3*13 are not primes. Consequently, if a number ends in a 1, 3, 7, or 9 we have to test further.
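
As a small illustration of the inspection test, here is a Python sketch. The function name `may_be_prime_by_last_digit` is hypothetical; a result of `True` only means the last digit does not rule the number out, so further testing is still needed.

```python
def may_be_prime_by_last_digit(n: int) -> bool:
    """Return False if the last digit alone shows n is not prime."""
    if n in (2, 5):
        return True  # the only primes ending in an even digit or in 5
    return n % 10 in (1, 3, 7, 9)


print(may_be_prime_by_last_digit(87536))  # False: ends in 6
print(may_be_prime_by_last_digit(93475))  # False: ends in 5
print(may_be_prime_by_last_digit(21))     # True, although 21 = 3*7 is not prime
```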

Trial Division Method

To test if a number n that ends in a 1, 3, 7, or 9 is prime, we could simply take the smallest prime number and try to divide n by it. If that doesn't divide n, we would take the next larger prime number and try again, and so on. Certainly, if we tried all prime numbers less than n in this manner and none of them divided n, then we would be justified in saying n is prime. However, it can be shown that you don't have to take all primes smaller than n to test if n is prime. We can stop earlier by using the Trial Division Method.

The justification of the Trial Division Method is that if a number n has no divisors greater than 1 and less than or equal to $\sqrt{n}$, then n must be a prime. We can show this by contradiction. Let us assume n has no divisors greater than 1 and less than or equal to $\sqrt{n}$. If n is not a prime, there must be two numbers a and b, both greater than 1, such that $n = ab$. In particular, by our assumption, $a > \sqrt{n}$ and $b > \sqrt{n}$. But then $n = ab > \sqrt{n} \cdot \sqrt{n} = n$. Since a number cannot be greater than itself, the number n must be a prime.

Trial Division Method is a method of primality testing that involves taking a number n and then sequentially dividing it by primes up to $\sqrt{n}$.

For example, is 113 prime? $\sqrt{113}$ is approximately 10.63, so we only need to test whether 2, 3, 5, 7 divide 113 cleanly (leave no remainder, i.e., the quotient is an integer).

- 113/2 is not an integer since the last digit is not even.

- 113/3 (=37.666...) is not an integer.

- 113/5 is not an integer since the last digit is not 0 or 5.

- 113/7 (=16.142857...) is not an integer.

Since none of 2, 3, 5, 7 divides 113 cleanly, 113 is prime. We need not test any larger primes such as 11, 13, 17, etc., because they are all greater than $\sqrt{113}$.

Notice that after rejecting 2 and 3 as divisors, we next considered the next prime number, 5, and not the next number, 4. We know not to consider 4 because we know 2 does not divide 113: if 4 divided 113, then, since 2 divides 4, 2 would also divide 113. So we only test the next available prime, not the next consecutive number.

If we test 91 we get:

- 91/2 is not an integer since the last digit is not even.

- 91/3 (=30.333...) is not an integer.

- 91/5 is not an integer since the last digit is not 0 or 5.

- 91/7 = 13 is an integer.

So, since 7 divides 91, we know that 91 is not a prime.

Trial division is normally only used for relatively small numbers due to its inefficiency. However, this technique has two advantages: first, once we have tested a number we know for sure whether it is prime; and second, if a number is not prime, it also gives us one of the number's factors.
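
The two worked examples (113 and 91) can be reproduced with a minimal Python sketch of trial division. The name `trial_division` is made up for this example. As a simplification, the sketch tries every integer from 2 upward rather than only primes; this gives the same answer, because a composite candidate can only divide n if one of its smaller prime factors already did.

```python
def trial_division(n: int):
    """Return the smallest prime factor of n, or None if n is prime (n >= 2)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    d = 2
    while d * d <= n:  # only candidates up to sqrt(n) are needed
        if n % d == 0:
            return d   # n is composite and d is a factor
        d += 1
    return None        # no divisor up to sqrt(n), so n is prime


print(trial_division(113))  # None, so 113 is prime
print(trial_division(91))   # 7, so 91 = 7 * 13 is not prime
```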

To obtain a few small primes, it may be better to use the Sieve of Eratosthenes than to test each number sequentially using trial division. The Sieve of Eratosthenes is a process of finding primes by elimination. We start by taking a list of consecutive numbers, say 1 to 100. Cross out the number 1 because it is not prime. Take the smallest number that is not yet marked, which is 2, and circle it. Now cross out all other multiples of 2 on the list. The smallest unmarked number is now 3. Circle the number 3 and cross out all other multiples of 3. The next smallest unmarked number is 5, since 4 is a multiple of 2 and has already been crossed out. Circle the number 5 and cross out all other multiples of 5. The next smallest unmarked number is 7, since 6 is a multiple of 2. Circle the 7 and cross out all other multiples of 7. The next unmarked number is 11, since 8, 9, and 10 are multiples of 2, 3, and 2 respectively. If we continue in this manner, the circled numbers that remain are the primes. But notice that we can actually stop after crossing out the multiples of 7 and circle all the remaining unmarked numbers, because any composite number up to 100 must be divisible by a prime no greater than $\sqrt{100} = 10$, that is, by 2, 3, 5, or 7.
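
The crossing-out process can be sketched in Python. The function name `sieve_of_eratosthenes` and the list `unmarked` are choices made for this illustration: a `False` entry plays the role of a crossed-out number, and whatever is still `True` at the end corresponds to a circled number.

```python
def sieve_of_eratosthenes(limit: int) -> list:
    """Return all primes up to and including limit."""
    if limit < 2:
        return []
    unmarked = [True] * (limit + 1)
    unmarked[0] = unmarked[1] = False  # 0 and 1 are not prime
    p = 2
    while p * p <= limit:  # we can stop once p exceeds sqrt(limit)
        if unmarked[p]:
            # Cross out every multiple of p other than p itself.
            for multiple in range(2 * p, limit + 1, p):
                unmarked[multiple] = False
        p += 1
    return [n for n, is_prime in enumerate(unmarked) if is_prime]


primes = sieve_of_eratosthenes(100)
print(primes[:10])  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
print(len(primes))  # 25
```

For a limit of 100 the outer loop only ever crosses out multiples of 2, 3, 5, and 7, matching the early stopping point described above.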

The Fundamental Theorem of Arithmetic

The Fundamental Theorem of Arithmetic is an important theorem relating to the factorization of numbers. The theorem basically states that every positive integer can be written as the product of prime numbers in a unique way (ignoring reordering the product of prime numbers).

In particular, the Fundamental Theorem of Arithmetic means that any number greater than 1, such as 1,943,032,663, is either a prime or can be factored into a product of primes. Once such a number has been factored into primes, as in 1,943,032,663 = 11×13×17×19×23×31×59, it is futile to try to find a different combination of prime numbers that gives the same number.

To make the theorem work even for the number 1, we think of 1 as being the product of zero prime numbers.

More formally,

- For all $n \in \mathbb{N}$,

- $n = p_1 p_2 p_3 \cdots p_k$

- where the $p_i$ are all prime numbers, and can be repeated.

Here are some examples.

- $4 = 2 \times 2 = 2^2$

- $12 = 2 \times 2 \times 3 = 2^2 \times 3$

- 11 = 11.

A proof of the Fundamental Theorem of Arithmetic will be given after Bezout's identity has been established.
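
The factorizations in the examples above can be computed with a short Python sketch based on repeated trial division. The function name `prime_factorization` is hypothetical, and the result is returned as a dictionary mapping each prime to its exponent (an empty dictionary for 1, matching the convention that 1 is the product of zero primes).

```python
def prime_factorization(n: int) -> dict:
    """Return {prime: exponent} for a positive integer n."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    factors = {}
    p = 2
    while p * p <= n:
        while n % p == 0:  # divide out p as many times as it occurs
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:              # whatever remains is itself a prime factor
        factors[n] = factors.get(n, 0) + 1
    return factors


print(prime_factorization(4))   # {2: 2}
print(prime_factorization(12))  # {2: 2, 3: 1}
print(prime_factorization(11))  # {11: 1}
```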

LCM and GCD

Two characteristics we can determine for a pair of numbers from their factorizations are the lowest common multiple (LCM) and the greatest common divisor (GCD), also called the greatest common factor (GCF).

LCM

The lowest common multiple, or least common multiple, of two numbers a and b, written LCM(a,b), is the smallest positive number that is divisible by both a and b. We can find LCM(a,b) by finding the prime factorizations of a and b and choosing the maximum power for each prime factor.

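In other words, if the number a factors to $p_1^{a_1} p_2^{a_2} \cdots p_n^{a_n}$, and the number b factors to $p_1^{b_1} p_2^{b_2} \cdots p_n^{b_n}$, then LCM(a,b) = $p_1^{c_1} p_2^{c_2} \cdots p_n^{c_n}$, where $c_i = \max(a_i, b_i)$ for i = 1 to n.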
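
The rule of choosing the maximum power for each prime factor can be sketched in Python. The helper names `prime_exponents` and `lcm_by_factorization` are invented for this illustration, and the factorization is done by simple trial division, which is only practical for small numbers.

```python
from collections import Counter


def prime_exponents(n: int) -> Counter:
    """Return a Counter mapping each prime factor of n to its exponent."""
    exponents = Counter()
    p = 2
    while p * p <= n:
        while n % p == 0:
            exponents[p] += 1
            n //= p
        p += 1
    if n > 1:
        exponents[n] += 1
    return exponents


def lcm_by_factorization(a: int, b: int) -> int:
    """Compute LCM(a, b) by taking the larger exponent of every prime."""
    fa, fb = prime_exponents(a), prime_exponents(b)
    result = 1
    for p in set(fa) | set(fb):
        # A Counter returns 0 for a prime that does not occur in a number.
        result *= p ** max(fa[p], fb[p])
    return result


print(lcm_by_factorization(5500, 450))  # 49500
```

A `Counter` is used so that a prime missing from one factorization automatically counts as having exponent 0.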

As an example, let us find the lowest common multiple of 5500 and 450, which happens to be 49500. First, we find the prime factorizations of 5500 and 450, which are

- $5500 = 2^2 \cdot 5^3 \cdot 11$

- $450 = 2 \cdot 3^2 \cdot 5^2$

Notice that the different primes we came up with for the numbers 5500 and 450 are 2, 3, 5, and 11. Now let us express both 5500 and 450 as a product of all of these primes, each raised to the appropriate power.

- $5500 = 2^2 \cdot 5^3 \cdot 11 = 2^2 \cdot 3^0 \cdot 5^3 \cdot 11^1$

- $450 = 2 \cdot 3^2 \cdot 5^2 = 2^1 \cdot 3^2 \cdot 5^2 \cdot 11^0$

The LCM(5500,450) is going to be of the form $2^? \cdot 3^? \cdot 5^? \cdot 11^?$. All we now have to do is find what the power of each individual prime will be.