OpenMP/Overview

This book is about OpenMP, a language extension to C (and C++) that allows for easy parallel programming. To understand what OpenMP makes possible, it is first important to know what parallel programming is and what it isn't.

Parallel programming means writing software that operates on several compute devices at the same time. For the purpose of this book, a "compute device" is primarily a processor (CPU) or a processor core within a multicore CPU, which is the focus of OpenMP 3. In other settings, a compute device may mean a full-blown computer within a cluster of computers, or a different type of device such as a graphics processing unit (GPU). An extension of OpenMP to cluster computing has been devised by Intel, and OpenMP 4 adds support for executing code on non-CPU devices, but these are outside the scope of this book.

Parallel programming is mainly done to speed up computations that have to be fast. Ideally, if you have n compute devices (cores) to run a program, you'd want the program to be n times as fast as when it runs on a single device. This ideal is rarely reached in practice, because the compute devices need to communicate with each other, sending and waiting for messages such as "give me the result of your computation". Still, parallel programming can speed up many applications by a factor close to n.
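The relation between running time and speedup can be made concrete with a small sketch. The helper names `speedup` and `efficiency`, and the timings in the note that follows, are made up for illustration; they are not part of OpenMP.

```c
/* Speedup: how many times faster the parallel run is than the
 * sequential one. Ideally this equals the number of devices n. */
double speedup(double t_sequential, double t_parallel)
{
    return t_sequential / t_parallel;
}

/* Parallel efficiency: the fraction of the ideal speedup n that was
 * actually achieved; 1.0 would mean a perfect n-fold speedup. */
double efficiency(double t_sequential, double t_parallel, int n)
{
    return speedup(t_sequential, t_parallel) / n;
}
```

For instance, with hypothetical timings of 8.0 seconds on one core and 2.5 seconds on four cores, `speedup(8.0, 2.5)` gives about 3.2 and `efficiency(8.0, 2.5, 4)` gives about 0.8: close to, but short of, the ideal factor of 4.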

Parallel programming should be distinguished from concurrent programming, which means breaking down a program into processes that conceptually run at the same time. There is considerable overlap between parallel and concurrent programs: both involve decomposing a task into distinct subtasks that can be executed separately, and concurrent programs can benefit from having their tasks run on multiple compute devices in parallel. However, full concurrent programming is a different art from parallel programming, because the communication between concurrent tasks usually differs in kind from the communication between parallel tasks.

OpenMP adds support for parallel programming to C in a very clean way. Unlike with thread libraries, existing programs need little change to run on multiple processors in parallel. In fact, the basic constructs of OpenMP are so non-intrusive that programs using them will still work when compiled by a compiler that doesn't support OpenMP (although sequentially, of course). To see what that means, consider the following C function:

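    #include <math.h>    /* sin */
    #include <stddef.h>  /* size_t */

    /* Replace each element of a[0..n-1] with its sine. */
    void broadcast_sin(double *a, size_t n)
    {
        /* Split the loop iterations among the available threads. */
        #pragma omp parallel for
        for (size_t i = 0; i < n; i++) {
            a[i] = sin(a[i]);
        }
    }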

This function "broadcasts" the sine function over an array, storing its results back into that same array. When OpenMP is enabled in the compiler, the loop is executed by multiple threads; without OpenMP, the pragma is ignored and the function still works, producing exactly the same result.
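The following is a minimal sketch of how the same source can serve both cases. It relies on the `_OPENMP` macro, which compilers define only when OpenMP is enabled; the function names `square_all` and `openmp_enabled` are hypothetical and chosen for this illustration only.

```c
#include <stddef.h>  /* size_t */

/* Hypothetical helper: square every element of a[0..n-1] in place.
 * Without OpenMP the pragma is ignored and the loop runs sequentially;
 * the contents of a end up the same either way. */
void square_all(double *a, size_t n)
{
    #pragma omp parallel for
    for (size_t i = 0; i < n; i++) {
        a[i] = a[i] * a[i];
    }
}

/* Hypothetical helper: returns 1 if the compiler had OpenMP enabled,
 * 0 otherwise. The _OPENMP macro is defined only in the first case. */
int openmp_enabled(void)
{
#ifdef _OPENMP
    return 1;
#else
    return 0;
#endif
}
```

With GCC, for example, the same file can be built with the `-fopenmp` flag to get the multithreaded version, or without it to get the sequential one.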

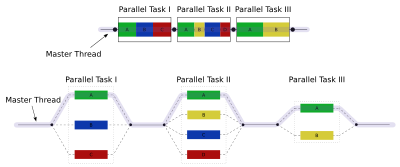

OpenMP programs work according to a so-called fork–join model, illustrated in the figure above. The OpenMP runtime typically maintains a thread pool, and every time a parallel region is encountered, it distributes work over the threads in the pool (the fork). When all threads are done, they join and sequential execution is resumed.
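The fork and join points can be seen in a short sketch. The function name `team_size` is hypothetical; `omp_get_num_threads` is the standard OpenMP library routine that reports how many threads are executing the current parallel region. The `_OPENMP` guards are an assumption made here so that the code also compiles, and simply reports one thread, when OpenMP is not enabled.

```c
#ifdef _OPENMP
#include <omp.h>  /* omp_get_num_threads */
#endif

/* Hypothetical helper: returns the number of threads that execute one
 * parallel region, or 1 when compiled without OpenMP. */
int team_size(void)
{
    int size = 1;  /* before the region: one thread */

    /* Fork: a team of threads executes the following block. */
    #pragma omp parallel
    {
        /* Only one thread of the team records the team size. */
        #pragma omp single
        {
#ifdef _OPENMP
            size = omp_get_num_threads();
#endif
        }
    }
    /* Join: the team has finished and one thread continues. */

    return size;
}
```

The value returned depends on the machine and on settings such as the `OMP_NUM_THREADS` environment variable, so no particular number should be expected beyond its being at least 1.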