Microprocessor Design/Interrupts

An interrupt is a condition that causes the microprocessor to temporarily work on a different task, and then later return to its previous task. Interrupts can be internal or external. Internal interrupts, or "software interrupts," are triggered by a software instruction and operate similarly to a jump or branch instruction. An external interrupt, or "hardware interrupt," is caused by an external hardware module. As an example, many computer systems use interrupt-driven I/O, a process where pressing a key on the keyboard or clicking a button on the mouse triggers an interrupt. The processor stops what it is doing, reads the input from the keyboard or mouse, and then returns to the program it was running.

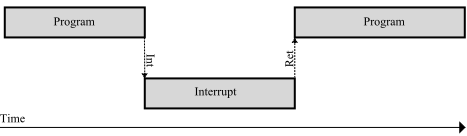

The image above shows conceptually how an interrupt happens:

The grey bars represent the control flow. The top bar is the program that is currently running, and the bottom bar is the interrupt service routine (ISR). Notice that when the interrupt (Int) occurs, the program stops executing and the microcontroller begins to execute the ISR. Once the ISR is complete, the microcontroller returns to processing the program where it left off.
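The control flow described above can be mimicked with a small simulation. This is only a sketch: the function `run`, its parameters, and the instruction labels are hypothetical names chosen for illustration, and a real processor saves and restores the program counter in hardware rather than in a Python variable.

```python
def run(program, isr, interrupt_at):
    """Execute `program` step by step, diverting to `isr` when Int arrives.

    program, isr: lists of instruction labels (illustrative only).
    interrupt_at: index of the program instruction during which Int is raised.
    Returns the labels in the order they were executed.
    """
    trace = []
    pc = 0
    while pc < len(program):
        trace.append(program[pc])   # the current instruction is finished first
        if pc == interrupt_at:
            saved_pc = pc + 1       # remember where the program must resume
            trace.extend(isr)       # run the ISR to completion
            pc = saved_pc           # "return from interrupt"
        else:
            pc += 1
    return trace


trace = run(["main0", "main1", "main2", "main3"], ["isr0", "isr1"], interrupt_at=1)
print(trace)
```

The printed trace shows the ISR labels sandwiched between `main1` and `main2`, which is the detour drawn in the figure.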

Interrupt performance depends on both hardware and software. The two most important properties of interrupt performance are the IRQ latency and throughput.[1]

Modern hardware and software systems are often so complicated that it's practically impossible to deduce interrupt latency ahead of time by looking at source code and datasheets, so often it is directly measured by experiment.[1][2]
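As a rough illustration of what such an experiment produces, the sketch below summarizes a set of measured latencies. It assumes that two timestamps were captured for each interrupt (for example with a logic analyzer or a hardware timer): the time of the request and the time the first ISR instruction ran. The function name, the field names, and the sample numbers are all made up for illustration.

```python
import statistics


def summarize_latency(request_ns, entry_ns):
    """Summarize interrupt latency from paired timestamps (in nanoseconds).

    request_ns[i]: when the i-th interrupt was requested.
    entry_ns[i]:   when the first ISR instruction for that request ran.
    """
    latencies = [entry - request for request, entry in zip(request_ns, entry_ns)]
    return {
        "min": min(latencies),
        "max": max(latencies),                       # worst case observed
        "mean": statistics.mean(latencies),
        "spread": max(latencies) - min(latencies),   # worst case minus best case
    }


# Made-up sample data, for illustration only.
requests = [1000, 2000, 3000, 4000]
entries = [1120, 2095, 3310, 4105]
summary = summarize_latency(requests, entries)
print(summary)
```

A measured maximum is only the worst case seen so far, not a guaranteed bound, so such experiments are usually run for a long time and under load.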

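Some processors have instructions that take many cycles to execute. Because a processor typically finishes the current instruction before it responds to an interrupt, such instructions make worst-case latency worse, and some authors suggest avoiding them.[3]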

Other processors are specifically designed to give predictable and lower latency response times.[4]

Interrupting an interrupt

What happens when external hardware requests another interrupt while the processor is already in the middle of executing the ISR for a previous interrupt request?

When the first interrupt is requested, hardware in the processor causes it to finish the current instruction, disable further interrupts, and jump to the interrupt handler.

The processor ignores further interrupts until it gets to the part of the interrupt handler that has the "return from interrupt" instruction, which re-enables interrupts.

If an interrupt request occurs while interrupts are turned off, some processors will immediately jump to that interrupt handler as soon as interrupts are turned back on. With this sort of processor, an interrupt storm "starves" the main loop background task. Other processors execute at least one instruction of the main loop before handling the interrupt,[5][6][7] so the main loop may execute extremely slowly, but at least it never "starves".
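The difference between the two behaviours can be seen in a toy model of an interrupt storm. In this sketch a request is pending at every step, and each step is either one complete ISR run or one main-loop instruction; the function name and this step model are simplifying assumptions, not a description of any particular processor.

```python
def main_loop_progress(steps, guarantee_one_instruction):
    """Count main-loop instructions executed while a request is always pending.

    guarantee_one_instruction: if True, one main-loop instruction always runs
    after a return from interrupt before the next interrupt is taken.
    Returns (main_instructions, isr_runs).
    """
    main_instructions = 0
    isr_runs = 0
    just_returned = False            # True right after "return from interrupt"
    for _ in range(steps):
        must_run_main = guarantee_one_instruction and just_returned
        if not must_run_main:
            isr_runs += 1            # pending request is taken immediately
            just_returned = True
        else:
            main_instructions += 1   # the guaranteed main-loop instruction
            just_returned = False
    return main_instructions, isr_runs


print(main_loop_progress(10, guarantee_one_instruction=False))
print(main_loop_progress(10, guarantee_one_instruction=True))
```

Without the guarantee the main loop makes no progress at all; with it, the main loop advances by one instruction per ISR run, which is slow but never zero.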

A few processors have an interrupt controller that supports "round robin scheduling", which can be used to prevent a different kind of "starvation" of low-priority interrupt handlers.[8]
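A generic sketch of the idea, not the behaviour of any specific interrupt controller: with fixed priorities the lowest-numbered pending source always wins, while round-robin arbitration starts its search just after the source served last. The function names and the three-source setup are assumptions made for illustration.

```python
def pick_fixed_priority(pending):
    """Always serve the lowest-numbered (highest-priority) pending source."""
    return min(pending)


def pick_round_robin(pending, last_served, n_sources):
    """Serve the first pending source after `last_served`, wrapping around."""
    for offset in range(1, n_sources + 1):
        candidate = (last_served + offset) % n_sources
        if candidate in pending:
            return candidate
    return None  # nothing is pending


N_SOURCES = 3
pending = {0, 1, 2}          # every source keeps requesting service

fixed_order = [pick_fixed_priority(pending) for _ in range(6)]

rr_order = []
last = N_SOURCES - 1         # so that source 0 is considered first
for _ in range(6):
    last = pick_round_robin(pending, last, N_SOURCES)
    rr_order.append(last)

print(fixed_order)
print(rr_order)
```

Under fixed priority, sources 1 and 2 are never served while source 0 keeps requesting; under round robin every source gets a turn.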

Instead of a "disable interrupt" instruction that defers interrupts indefinitely, some processors have a "lock" instruction that temporarily defers interrupts for exactly 16 machine instructions, after which deferred interrupts (if any) are automatically taken.[9]
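The useful property of such an instruction is that the deferral is bounded. The sketch below models this under a stated assumption: the "lock" instruction and the 16 instructions that follow it cannot be interrupted, and a request arriving in that window is taken as soon as the window ends. The function name and the instruction-index model are hypothetical and are not taken from the cited manual.

```python
LOCK_WINDOW = 16  # from the text: interrupts are deferred for exactly 16 instructions


def taken_before(request_at, lock_at=None):
    """Index of the instruction before which the interrupt is taken.

    request_at: index of the instruction executing when the request arrives.
    lock_at:    index of the "lock" instruction, or None if no lock is used.
    Assumption: the lock instruction and the 16 instructions after it
    cannot be interrupted.
    """
    if lock_at is not None and lock_at <= request_at <= lock_at + LOCK_WINDOW:
        return lock_at + LOCK_WINDOW + 1   # deferred until the window has elapsed
    return request_at + 1                  # taken once the current instruction completes


print(taken_before(15))               # no lock in effect
print(taken_before(15, lock_at=10))   # request arrives inside the window
print(taken_before(30, lock_at=10))   # request arrives after the window

# Extra delay caused by the lock, over a range of arrival times.
worst_extra_delay = max(taken_before(r, lock_at=10) - (r + 1) for r in range(40))
print(worst_extra_delay)
```

In this model the extra delay never exceeds 16 instructions, whereas a forgotten "enable interrupt" after a "disable interrupt" would defer interrupts without limit.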

Often novice firmware programmers write interrupt service routines that leave interrupts completely turned off far too long, leading to difficult-to-debug problems when the processor misses interrupts from the same hardware peripheral or unrelated hardware. There are several approaches to writing "interrupt handlers that can be interrupted",[10] including second-level interrupt handlers and nested interrupts.

second-level interrupt handlers

Many modern general-purpose OSes, including RTOSes, have "second-level interrupt handlers" (SLIHs) that run like normal user-level processes, with interrupts turned on. They can therefore be interrupted by any external hardware interrupt request, and also by the timer that the pre-emptive OS uses to know when to cycle through all the runnable processes.

The first-level interrupt handler (FLIH) is directly triggered by the hardware interrupt, and should be crafted to minimize the time it keeps interrupts turned off before it returns. The FLIH typically stores some data in a buffer and sets a flag that tells the OS to run the corresponding second-level interrupt handler on the data in that buffer.
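The split between the first-level handler (FLIH) and the second-level handler (SLIH) can be sketched as follows. Everything here is a simulation with hypothetical names (`flih`, `slih`, `scheduler_tick`, `rx_buffer`); a real OS would provide its own queueing and scheduling primitives, and the "slow work" is stood in for by converting each byte to an upper-case character.

```python
from collections import deque

rx_buffer = deque()     # data handed from the FLIH to the SLIH
slih_pending = False    # flag telling the (simulated) OS to run the SLIH
processed = []          # results of the slow processing


def flih(raw_byte):
    """First-level handler: runs with interrupts off, so it does the bare minimum."""
    global slih_pending
    rx_buffer.append(raw_byte)   # store the data
    slih_pending = True          # request the second-level handler


def slih():
    """Second-level handler: runs later, with interrupts on, and does the slow work."""
    global slih_pending
    slih_pending = False         # clear first, so a new request is not lost
    while rx_buffer:
        processed.append(chr(rx_buffer.popleft()).upper())


def scheduler_tick():
    """Stand-in for the OS scheduler noticing the flag."""
    if slih_pending:
        slih()


for byte in b"ok":      # two simulated hardware interrupts
    flih(byte)
scheduler_tick()
print(processed)
```

The FLIH touches only the buffer and the flag, so the time spent with interrupts off does not depend on how expensive the later processing is.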

More details in other Wikibooks: Operating System Design/Scheduling Processes/Preemption#interrupt latency and Embedded Systems/Interrupts.

nested interrupts

A few processors support "nested interrupts". The first part of the interrupt handler should be crafted to minimize the time it keeps interrupts turned off, and then it turns interrupts back on as quickly as possible.[2] The rest of the routine, similar to second-level interrupt handlers, doesn't hurt latency because it runs with interrupts turned on. However, this introduces the potential for the same interrupt routine to be triggered again before it has finished, which requires re-entrant interrupt handlers; these may make interrupt latency worse.[11]
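The re-entrancy problem can be made visible with a small simulation. In this sketch each handler has a short part with interrupts off, then re-enables interrupts, at which point the next queued request (if any) preempts it. The function `handle`, the queue of requests, and the source names are assumptions for illustration only.

```python
def handle(irq, queued, trace, depth=0):
    """Simulated nested ISR.

    irq:    name of the interrupt source being serviced.
    queued: requests that will arrive while handlers are running.
    trace:  list collecting ("enter"/"exit", source, nesting depth) events.
    """
    trace.append(("enter", irq, depth))   # short critical part, interrupts off
    # Interrupts are back on here, so a queued request may preempt this handler.
    if queued:
        handle(queued.pop(0), queued, trace, depth + 1)
    trace.append(("exit", irq, depth))    # rest of the handler, then return


trace = []
handle("timer", ["uart", "timer"], trace)
for event in trace:
    print(event)
```

The trace shows the `timer` handler entered a second time at depth 2 while its first invocation at depth 0 has not yet exited, which is exactly the case a re-entrant handler must tolerate.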

interrupt latency

From large-scale distributed systems to tiny embedded computers, predictably low-latency interrupt handling is important.[12]

Hard real-time systems require deterministic (low-jitter) interrupt handling.[13]

Further Reading

1. Oliver Horst; Johannes Wiesböck; Raphael Wild; Uwe Baumgarten. "Quantifying the Latency and Possible Throughput of External Interrupts on Cyber-Physical Systems".
2. Jack Ganssle. "Interrupt Latency".
3. David Kleidermacher. "Minimizing Interrupt Response Time".
4. Peter Harris. "latency measurement".
5. Q. "AVR Guide: Interrupts".
6. G. C. Hill. "ATmega interrupt processing". p. 9.
7. Nick Gammon. "Do interrupts interrupt other interrupts on Arduino?". Quote: "the processor is designed to guarantee that when it transitions from interrupts not enabled, to interrupts enabled, one more instruction is always executed."
8. "AVR1503: Xplain training - XMEGA Programmable Multi Interrupt Controller".
9. "System 801 Principles of Operation". 1976. p. 59.
10. Michael B. Jones; Stefan Saroiu. "Predictable Scheduling for a Soft Modem".
11. Jim Harrison. "Real Time: Some Notes on Microcontroller Interrupt Latency". Quote: "This avoids the need for re-entrant interrupt handlers, which have a negative effect on interrupt latency."
12. Benedict Herzog; Luis Gerhorst; Bernhard Heinloth; Stefan Reif; Timo Hönig; Wolfgang Schröder-Preikschat. "INTspect: Interrupt Latencies in the Linux Kernel". doi:10.1109/SBESC.2018.00021
13. Jonatan Lövgren. "Increasing Performance and Predictability of a Real-Time Kernel Using Hardware Acceleration". 2016.