RAC Attack - Oracle Cluster Database at Home/Setup OCFS2


- Open a terminal as the root user on collabn1.

- Create mountpoints on both nodes for the two OCFS2 volumes, /u51 and /u52. Afterwards, type exit to return to collabn1.

```
[root@collabn1 ~]# mkdir /u51
[root@collabn1 ~]# mkdir /u52
[root@collabn1 ~]# ssh collabn2
root@collabn2's password: racattack
[root@collabn2 ~]# mkdir /u51
[root@collabn2 ~]# mkdir /u52
```
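The mountpoint step can be made safe to repeat. The sketch below uses mkdir -p, which does not fail if a directory already exists. The optional prefix argument is a hypothetical addition that lets you try the function in a scratch directory first; with no argument it acts on the real /u51 and /u52.

```bash
#!/bin/bash
# Sketch: create the OCFS2 mountpoints idempotently.
# PREFIX is a hypothetical option (default: empty, i.e. the real /);
# point it at a scratch directory to try the function safely.
create_mountpoints() {
  local prefix="${1:-}"
  # mkdir -p succeeds even if the directories already exist,
  # so the step can be re-run on either node without errors.
  mkdir -p "${prefix}/u51" "${prefix}/u52"
}
```

As root, call create_mountpoints on each node. Alternatively, from collabn1 run ssh root@collabn2 'mkdir -p /u51 /u52' to handle the second node without an interactive session.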

- Install the OCFS2 packages from the OEL (Oracle Enterprise Linux) installation media, then load the O2CB modules. Do the same on collabn2, then type exit to return to collabn1.

```
[root@collabn1 ~]# cd /mnt
# From Enterprise Linux 5 Disk 3
rpm -Uvh */*/ocfs2-tools-1.*
rpm -Uvh */*/ocfs2-*el5-*
rpm -Uvh */*/ocfs2console-*
[root@collabn1 mnt]# /etc/init.d/o2cb load
Loading module "configfs": OK
Mounting configfs filesystem at /sys/kernel/config: OK
Loading module "ocfs2_nodemanager": OK
Loading module "ocfs2_dlm": OK
Loading module "ocfs2_dlmfs": OK
Creating directory '/dlm': OK
Mounting ocfs2_dlmfs filesystem at /dlm: OK
[root@collabn1 ~]# ssh collabn2
root@collabn2's password: racattack
[root@collabn2 ~]# cd /mnt
# From Enterprise Linux 5 Disk 3
rpm -Uvh */*/ocfs2-tools-1.*
rpm -Uvh */*/ocfs2-*el5-*
rpm -Uvh */*/ocfs2console-*
[root@collabn2 mnt]# /etc/init.d/o2cb load
Loading module "configfs": OK
Mounting configfs filesystem at /sys/kernel/config: OK
Loading module "ocfs2_nodemanager": OK
Loading module "ocfs2_dlm": OK
Loading module "ocfs2_dlmfs": OK
Creating directory '/dlm': OK
Mounting ocfs2_dlmfs filesystem at /dlm: OK
```
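To confirm that the load succeeded on each node, you can check /proc/modules for the four modules reported by o2cb load. The following is a minimal sketch. It assumes configfs is built as a loadable module, as the "Loading module configfs" line in the output suggests; on a kernel with configfs built in, that module would not appear. The optional file argument is a hypothetical override for testing against a saved copy.

```bash
#!/bin/bash
# Sketch: confirm that the modules reported by "o2cb load" are present.
# Reads /proc/modules by default; the optional argument is a
# hypothetical override so the check can be tried against a saved copy.
check_o2cb_modules() {
  local modules_file="${1:-/proc/modules}"
  local missing=0 mod
  for mod in configfs ocfs2_nodemanager ocfs2_dlm ocfs2_dlmfs; do
    # The first whitespace-separated field of each line is the module name.
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "missing module: $mod" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run check_o2cb_modules on each node. It returns 0 when all four modules are loaded and names any missing ones on stderr.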

- From the terminal window on collabn1, launch ocfs2console as root.

- Choose CONFIGURE NODES… from the CLUSTER menu. If a notification says that the cluster has been started, acknowledge it by clicking the Close button.

- Click ADD and enter the node name collabn1 and its private IP address 172.16.100.51. Accept the default port and click OK to save.

- Click ADD again and enter collabn2 and 172.16.100.52. Click APPLY, then click CLOSE to close the window.
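For reference, ocfs2console saves the node list in /etc/ocfs2/cluster.conf. The sketch below writes an equivalent file for this lab. It assumes the console numbers the nodes 0 and 1 and uses the default o2net port 7777, so treat it as something to compare against the file the console actually wrote, not as authoritative. In this format, stanza headers start in the first column, parameters are indented with a tab, and each node's name must match that node's hostname.

```bash
#!/bin/bash
# Sketch: write the node list that ocfs2console is expected to save in
# /etc/ocfs2/cluster.conf for this lab. Node numbers 0/1 and port 7777
# (the o2net default) are assumptions; compare with your own file.
# Stanza headers start in column 1; parameters are indented with a tab.
write_cluster_conf() {
  local dest="${1:-/etc/ocfs2/cluster.conf}"
  local -a names=(collabn1 collabn2)
  local -a ips=(172.16.100.51 172.16.100.52)
  local i
  {
    for i in "${!names[@]}"; do
      printf 'node:\n\tip_port = 7777\n\tip_address = %s\n\tnumber = %d\n\tname = %s\n\tcluster = ocfs2\n\n' \
        "${ips[$i]}" "$i" "${names[$i]}"
    done
    # The cluster name "ocfs2" matches the cluster reported by o2cb.
    printf 'cluster:\n\tnode_count = %d\n\tname = ocfs2\n' "${#names[@]}"
  } > "$dest"
}
```

For example, write_cluster_conf /tmp/cluster.conf.test followed by diff /tmp/cluster.conf.test /etc/ocfs2/cluster.conf shows any differences, including stanza order. If you maintain the file by hand instead of using the console, you must also copy it to collabn2, for example with scp /etc/ocfs2/cluster.conf root@collabn2:/etc/ocfs2/.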

-

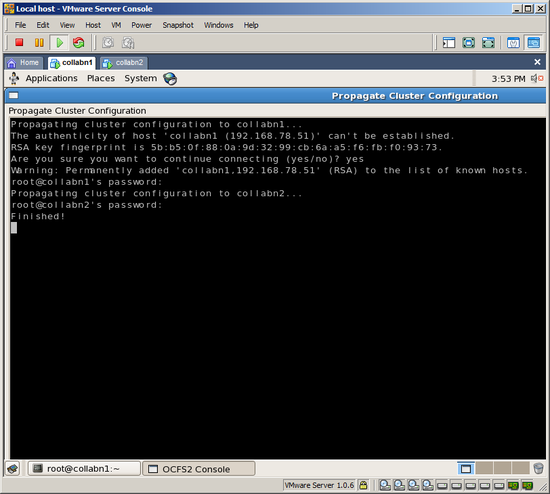

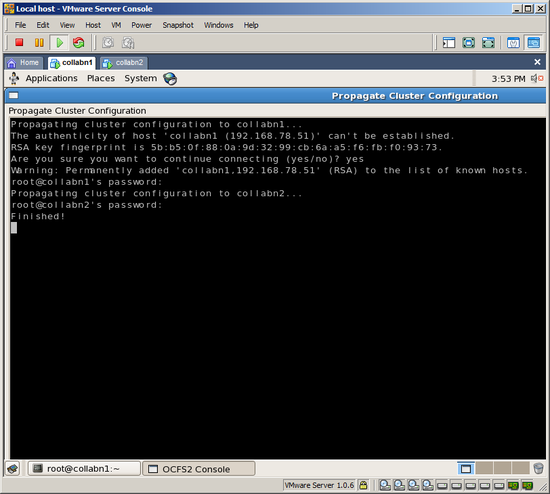

Choose PROPAGATE CONFIGURATION… from the CLUSTER menu. If you are prompted to accept host keys then type YES. Type the root password racattack at the both prompts. When you see the message “Finished!” then press <ALT-C> to close the window.

-

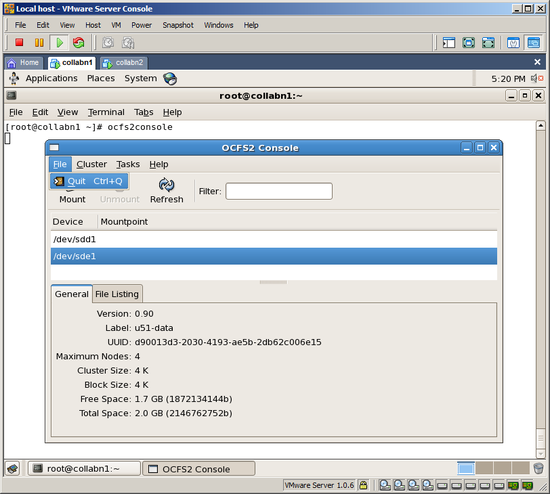

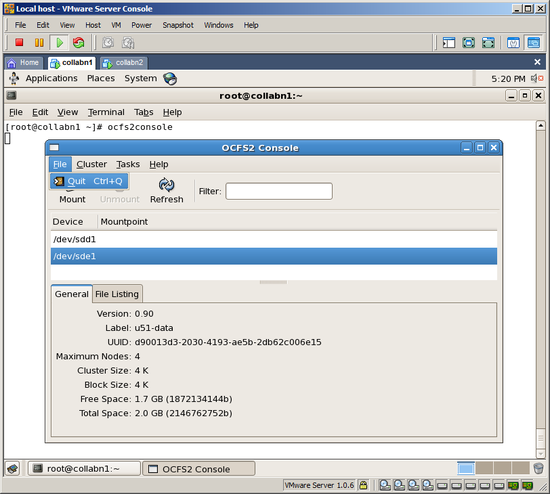

From the TASKS menu, choose FORMAT to create the OCFS filesystem. Select /dev/sdb1 and type the volume label u51-data. Leave the rest of the options at their defaults and click OK to format the volume. Confirm by clicking YES.

- Repeat the previous step for /dev/sdc1, this time with the volume label u52-backup.
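The two FORMAT steps also have a command-line equivalent in mkfs.ocfs2, which sets the volume label with -L. Formatting destroys data, so this sketch only prints the commands unless DRY_RUN=0 is set. A shared volume is formatted once, from one node only. The sketch sets only the label; block size, cluster size and node slots are left to mkfs.ocfs2's defaults, which may differ from the console's choices (see mkfs.ocfs2(8)).

```bash
#!/bin/bash
# Sketch: command-line equivalent of the two FORMAT steps.
# Formatting destroys data, so by default this only prints the commands;
# set DRY_RUN=0 to execute them (as root, on ONE node only).
# Only the label is set; other mkfs.ocfs2 options stay at their defaults.
format_ocfs2_volumes() {
  local dry_run="${DRY_RUN:-1}"
  local pair dev label
  for pair in /dev/sdb1:u51-data /dev/sdc1:u52-backup; do
    dev="${pair%%:*}"     # text before the colon: the device
    label="${pair#*:}"    # text after the colon: the volume label
    if [ "$dry_run" = 1 ]; then
      echo "mkfs.ocfs2 -L $label $dev"
    else
      mkfs.ocfs2 -L "$label" "$dev" || return 1
    fi
  done
}
```

Run format_ocfs2_volumes first to review the commands. Only after checking the device names, run DRY_RUN=0 format_ocfs2_volumes.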

- Exit the OCFS2 console by selecting QUIT from the FILE menu.

- Configure OCFS2 on both nodes. We will set a conservative disk heartbeat dead threshold of 300 because VMware can be slow on some laptops. The threshold counts 2-second heartbeat iterations, so 300 allows roughly ten minutes before a node is declared dead. The default of 31 allows about one minute.

```
[root@collabn1 mnt]# /etc/init.d/o2cb configure
Configuring the O2CB driver.

This will configure the on-boot properties of the O2CB driver.
The following questions will determine whether the driver is loaded on
boot. The current values will be shown in brackets ('[]'). Hitting
<ENTER> without typing an answer will keep that current value. Ctrl-C
will abort.

Load O2CB driver on boot (y/n) [n]: y
Cluster stack backing O2CB [o2cb]:
Cluster to start on boot (Enter "none" to clear) [ocfs2]:
Specify heartbeat dead threshold (>=7) [31]: 300
Specify network idle timeout in ms (>=5000) [30000]:
Specify network keepalive delay in ms (>=1000) [2000]:
Specify network reconnect delay in ms (>=2000) [2000]:
Writing O2CB configuration: OK
Cluster ocfs2 already online
[root@collabn1 ~]# ssh collabn2
root@collabn2's password: racattack
[root@collabn2 mnt]# /etc/init.d/o2cb configure
Configuring the O2CB driver.

This will configure the on-boot properties of the O2CB driver.
The following questions will determine whether the driver is loaded on
boot. The current values will be shown in brackets ('[]'). Hitting
<ENTER> without typing an answer will keep that current value. Ctrl-C
will abort.

Load O2CB driver on boot (y/n) [n]: y
Cluster stack backing O2CB [o2cb]:
Cluster to start on boot (Enter "none" to clear) [ocfs2]:
Specify heartbeat dead threshold (>=7) [31]: 300
Specify network idle timeout in ms (>=5000) [30000]:
Specify network keepalive delay in ms (>=1000) [2000]:
Specify network reconnect delay in ms (>=2000) [2000]:
Writing O2CB configuration: OK
Starting O2CB cluster ocfs2: OK
```
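The heartbeat dead threshold entered at the prompt is an iteration count, not a number of seconds. The OCFS2 FAQ gives the resulting timeout as (threshold - 1) × 2 seconds. The minimal sketch below converts the value. It assumes that o2cb configure stores the value as O2CB_HEARTBEAT_THRESHOLD in /etc/sysconfig/o2cb, which is the usual location on EL5; verify this on your own system.

```bash
#!/bin/bash
# Sketch: convert the O2CB heartbeat dead threshold (an iteration count)
# into seconds using the (threshold - 1) * 2 relation from the OCFS2 FAQ;
# each disk heartbeat iteration lasts 2 seconds.
hb_threshold_to_secs() {
  echo $(( ($1 - 1) * 2 ))
}

# Read O2CB_HEARTBEAT_THRESHOLD from the file written by "o2cb configure"
# (assumed to be /etc/sysconfig/o2cb) and print the timeout in seconds.
# Falls back to the default threshold of 31 if the variable is absent.
configured_hb_secs() {
  local conf="${1:-/etc/sysconfig/o2cb}"
  local threshold
  threshold=$(sed -n 's/^O2CB_HEARTBEAT_THRESHOLD=//p' "$conf" | tr -d '"')
  hb_threshold_to_secs "${threshold:-31}"
}
```

For example, hb_threshold_to_secs 300 prints 598, roughly ten minutes, while the default of 31 gives 60 seconds. Running configured_hb_secs on each node shows whether both nodes were configured with the same value.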

- Return to collabn1, the node where you ran ocfs2console, and reload the O2CB driver there. The cluster was already online on collabn1 when you ran configure, so the new settings only take effect after the reload.

```
[root@collabn2 mnt]# exit
logout
Connection to collabn2 closed.
[root@collabn1 mnt]# /etc/init.d/o2cb force-reload
Stopping O2CB cluster ocfs2: OK
Unmounting ocfs2_dlmfs filesystem: OK
Unloading module "ocfs2_dlmfs": OK
Unmounting configfs filesystem: OK
Unloading module "configfs": OK
Loading filesystem "configfs": OK
Mounting configfs filesystem at /sys/kernel/config: OK
Loading filesystem "ocfs2_dlmfs": OK
Mounting ocfs2_dlmfs filesystem at /dlm: OK
Starting O2CB cluster ocfs2: OK
```

- Edit /etc/fstab and add entries for the new file systems.

```
[root@collabn1 ~]# vi /etc/fstab
LABEL=u51-data    /u51  ocfs2  _netdev,datavolume,nointr  0 0
LABEL=u52-backup  /u52  ocfs2  _netdev,datavolume,nointr  0 0
```
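If you prefer not to edit /etc/fstab in vi, the sketch below appends the same two entries non-interactively. The helper names are hypothetical. Each line is appended only if an identical line is not already present, so re-running the helper does not create duplicates. Only exact matches are detected, so an existing entry with different spacing would still be added a second time.

```bash
#!/bin/bash
# Sketch: a non-interactive alternative to editing /etc/fstab in vi.
# add_fstab_line (hypothetical helper) appends LINE to FILE only if an
# identical line is not already there; differently spaced entries are
# NOT recognized as duplicates.
add_fstab_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

add_ocfs2_fstab_entries() {
  local file="${1:-/etc/fstab}"
  add_fstab_line "$file" 'LABEL=u51-data /u51 ocfs2 _netdev,datavolume,nointr 0 0'
  add_fstab_line "$file" 'LABEL=u52-backup /u52 ocfs2 _netdev,datavolume,nointr 0 0'
}
```

As root on each node, add_ocfs2_fstab_entries updates /etc/fstab. Back up the file first (for example cp /etc/fstab /etc/fstab.orig), since a bad fstab line can interfere with booting.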

- Mount the volumes and create directories for the Oracle database files (oradata) and the cluster files (cluster).

```
[root@collabn1 ~]# mount /u51
[root@collabn1 ~]# mount /u52
[root@collabn1 ~]# mkdir /u51/oradata
[root@collabn1 ~]# mkdir /u52/oradata
[root@collabn1 ~]# mkdir /u51/cluster
[root@collabn1 ~]# chown oracle:dba /u51/oradata /u52/oradata /u51/cluster
[root@collabn1 ~]# df -h
Filesystem            Size  Used Avail Use% Mounted on
/dev/sdb1             3.3G  279M  3.0G   9% /u51
/dev/sdc1             3.3G  279M  3.0G   9% /u52
```
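The mkdir and chown commands can be combined into one idempotent step with install -d, which creates each directory (including missing parents) and sets its owner and group. This is a sketch: owner and group default to oracle:dba as in the transcript, and the prefix argument is a hypothetical option for trying it in a scratch directory. Run it once, on one node, after both volumes are mounted.

```bash
#!/bin/bash
# Sketch: create the Oracle directories and set ownership in one step.
# install -d creates the directories (mode rwxr-xr-x by default) and
# applies -o/-g; re-running it is harmless.
# PREFIX, OWNER and GROUP are hypothetical parameters for testing.
make_oracle_dirs() {
  local prefix="${1:-}" owner="${2:-oracle}" group="${3:-dba}"
  install -d -o "$owner" -g "$group" \
    "${prefix}/u51/oradata" "${prefix}/u52/oradata" "${prefix}/u51/cluster"
}
```

As root on collabn1, with the volumes mounted, make_oracle_dirs is equivalent to the three mkdir commands plus the chown.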

- Log in to the second node, collabn2, as root and repeat the fstab and mount steps there. Because the volumes are shared, the directories created on collabn1 are already visible and do not need to be created again.

```
[root@collabn1 ~]# ssh collabn2
root@collabn2's password: racattack
[root@collabn2 ~]# vi /etc/fstab
LABEL=u51-data    /u51  ocfs2  _netdev,datavolume,nointr  0 0
LABEL=u52-backup  /u52  ocfs2  _netdev,datavolume,nointr  0 0
[root@collabn2 ~]# mount /u51
[root@collabn2 ~]# mount /u52
[root@collabn2 ~]# df -h
Filesystem            Size  Used Avail Use% Mounted on
/dev/sdb1             3.3G  279M  3.0G   9% /u51
/dev/sdc1             3.3G  279M  3.0G   9% /u52
[root@collabn2 ~]# ls -l /u5*
/u51:
total 0
drwxr-xr-x 2 oracle dba  3896 Sep 26 15:30 cluster
drwxr-xr-x 2 root   root 3896 Sep 26 15:26 lost+found
drwxr-xr-x 2 oracle dba  3896 Sep 26 15:29 oradata

/u52:
total 0
drwxr-xr-x 2 root   root 3896 Sep 26 15:26 lost+found
drwxr-xr-x 2 oracle dba  3896 Sep 26 15:30 oradata
```
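The ls -l check on collabn2 can be scripted. The sketch below reports each expected directory and returns non-zero if any is missing. A failure on collabn2 would suggest that the volume is not actually shared or not mounted. The prefix argument is a hypothetical option for a dry run. ocfs2-tools also ships mounted.ocfs2, whose -f (full detect) mode lists the nodes that have each OCFS2 device mounted; running it on either node is a complementary check.

```bash
#!/bin/bash
# Sketch: run on collabn2 after mounting. If the volumes really are shared,
# the directories created on collabn1 are visible here without recreating them.
# PREFIX is a hypothetical option for a dry run against a scratch directory.
verify_shared_dirs() {
  local prefix="${1:-}" d rc=0
  for d in u51/oradata u52/oradata u51/cluster; do
    if [ -d "${prefix}/$d" ]; then
      echo "ok: /$d"
    else
      echo "MISSING: /$d" >&2
      rc=1
    fi
  done
  return "$rc"
}
```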

- Optionally, examine /var/log/messages and dmesg output for status messages related to OCFS2.
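The following filter narrows the optional log check to OCFS2-related lines. It assumes that relevant messages mention one of the subsystem names ocfs2, o2net, o2hb, o2cb or o2dlm. Treat that as an assumption and adjust the pattern to what your kernel actually logs.

```bash
#!/bin/bash
# Sketch: keep only lines that mention OCFS2 subsystems (case-insensitive).
# The pattern list is an assumption, not an exhaustive catalogue.
ocfs2_log_lines() {
  grep -iE 'ocfs2|o2net|o2hb|o2cb|o2dlm'
}
```

Typical usage is dmesg | ocfs2_log_lines, or ocfs2_log_lines < /var/log/messages as root.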